Own Your AI. Keep Your Data.

Run open-source LLMs inside your network. Private, fast, and under your control.

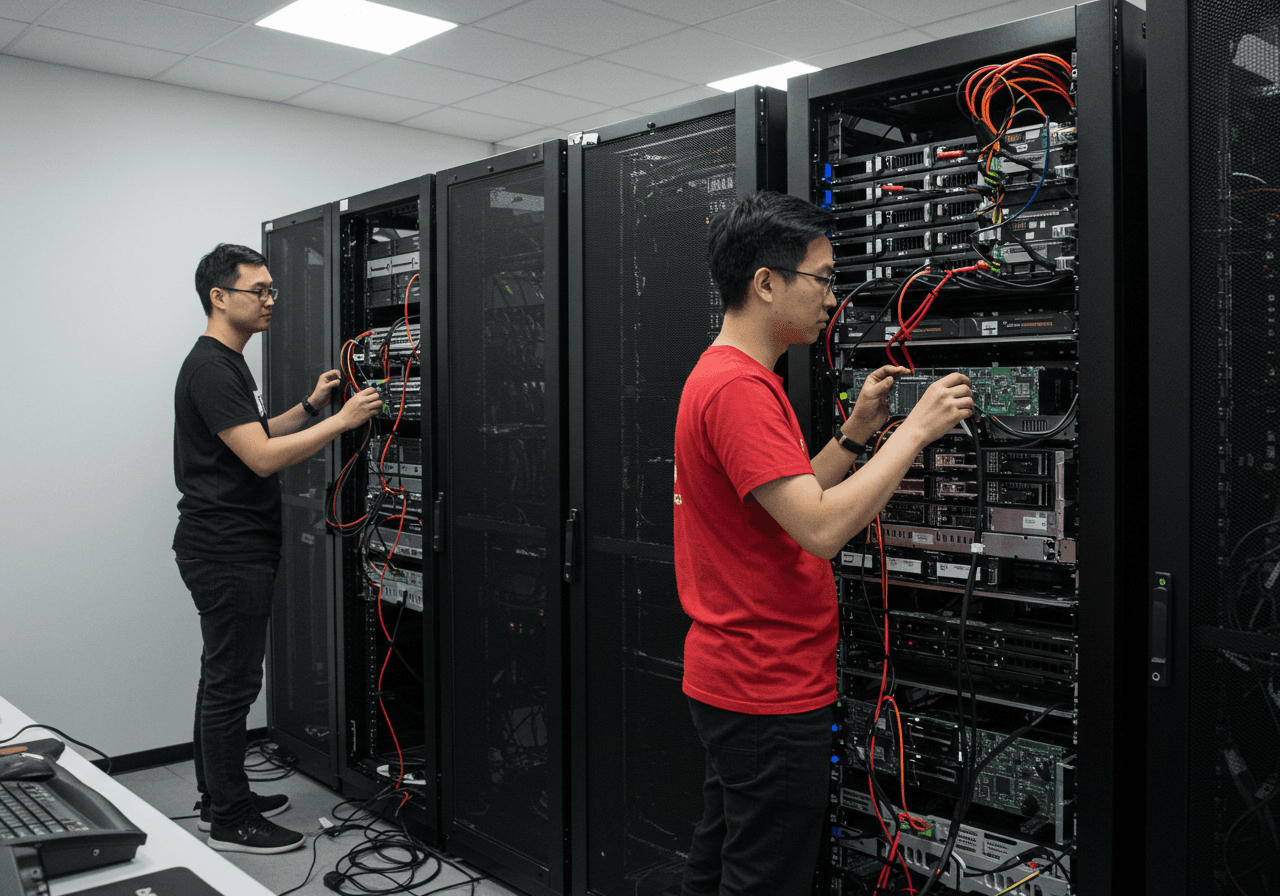

Total Data Sovereignty

Guarantee data privacy and compliance by keeping everything inside your network.

Instant Integration

Your developers can use their favorite tools by simply changing an API endpoint.

Trusted in Sydney, Melbourne, Brisbane, and Newcastle by

Run where the auditors are happy

Deploy in a VPC, a private cloud, or on-premises with an air gap.

Zero data leaves

Sensitive information never leaves your network, which helps you meet SOC 2 and HIPAA requirements.

Local performance

Cut network hops and jitter for more reliable responses.

Drop-in integration

Your developers can use the tools they already know and love. Simply swap a single API endpoint.
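As a rough illustration of what swapping an endpoint can look like, here is a minimal sketch. It assumes the in-network server exposes an OpenAI-compatible `/chat/completions` route, which this page does not state. The host `llm.internal.example`, the `LLM_BASE_URL` variable, and the model name are hypothetical placeholders.

```python
import json
import os
import urllib.request
from typing import Optional

# Hypothetical internal endpoint, assumed to speak an OpenAI-compatible chat API.
DEFAULT_BASE_URL = "http://llm.internal.example:8000/v1"


def build_chat_request(
    prompt: str, model: str, base_url: Optional[str] = None
) -> urllib.request.Request:
    """Build (but do not send) a chat completion request against base_url."""
    # The base URL is the only value that differs between hosted and in-network use.
    base = (base_url or os.environ.get("LLM_BASE_URL", DEFAULT_BASE_URL)).rstrip("/")
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=f"{base}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # "example-model" is a placeholder, not a real model identifier.
    req = build_chat_request("Summarise this ticket.", model="example-model")
    print(req.full_url)
```

The request is only constructed here, not sent. Passing it to `urllib.request.urlopen` would perform the call once a real server is reachable at that address.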

No learning curve

Teams ship features faster because nothing gets rewritten.

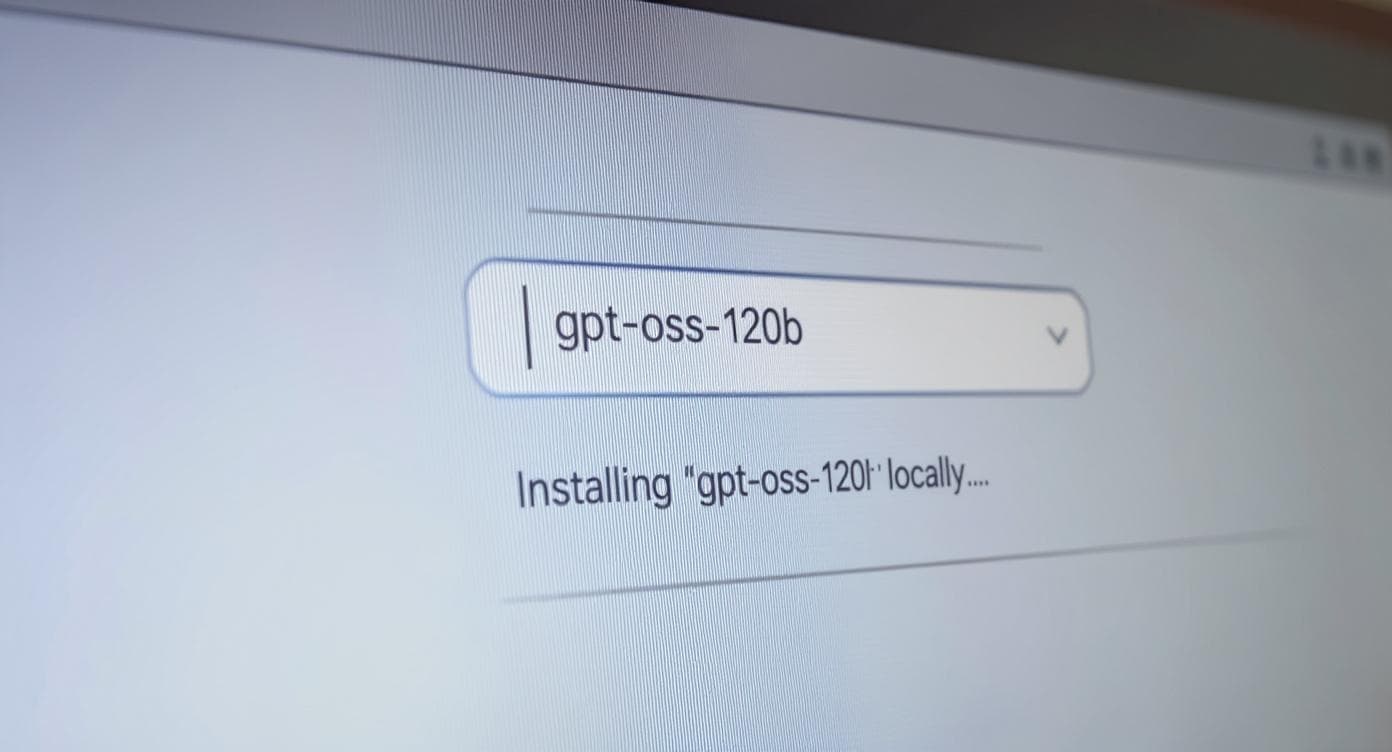

Model freedom

Swap between open-source models (from OpenAI, Qwen, and others) without changing your app.
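One way to keep the app unchanged when a model is swapped is to refer to models by task and resolve the actual name from configuration. The sketch below assumes that approach. The `LLM_MODELS` variable and both model names are hypothetical, and real identifiers depend on what your server hosts.

```python
import json
import os

# Hypothetical defaults; replace with the identifiers your server actually hosts.
DEFAULT_MODELS = {"chat": "example-chat-model", "code": "example-code-model"}


def resolve_model(task: str, env=os.environ) -> str:
    """Return the model name for a task, letting LLM_MODELS override the defaults."""
    # LLM_MODELS is expected to hold a JSON object such as {"chat": "another-model"}.
    overrides = json.loads(env.get("LLM_MODELS", "{}"))
    models = {**DEFAULT_MODELS, **overrides}
    return models[task]
```

Application code then asks for `resolve_model("chat")` and never hard-codes a model name, so a swap becomes a configuration change rather than a code change.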

From Theory to Reality

Offline AI in Action: A Developer's Guide to Local LLMs

Discover why running large language models locally is a game-changer for data privacy, security, and speed. In this session, SSW Solution Architect Jernej Kavka provides a hands-on guide to the essential tools and techniques, culminating in a live demo of an offline AI copilot for home automation.

Tech wisdom from the trenches

At SSW, we’ve been knee-deep in projects for years: debugging giant solutions, fixing game-stopping bugs, and staying on the cutting edge. No guesswork, just proven tips that help you build better software.