Do you know the potential security risks of using ChatGPT?

Last updated by Lewis Toh [SSW] 9 months ago.

ChatGPT is an AI language model developed by OpenAI. It is generally considered safe to use because OpenAI implements various security measures, data handling practices, and privacy policies. However, users should be aware of the potential risks and follow best practices when using the platform.

OpenAI is a third-party platform, so you should not make assumptions about how it processes or retains data. OpenAI can also change its policies at any time, so something stated today may be different tomorrow.

You should never submit any confidential information to ChatGPT. In particular, never submit any information that identifies, or could be used to identify, an individual (e.g. name, address, date of birth, or phone number).

Key points:

- Security measures by OpenAI:
  - Encryption
  - Access controls
  - External security audits
  - Bug bounty program
  - Incident response plans

- Responsible data handling practices by OpenAI:
  - Transparency about data collection purposes
  - Data storage and retention policies (30 days)
  - Controlled data sharing with third parties
  - Compliance with regional data protection regulations
  - Respecting user rights and control over their data

-

ChatGPT is not confidential:

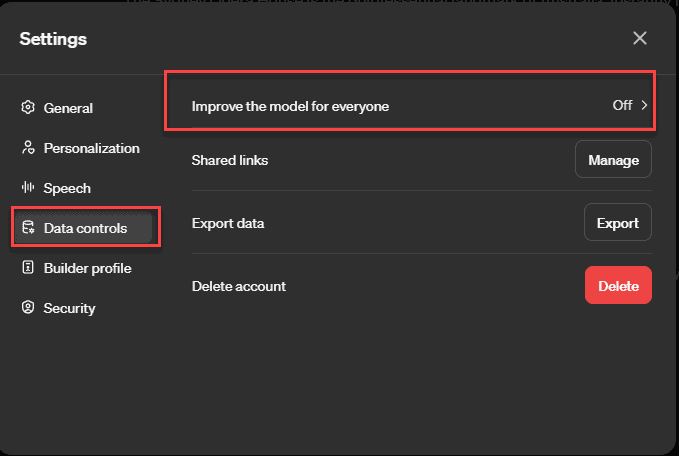

- All conversations are used as training data by default, but this can be turned off

- Users should avoid sharing sensitive information

- Potential risks of using ChatGPT:
  - Data breaches
  - Unauthorized access to confidential information
  - Generation of biased or inaccurate information

- Best practices for using ChatGPT:
  - Never share or submit sensitive or confidential information to ChatGPT
  - Review the privacy policies of any platforms that use ChatGPT
  - Use anonymous or pseudonymous accounts
  - Monitor data retention policies for changes

- Current regulations:
  - No specific regulations yet for AI systems like ChatGPT
  - ChatGPT must still comply with existing data protection and privacy regulations (e.g. GDPR, CCPA)
  - The EU's proposed AI Act could become the first comprehensive regulation of AI technologies

Always exercise caution when using ChatGPT and avoid sharing sensitive information, because data retention policies and security measures can only provide limited protection.