Rules to Better Azure - 41 Rules

The Azure cloud platform is more than 200 products and cloud services designed to help you bring new solutions to life—to solve today's challenges and create the future. Build, run, and manage applications across multiple clouds, on-premises, and at the edge, with the tools and frameworks of your choice.

Need help with Microsoft Azure? Check SSW's Azure consulting page.

Getting application architecture right is super hard and often choosing the wrong architecture at the start of a project causes immense pain further down the line when the limitations start to become apparent.

Azure has 100s of offerings and it can be hard to know what the right services are to choose for any given application.

However, there are a few questions that Azure MVP Barry Luijbregts has come up with to help narrow down the right services for each business case.

Video: How to choose which services to use in Azure | Azure Friday

There are 2 overarching questions to ask when building out Azure architecture:

1. How do you run the app?

Azure offers heaps of models for running your app. So, to choose the right one you need to break this question down into 3 further parts:

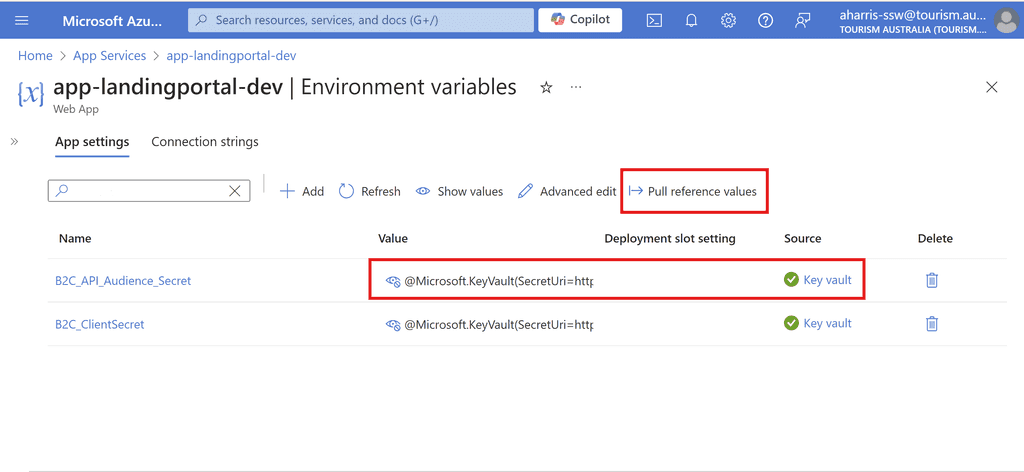

1.1 Control - How much is needed?

There are many different levels of control that can be provided, from a VM, which provides complete control over every aspect, to an out-of-the-box SaaS solution, which provides very little control.

Keep in mind that the more control you have, the more maintenance is required, which means higher costs. It is crucial to find the sweet spot between control and maintenance costs: is the extra control actually necessary?

- Infrastructure as a Service (IaaS)
  - Consumer responsible for everything beyond the hardware
  - e.g. Azure VM, AKS

- Platform as a Service (PaaS)
  - Consumer responsible for App configuration, building the app and server configuration
  - e.g. Azure App Service

- Functions as a Service (FaaS) -- the Logic
  - Consumer responsible for App configuration and building the app
  - e.g. Azure Functions, Azure Logic Apps

- Software as a Service (SaaS)
  - Consumer responsible for only App configuration

Figure: The different levels of control

1.2 Location - Where do I need the app to run?

Choosing where to run your app

- Azure

- On-Premises

- Other Platforms e.g. AWS, Netlify, GitHub Pages

- Hybrid

1.3 Frequency - How often does the app need to run?

Evaluating how often an app needs to run is crucial for determining the right costing model. A website or app that needs to be available 24/7 is suited to a different model than something which is called infrequently such as a scheduled job that runs once a day.

There are 2 models:

- Runs all the time
  - Classic (Pay per month) e.g. Azure App Service, Azure VM, AKS

- Runs Occasionally
  - Serverless (Pay per execution) e.g. Azure Functions, Azure Logic Apps
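To make the frequency question concrete, here is a minimal sketch that compares a flat monthly price with a per-execution price and finds the break-even point. The prices and function names are hypothetical placeholders for illustration, not real Azure rates; check the Azure pricing pages for actual figures.

```python
def monthly_cost_serverless(executions_per_month: int, price_per_execution: float) -> float:
    """Cost of a pay-per-execution model for one month."""
    return executions_per_month * price_per_execution


def break_even_executions(flat_monthly_price: float, price_per_execution: float) -> float:
    """Executions per month at which both models cost the same."""
    return flat_monthly_price / price_per_execution


# Hypothetical prices for illustration only (not real Azure rates).
FLAT_MONTHLY_PRICE = 50.0     # always-on plan, per month
PRICE_PER_EXECUTION = 0.0001  # serverless, per execution

# A job that runs once a day vs. an API that is called two million times a month.
daily_job = monthly_cost_serverless(30, PRICE_PER_EXECUTION)
busy_api = monthly_cost_serverless(2_000_000, PRICE_PER_EXECUTION)
threshold = break_even_executions(FLAT_MONTHLY_PRICE, PRICE_PER_EXECUTION)
```

Under these assumed prices the daily job costs a fraction of a cent on the per-execution model, while the busy API costs more than the flat plan, so the always-on model is the cheaper choice for it.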

2. How do you store your data?

Azure has tonnes of ways to store data that have vastly different capabilities and costing models. So to get it right, ask 2 questions.

2.1 Purpose - What will the data be used for?

The first question is what is the purpose of the data. Data that is used for everyday apps has very different storage requirements to data that is used for complex reporting.

So data can be put into 2 categories:

- Online Transaction Processing (OLTP)
  - For general application usage e.g. storing customer data, invoice data, user data etc

- Online Analytical Processing (OLAP)
  - For data analytics e.g. reporting

2.2 Structure - What type of data is going to be stored?

Data comes in many shapes and forms. For example, it might have been normalized into a fixed structure or it might come with variable structure.

Classify it into 2 categories:

- Relational data e.g. a fully normalized database

- Unstructured data e.g. document data, graph data, key/value data

Example Scenario

These questions can be applied to any scenario, but here is one example:

Let's say you have a learning management system running as a React SPA and it stores information about companies, users, learning modules and learning items. Additionally the application administrators can build up learning items with a variable amount of custom fields, images, videos, documents and other content as they want.

It also has a scheduled job that runs daily, picks up all the user data and puts it into a database for reporting. This database for reporting needs to be able to store data from many different sources and process billions of records.

Q1: The App - Where to run the app?

Control - The customer doesn't need fine tuned control but does need to configure some server settings for the website.

Location - The app needs to run in Azure.

Frequency - The scheduled job runs occasionally (once a day...) while the website needs to be up all the time.

A1: The App - The best Azure services are

- An Azure App Service for the website, since it is a PaaS offering that provides server configuration and constant availability

- An Azure function for the scheduled Job, since it only runs occasionally and no server configuration is necessary

Q2: Data - How to store it?

Purpose - The data coming in for everyday usage is largely transactional while the reporting data is more for data analytics.

Structure - The data is mostly structured except for the variable learning items.

A2: Data - The best Azure Services are

- Azure SQL for the main everyday usage

- CosmosDB for the variable learning items

- Azure Synapse for the data analytics
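The questions above can be sketched as two small lookup functions. This is a deliberately simplified illustration, not a complete decision procedure: the function names, the `control` levels and the mapping itself are assumptions drawn from this example scenario, and real decisions involve more factors (cost, team skills, compliance).

```python
def suggest_compute(control: str, always_on: bool) -> str:
    """Map the control and frequency answers to a compute option.

    control: "full" (own the whole server), "some" (server settings only) or "none".
    """
    if control == "full":
        return "Azure VM"           # IaaS
    if always_on:
        return "Azure App Service"  # PaaS, pay per month
    return "Azure Functions"        # FaaS, pay per execution


def suggest_storage(purpose: str, relational: bool) -> str:
    """Map the purpose (OLTP/OLAP) and structure answers to a data store."""
    if purpose == "OLAP":
        return "Azure Synapse"
    return "Azure SQL" if relational else "Cosmos DB"


# Answers from the learning management system scenario.
website = suggest_compute(control="some", always_on=True)
scheduled_job = suggest_compute(control="none", always_on=False)
everyday_data = suggest_storage(purpose="OLTP", relational=True)
learning_items = suggest_storage(purpose="OLTP", relational=False)
reporting = suggest_storage(purpose="OLAP", relational=True)
```

With the scenario's answers, the functions return the same services listed in A1 and A2.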


Azure is a beast of a product with hundreds of services. When you start learning Azure, it can be overwhelming to think about all the different parts and how they fit together. So, it is crucial to know the right tools to make the process as pain free as possible.

There are heaps of great tools out there with differing pricing models and learning styles.

YouTube - $0

YouTube is a great resource for those who love audio-visual learning. It is completely free and there are heaps of industry experts providing content. Some of the best examples are:

- John Savill

- Azure for Everyone (Adam Marczak)

- DevOps for Everyone (Microsoft)

- SSW TV

Microsoft Learn - $0 - Recommended ✅

Microsoft Learn is the best free tool out there. It provides hundreds of practical tutorials, heaps of video content and even lets you spin up little Azure sandboxes to try out Azure functionality. It is officially supported by Microsoft and so is one of the best ways to get ready for certifications.

Online Learning Platforms - $100 - 500 AUD

Online learning platforms provide high quality technical training from your browser. These courses include lectures, tutorials, exams and more so you can learn at your own pace.

Some of the options:

- Udemy - ~$100 AUD - Budget Option, no instructor vetting

- LinkedIn Learning - ~$300 AUD

- PluralSight - ~$500 AUD - Gold Standard ⭐

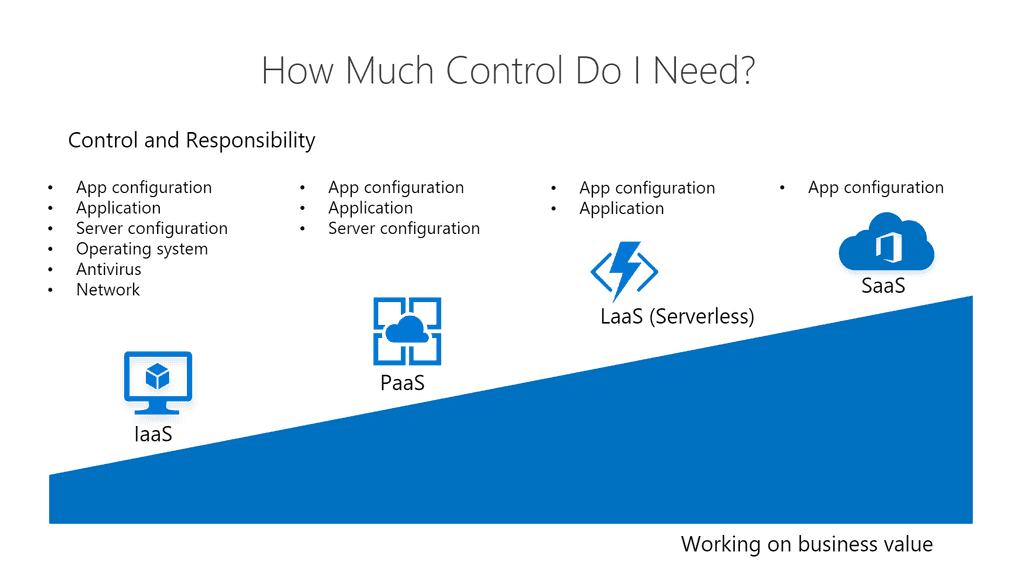

Whether you're an expert or just getting started, working towards gaining a new certification is a worthwhile investment.

Microsoft and GitHub certifications verify your skills and knowledge in a variety of technologies, instilling trust with clients and employers.

Microsoft Certifications

Microsoft provides numerous certifications and training options to help you learn new skills, fill technical knowledge gaps, and prove your competence.

Certifications are broken up into Fundamentals, Role-based (Intermediate & Expert), and Specialty. Check the Become Microsoft Certified poster for details of exams required for each of the certifications, or browse all certifications here.

Figure: Get the poster to see Microsoft's certifications

Focusing on Azure certifications, here are some good options:

Fundamentals

If you're just getting started, take a look at Microsoft Certified: Azure Fundamentals.

Earn this certification to prove you have a foundational knowledge of cloud services and how those services are provided with Microsoft Azure. You will need to pass the exam AZ-900: Microsoft Azure Fundamentals.

If AI is more your style, have a look at Microsoft Certified: Azure AI Fundamentals

Earn this certification to prove you have foundational knowledge of AI concepts related to the development of software and services of Microsoft Azure to create AI solutions. You will need to pass the exam AI-900: Microsoft Azure AI Fundamentals.

Associate

If you're already working with these technologies, you can skip the fundamentals and go for associate level certifications - see the role-based intermediate certifications in the poster above. Here are some examples:

For devs: Microsoft Certified: Azure Developer Associate

Earn this certification to prove your subject matter expertise in designing, building, testing, and maintaining cloud applications and services on Microsoft Azure. You will need to pass the exam AZ-204: Developing Solutions for Microsoft Azure.

For SysAdmins: Microsoft Certified: Azure Administrator Associate

Earn this certification to prove you understand how to implement, manage and monitor an organization's Azure environment. You will need to pass the exam AZ-104: Microsoft Azure Administrator.

Expert

Eventually, all rock star developers and solution architects should set their sights on expert exams.

Microsoft Certified: Azure Solutions Architect Expert

Earn this certification to prove your subject matter expertise in designing and implementing solutions that run on Microsoft Azure, including aspects like compute, network, storage, and security. Candidates should have intermediate-level skills for administering Azure. You will need to pass the exam AZ-305: Designing Microsoft Azure Infrastructure Solutions, with a prerequisite of AZ-104 (see above).

Microsoft Certified: DevOps Engineer Expert

Earn this certification to prove your subject matter expertise working with people, processes, and technologies to continuously deliver business value. You will need to pass the exam AZ-400: Designing and Implementing Microsoft DevOps Solutions, with a prerequisite of either AZ-104 or AZ-204 (see above).

GitHub Certifications

GitHub also have a number of certifications to help you master aspects of the platform - see the certifications here.

Figure: Current GitHub certifications

Foundations

If you're just starting out in GitHub, go for the GitHub Foundations exam. This exam measures entry level skills with GitHub basics like repositories, commits, branching, Markdown, and project management.

All Other Exams

All other exams are useful, depending on your focus/interest in GitHub.

For a DevOps focus, try the GitHub Actions exam. This exam measures your ability to accomplish the following technical tasks: author and maintain workflows; consume workflows; author and maintain actions; manage GitHub Actions for the enterprise.

If AI is your thing, check out the GitHub Copilot exam. This exam has a heavy emphasis on understanding GitHub Copilot plans and features, how the AI tool uses data, developer use cases, and privacy fundamentals and context exclusions.

Preparing for exams can involve a lot of work, and in some cases stress and anxiety. But remember, you're not in school anymore! You've chosen to take this exam, and no one is forcing you. So just sit back and enjoy the journey - you should feel excited by the new skills you will soon learn. If you want some great advice and tips, be sure to check out Successfully Passing Microsoft Exams by @JasonTaylorDev.

Good luck!

To help you out, here is a list of the top 9 Azure services you should be using:

- Computing: App Services

- Best practices: DevOps Project

- Data management: Azure Cosmos DB (formerly known as Document DB)

- Security: Azure AD (Active Directory)

- Web: API Management

- Automation: Logic Apps

- Automation: Cognitive Services

- Automation: Bots

- Storage: Containers

Watch the video

More details on Adam's Blog - The 9 knights of Azure: services to get you started

The goal of a modern complex software project is to build software with the best software architecture and great cloud architecture. Software developers should be focusing on good code and good software architecture. Azure and AWS are big beasts, so cloud architecture should be a specialist responsibility.

Many projects, for budget reasons, have the lead developer making cloud choices. This runs the risk of choosing the wrong services and baking in bad architecture. The associated code is hard and expensive to change, and the monthly bill can be higher than needed.

The focus must be to build solid foundations and a rock-solid API. The reality is that even 1 day of a Cloud Architect at the beginning of a project can save $100K later on.

2 strong developers (say Solution Architect and Software Developer)

No Cloud Architect

No SpendOps

Figure: Bad example of a team for a new project

2 strong developers (say Solution Architect and Software Developer)

+ 1 Cloud Architect (say 1 day per week, 1 day per fortnight, or even 1 day per month) who, after choosing the correct services, looks after the 3 horsemen:

- Load/Performance Testing

- Security choices

- SpendOps

Figure: Good example of a team for a new project

Problems that can happen without a Cloud Architect:

- Wrong tech chosen e.g. nobody wants to build something and then need to throw it away

- Wrong DevOps e.g. using plain old ARM templates that are not easy to maintain

- Wrong Data story e.g. defaulting to SQL Server, rather than investigating other data options

- Wrong Compute model e.g. Choosing a fixed price, always-on, slow scaling WebAPI for sites that have unpredictable and large bursts of traffic

- Security e.g. this word should be enough

- Load/Performance e.g. not getting the performance to $ spend ratio right

Finally, at the end of a project, you should go through a "Go-Live Audit". The Cloud Architect should review and sign off that the project is good to go. They mostly check the 3 horsemen (load, security, and cost).

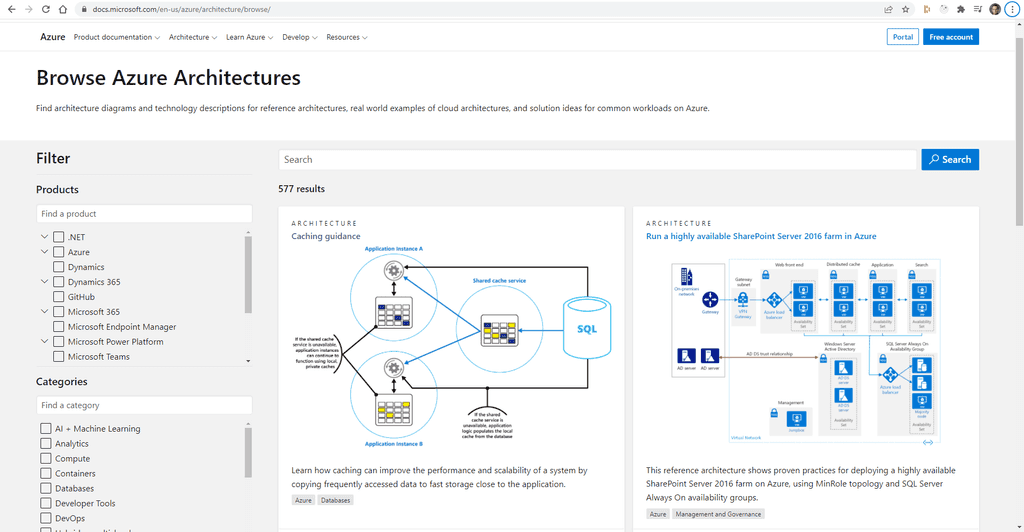

In a Specification Review you should include an architecture diagram so the client has a visual idea of the plan. There are lots of tools to help build out an architecture diagram, but the best one is Azure Architecture Center.

It is a one stop shop for all things Azure Architecture. It has a library of reference implementations to get you started, plus lots of information on best practices, from the big decisions you need to make down to the little details that can make a huge difference to how your application behaves.

Video: Discovering the Azure Architecture Center | Azure Tips and Tricks (2 mins)

Reference Architectures

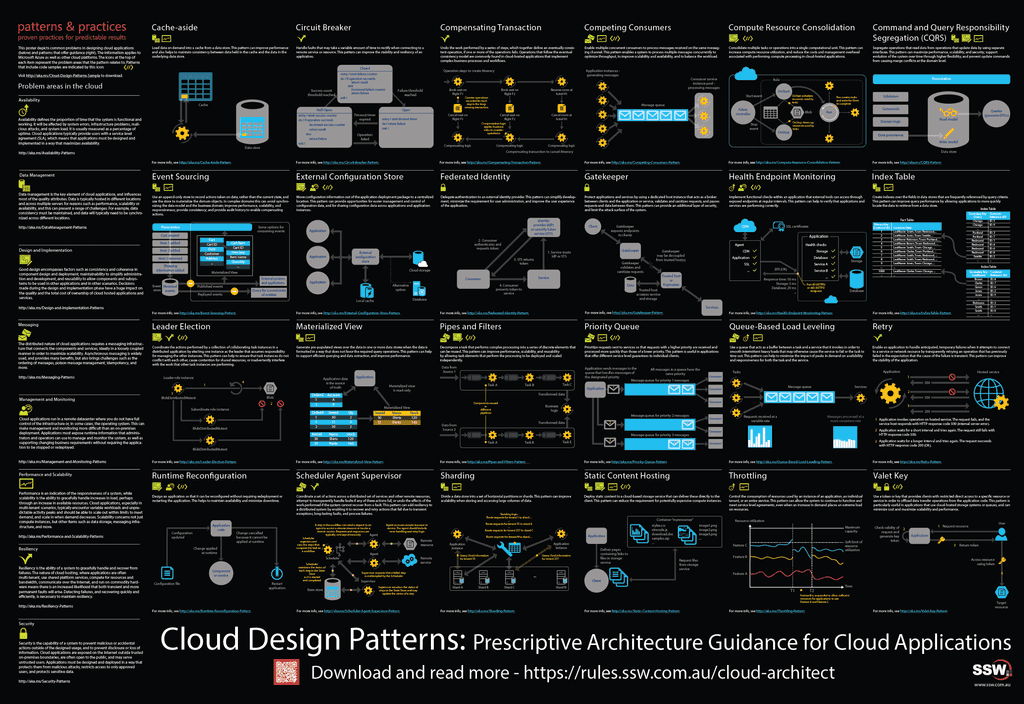

Figure: Use Browse Architectures to find a reference architecture that matches your application

The architectures presented fit into 2 broad categories:

- Complete end to end architectures. These architectures cover the full deployment of an application.

- Architectures of a particular feature. These architectures explain how to incorporate a particular element into your architecture. The Caching example above explains how you might add caching into your application to improve performance.

Each architecture comes with comprehensive documentation providing all the information you need to build and deploy the solution.

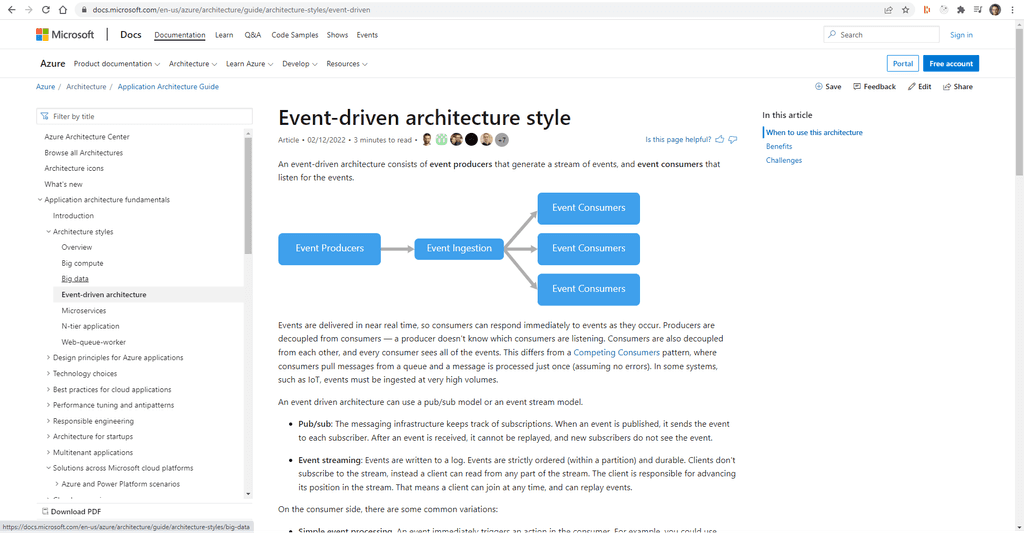

Best Practices

Figure: Use Explore Best Practices to find information on a particular best practice

The Best Practices section is a very broad set of documentation, covering everything from performance tuning through to designing for resiliency, as well as some of the more common types of applications and their requirements. Because of this, there is almost always something useful, no matter what stage your application is at. Many teams add a Sprint goal of looking at one best practice per Sprint or at regular intervals. The Product Owner would then help prioritise which areas should be focussed on first.

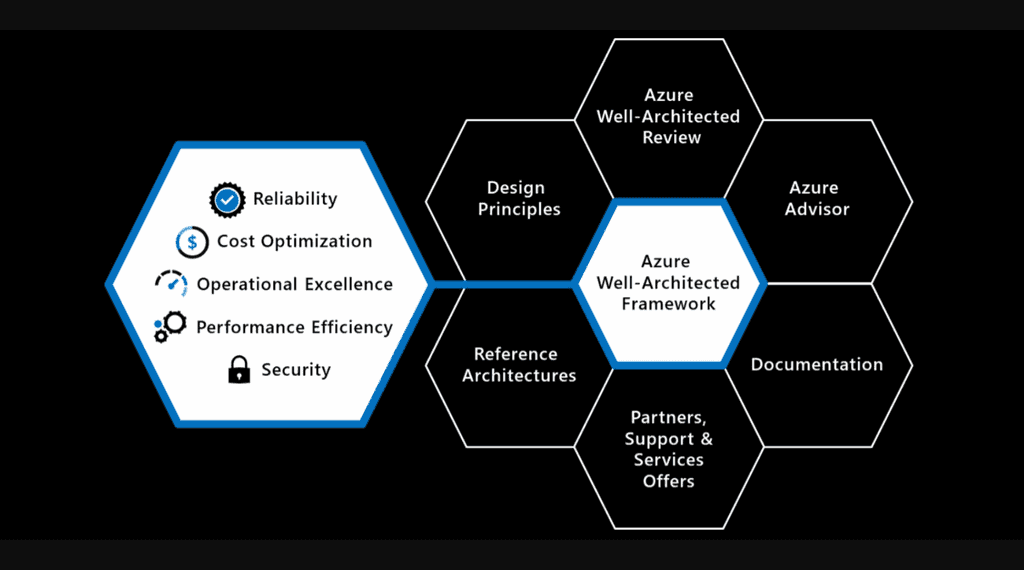

The Well-Architected Framework is a set of best practices which form a repeatable process for designing solution architecture, to help identify potential issues and optimize workloads.

Figure: The Well-Architected Framework includes the five pillars of architectural excellence. Surrounding the Well-Architected Framework are six supporting elements

The 5 Pillars

- Reliability – Handling and recovering from failures https://docs.microsoft.com/en-us/azure/architecture/framework/resiliency/principles

- Cost Optimization – Minimizing costs without impacting workload performance https://docs.microsoft.com/en-us/azure/architecture/framework/cost/principles

- Performance Efficiency (Scalability) – Testing, monitoring and adapting to changes in load e.g. new product launch, Black Friday sale, etc. https://docs.microsoft.com/en-us/azure/architecture/framework/scalability/principles

- Security – Protecting from threats and bad actors https://docs.microsoft.com/en-us/azure/architecture/framework/security/security-principles

- Operational Excellence (DevOps) – Deploying and managing workloads once deployed https://docs.microsoft.com/en-us/azure/architecture/framework/devops/principles

Trade-offs

There are trade-offs to be made between these pillars. E.g. improving reliability by adding Azure regions and backup points will increase the cost.

Why use it?

Thinking about architecting workloads can be hard – you need to think about many different issues and trade-offs, with varying contexts between them. WAF gives you a consistent process for approaching this to make sure nothing gets missed and all the variables are considered.

Just like Agile, this is intended to be applied for continuous improvement throughout development and not just an initial step when starting a new project. It is less about architecting the perfect workload and more about maintaining a well-architected state and an understanding of optimizations that could be implemented.

What to do next?

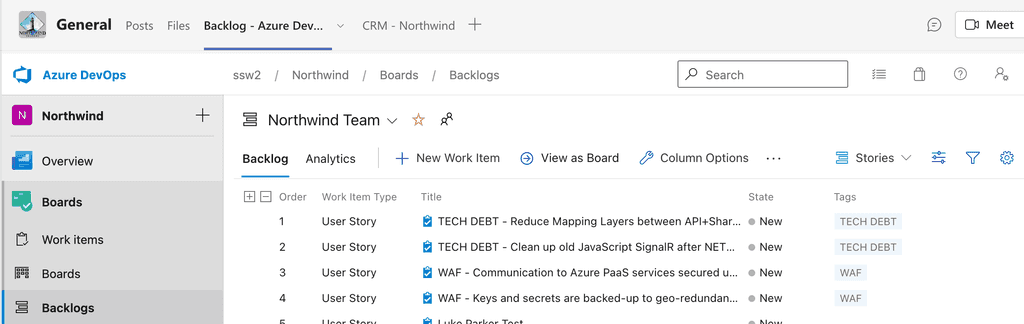

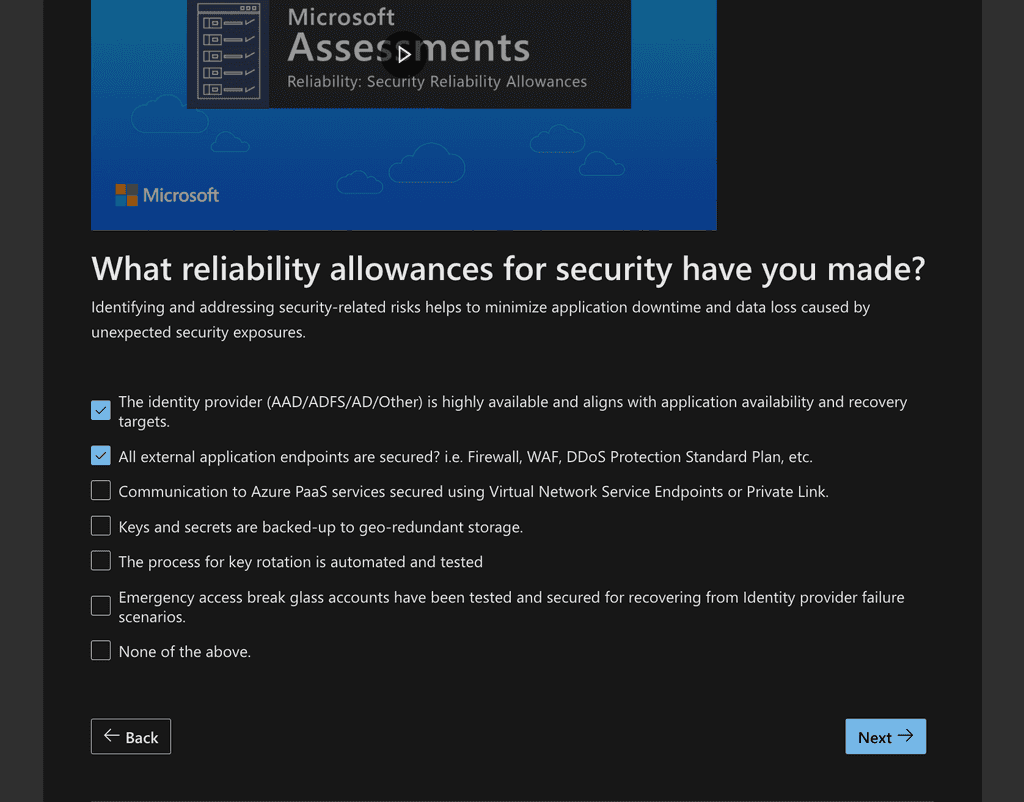

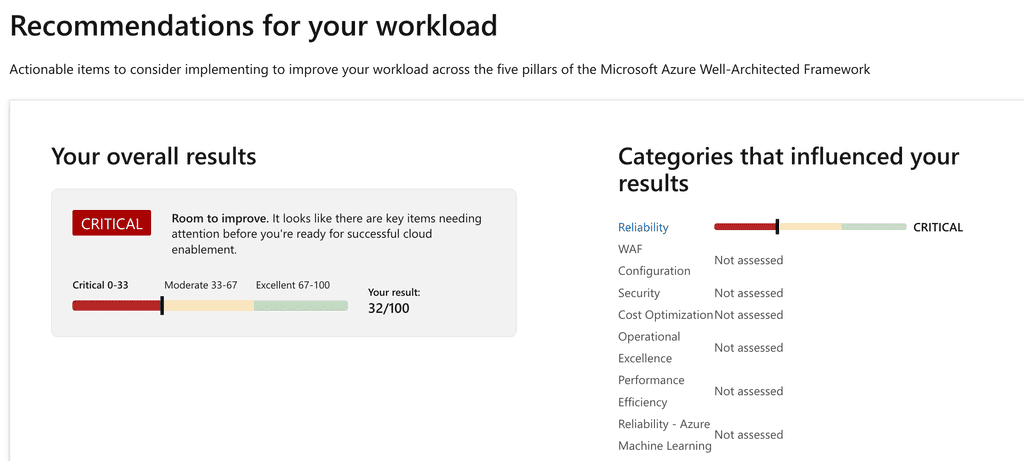

Assess your workload against the 5 Pillars of WAF with the Microsoft Azure Well-Architected Review and add any recommendations from the assessment results to your backlog.

Figure: Some recommendations will be checked, others go to the backlog so the Product Owner can prioritize

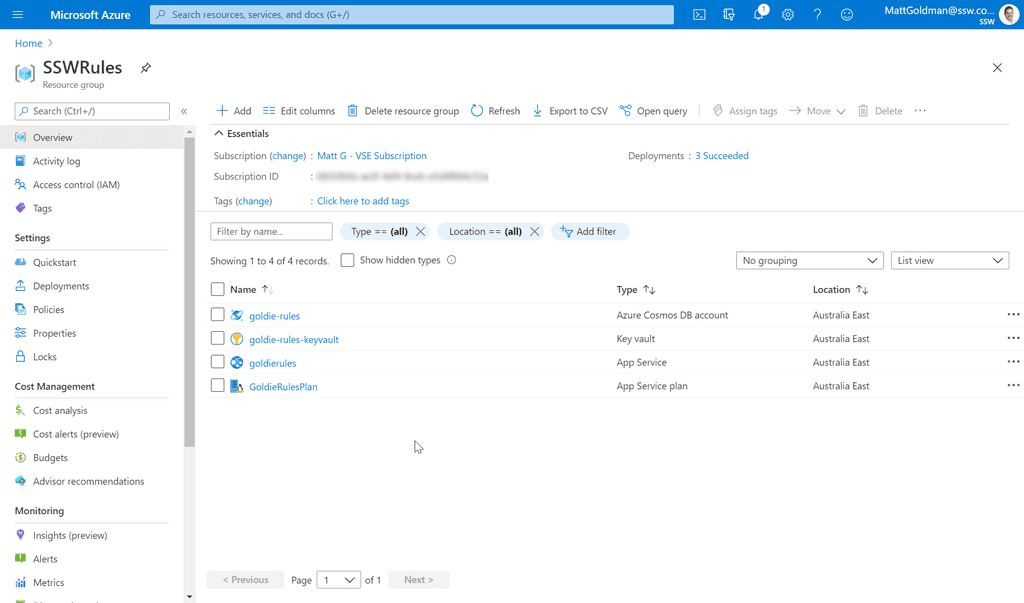

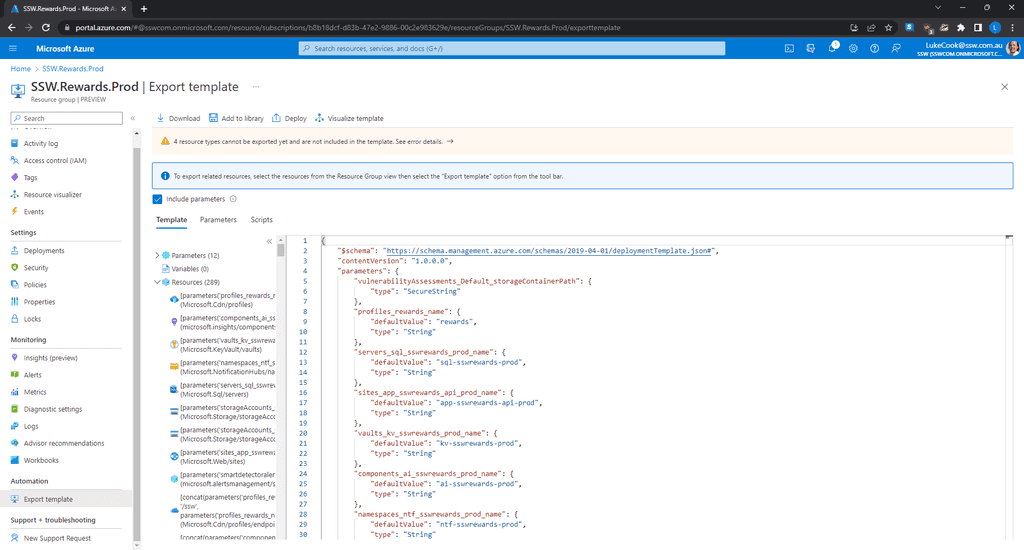

Figure: Recommended actions results show things to be improved

Looking at a long list of Azure resources is not the best way to be introduced to a new project. It is much better to visualize your resources.

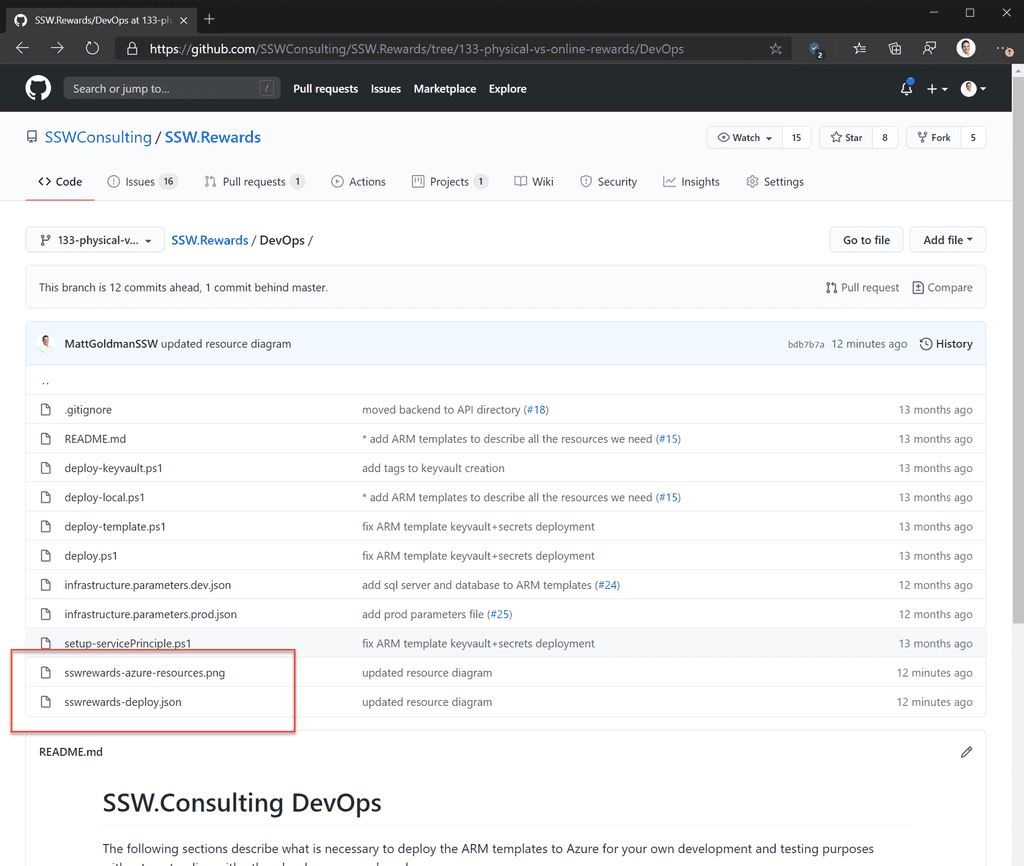

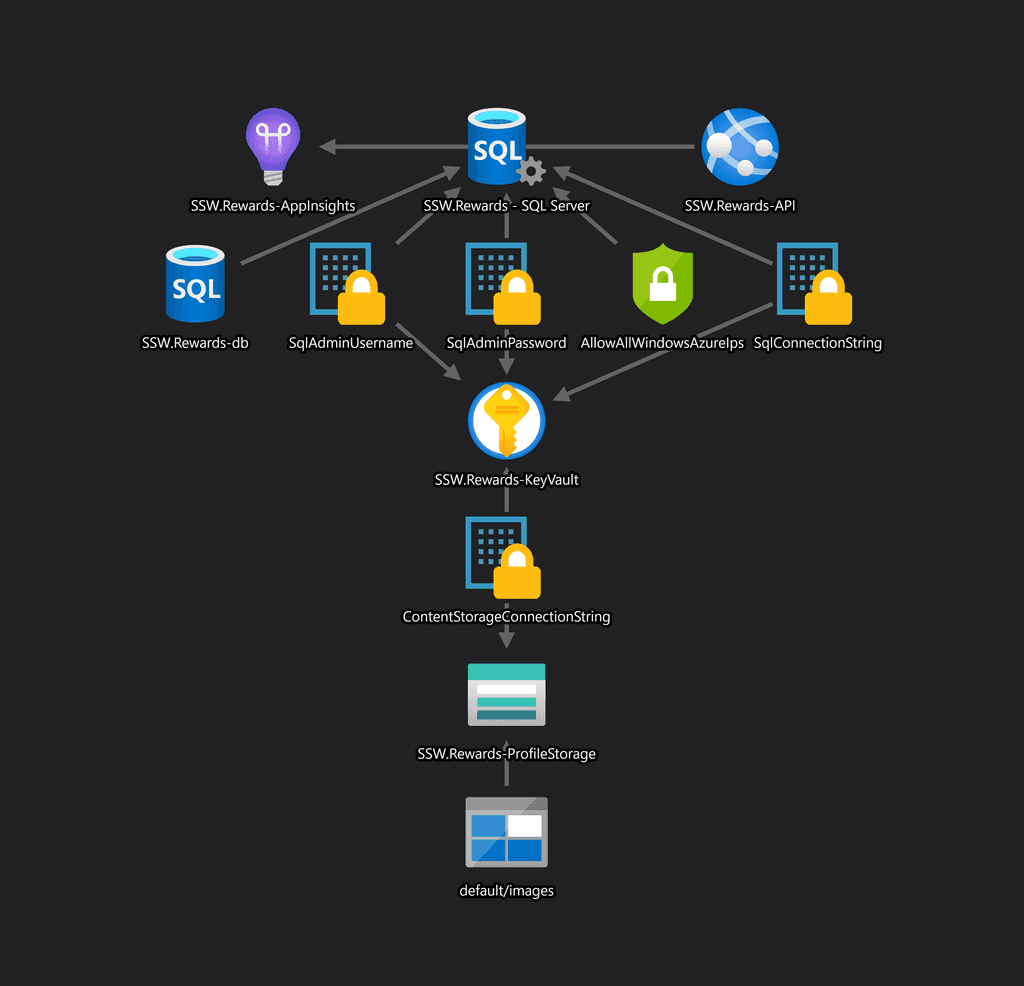

You need an architecture diagram, but this is often high level, just outlining the most critical components from the 50,000ft view, often abstracted into logical functions or groups. So, once you have your architecture diagram, the next step is to create your Azure resources diagram.

Video: Azure resource diagrams in Visual Studio Code - Check out this awesome extension! (6 min)

Option A: Just viewing a list of resources in the Azure portal

Note: When there are a lot of resources this doesn't work.

Option B: Visually viewing the resources

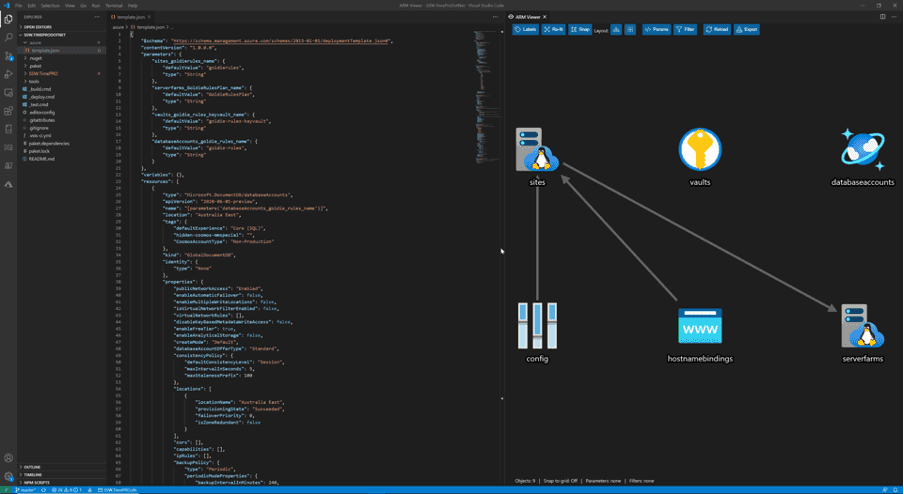

Figure: Good Example - ARM template and automatically generated Azure resources diagram in the SSW Rewards repository on GitHub

Figure: Good Example - The Azure resources diagram generated by the ARM Template Viewer extension for SSW Rewards

Install ARM Template Viewer from the Visual Studio Marketplace.

Note: Microsoft has a download link for all the Azure icons as SVGs.

Suggestion to Microsoft: Add an auto-generated diagram in the Azure portal. Have an option in the combo box (in addition to List View) for Diagram View.

Update: This is now happening.

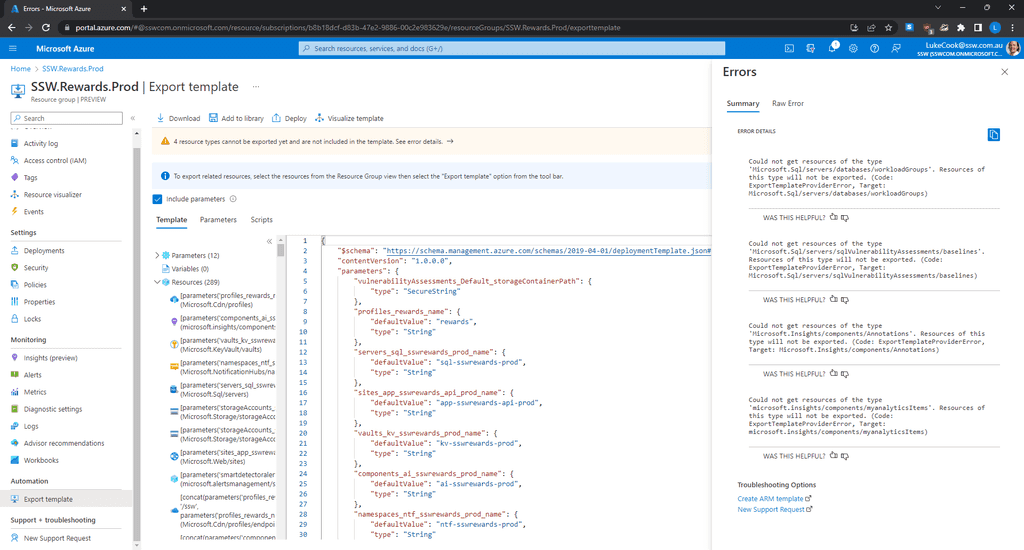

BIG NEWS! My ARM visualiser is now a native part of the Azure portal 😁 The engineering team did most of the hard work, but I'm chuffed to bits. It's currently in the special RC portal, please try it out (in the 'export template' view) https://t.co/iEvAhxxGRK & provide feedback! pic.twitter.com/4KTu7GGOeQ

Tweet by Ben Coleman (@BenCodeGeek), April 9, 2020

Scrum Warning: Like the architecture diagram, this is technical debt as it needs to be kept up to date each Sprint. However, unlike the architecture diagram, this one is much easier to maintain as it can be refreshed with a click. You could reduce this technical debt by automatically saving the .png to the same folder as your architecture diagram. It is easy to do this by using Azure Event Grid and Azure Functions to generate these for you when you make changes to your resources.

Building cloud-native applications can be challenging due to their complexity and the need for scalability, resilience, and manageability. There are lots of ways to build cloud-native applications and the overwhelming number of choices can make it difficult to know where to start.

Figure: Too many lego pieces - Where do you start?

Also, the complexity of modern cloud-native applications can make them difficult to manage and maintain.

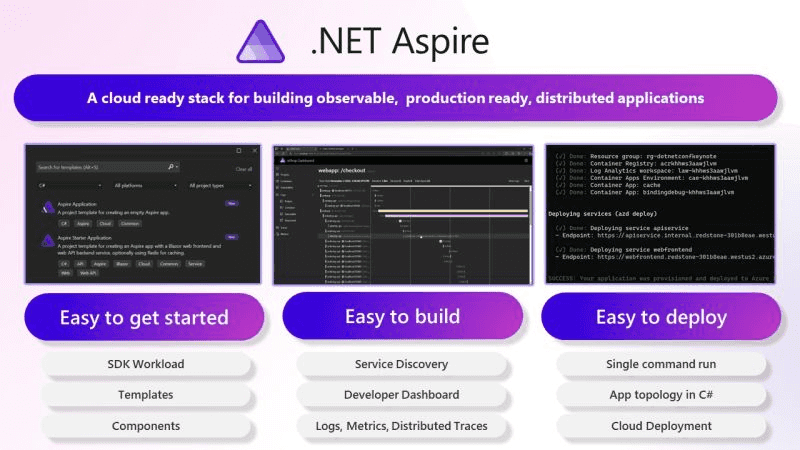

.NET Aspire is a powerful set of tools, templates, and integrations designed to streamline cloud-native app development with .NET. It offers a consistent approach to orchestration, standardized integrations, and developer-friendly tooling to help you build robust, production-ready applications.

Video: Cloud Native Aspirations with .NET Aspire | Matt Wicks & Rob Pearson | SSW User Groups (1:28 hr)

Figure: Aspire makes common time-consuming developer chores a breeze.

.NET Aspire addresses common pain points in cloud-native development:

Orchestration

.NET Aspire helps you manage interconnected services and resources in your application by:

- Automatically setting up service discovery and environment variables.

- Providing tools to spin up local containers and configure dependencies.

- Simplifying complex setups with clear abstractions, reducing the need for low-level configuration.

Integrations

.NET Aspire integrations make it easy to connect to essential services:

- NuGet packages with methods like `AddRedis` or `AddAzureServiceBusClient` streamline configuration and health checks.

- Standardized interfaces reduce boilerplate code and ensure seamless connectivity.

Tooling and Templates

Leverage predefined templates and tooling to:

- Generate projects with common configurations like health checks and telemetry.

- Save time with opinionated defaults for service discovery, logging, and monitoring.

- Kickstart new projects or integrate Aspire into existing .NET apps.

Example - Adding Redis Cache to a .NET Core app

The old way - with Docker Compose

- Manually set up a Redis container (e.g., using Docker Compose).

```yaml
version: '3.9'
services:
  redis:
    image: redis:latest
    container_name: redis-cache
    ports:
      - "6379:6379"
    environment:
      REDIS_PASSWORD: examplepassword # Optional, for enabling authentication
    command: ["redis-server", "--requirepass", "examplepassword"] # Optional, for setting up a password
    volumes:
      - redis_data:/data # Persist data locally
volumes:
  redis_data:
    driver: local
```

- Write custom code to handle connection strings and inject them into your application (this needs to be manually kept in sync with all your environments)

```csharp
var redisConnection = Environment.GetEnvironmentVariable("REDIS_CONNECTION_STRING") ?? "localhost:6379";

services.AddStackExchangeRedisCache(options =>
{
    options.Configuration = redisConnection;
    options.InstanceName = "SampleInstance";
});
```

Figure: Bad Example - Manually setting up Redis cache 🥱

The new way - with .NET Aspire

Aspire handles Redis setup and connection string injection for you:

- Configure your Aspire application and pass a reference to Redis Cache

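```csharp
var builder = DistributedApplication.CreateBuilder(args);

// Declare a Redis resource named "cache"
var cache = builder.AddRedis("cache");

// Reference it from the app and wait for it to be ready before starting
builder.AddProject<Projects.MyApp>("app")
    .WithReference(cache)
    .WaitFor(cache);

builder.Build().Run();
```

- Add the client integration for Redis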

```csharp
public static class DependencyInjection
{
    public static void AddInfrastructure(this IHostApplicationBuilder builder)
    {
        // The connection name must match the resource name passed to AddRedis in the AppHost ("cache")
        builder.AddRedisClient("cache");
    }
}
```

Figure: Good Example - Simple Redis setup with .NET Aspire 🚀

- No need to write Docker Compose files.

- No need for yaml 🤮.

- Connection string is automatically injected.

Get Started with Aspire

Check out Microsoft's docs

https://learn.microsoft.com/en-us/dotnet/aspire/get-started/aspire-overview

You can test out Aspire by running the SSW.CleanArchitecture template - Let us know what you think!

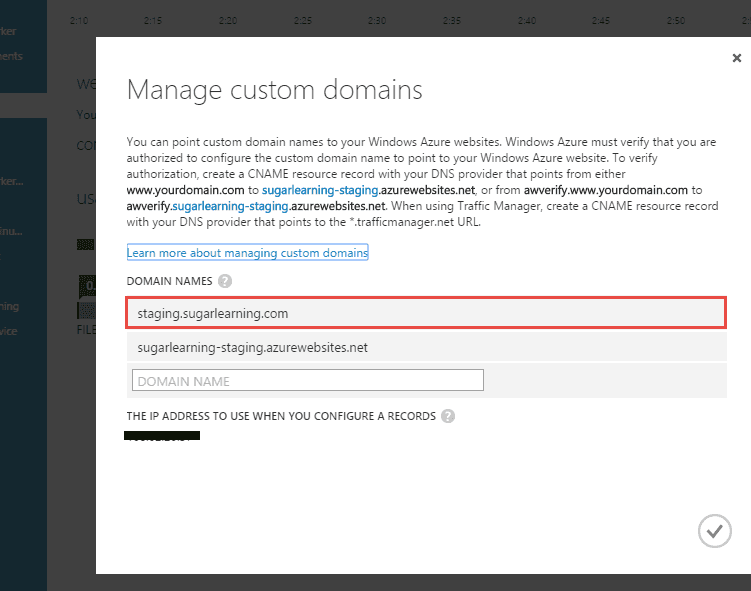

https://github.com/SSWConsulting/SSW.CleanArchitecture?tab=readme-ov-file#installing-the-template

If you use the default Azure staging website URL, it can be difficult to remember, and looking up the name every time you access it is a waste of time. Follow this rule to increase your productivity and make it easier for everyone to access your staging site.

Default Azure URL: sugarlearning-staging.azurewebsites.net

Figure: Bad example - Site using the default URL (hard to remember!!)

Customized URL: staging.sugarlearning.com

Figure: Good example - Staging URL with "staging." prefix

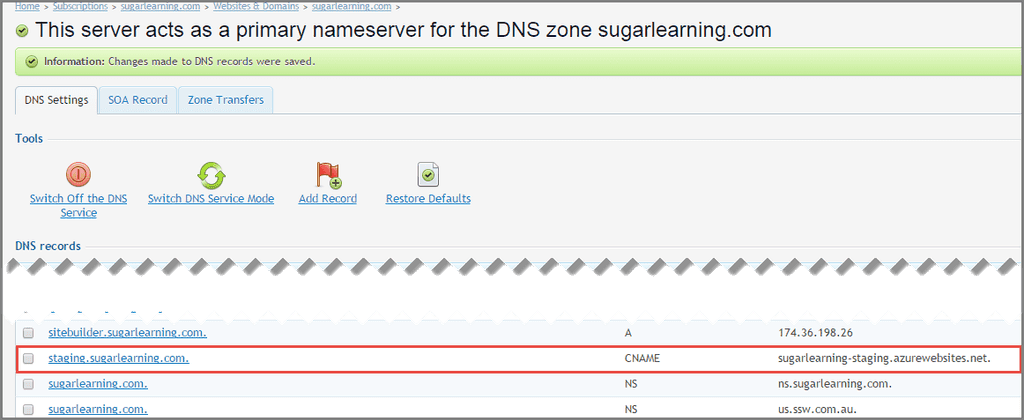

How to set up a custom URL

- Add a CName record pointing to the default URL in your DNS server

Figure: CName being added to DNS for the default URL

- Instruct Azure to accept the custom URL

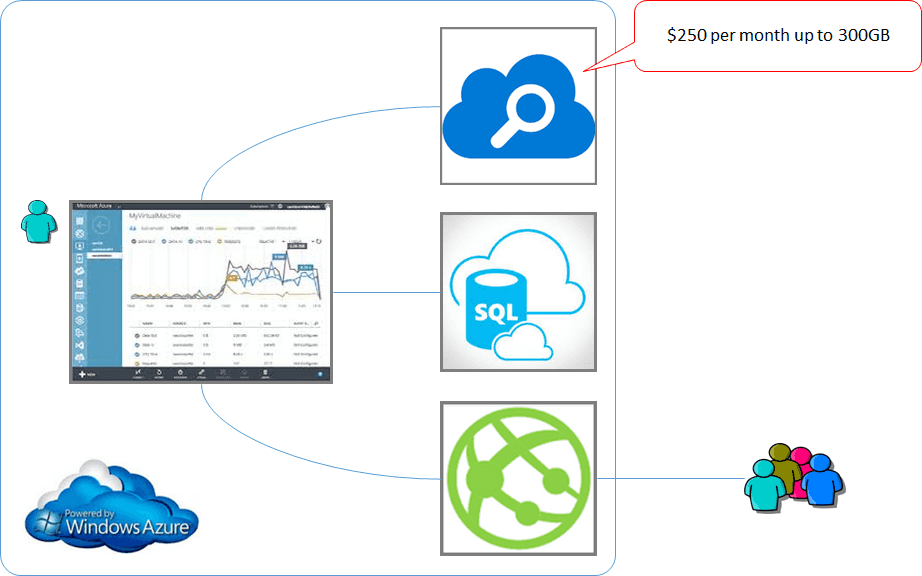

Figure: Azure being configured to accept the CName

AzureSearch is designed to work with Azure based data and runs on ElasticSearch. It doesn't expose all the advanced search features. You may be reluctant to choose it as your search engine because of the missing features and what seems to be an expensive monthly fee ($250 as of today). If this is the case, follow this rule:

Consider AzureSearch if your website:

- Uses SQL Azure (or other Azure based data such as DocumentDB), and

- Does not require advanced search features

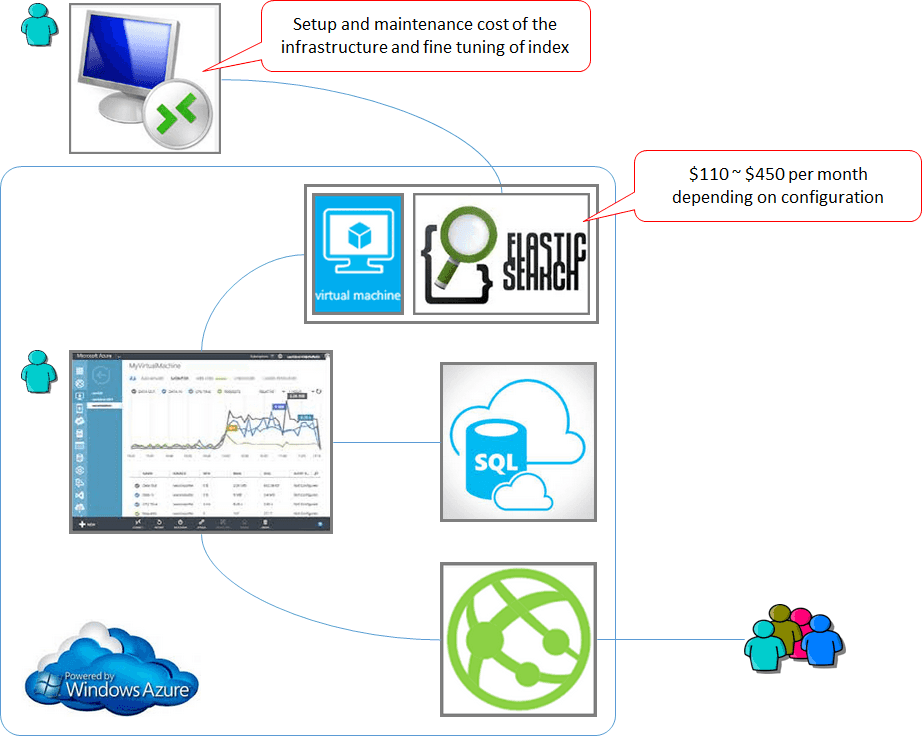

Consider ElasticSearch if your website:

- Requires advanced search features that aren't supported by AzureSearch

Keep in mind that:

- Hosting of a full-text search service costs you labour to set up and maintain the infrastructure, and

- A single Azure VM can cost you up to $450. So do not drop the AzureSearch option unless the missing features are absolutely necessary for your site
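As a rough illustration of the comparison above, this sketch adds labour to the VM cost of a self-hosted search service. The $250 and $450 figures come from this rule; the labour hours and hourly rate are hypothetical values chosen only to show the arithmetic.

```python
def self_hosted_monthly_cost(vm_cost: float, labour_hours: float, hourly_rate: float) -> float:
    """Monthly cost of running your own search VM, including upkeep labour."""
    return vm_cost + labour_hours * hourly_rate


AZURE_SEARCH_MONTHLY = 250.0  # monthly fee quoted in this rule
VM_MONTHLY = 450.0            # upper-end VM cost quoted in this rule
LABOUR_HOURS = 4.0            # hypothetical upkeep hours per month
HOURLY_RATE = 100.0           # hypothetical hourly rate

self_hosted = self_hosted_monthly_cost(VM_MONTHLY, LABOUR_HOURS, HOURLY_RATE)
extra_cost = self_hosted - AZURE_SEARCH_MONTHLY
```

With these assumed labour figures, self-hosting costs several hundred dollars more per month, which is the gap the missing features would need to justify.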

We've been down this road before where developers had to be taught not to manually create databases and tables. Now, in the cloud world, we're saying the same thing again: Don't manually create Azure resources.

Manually Creating Resources

This is the most common and the worst. This is bad because it requires manual effort to reproduce and leaves margin for human error. Manually provisioning resources can also lead to configuration drift, which is to say that over time it can be difficult to keep track of which deployment configurations were made and why.

- Create resources in Azure and not save a script

Figure (animated gif): Bad example - Creating resources manually

Manually creating and saving the script

Some people half solve the problem by manually creating and saving the script. This is also bad because it’s like eating ice cream and brushing your teeth – it doesn’t solve the health problem.

Figure: Warning - The templates are crazy verbose. They often don't work and need to be manually tweaked

Tip: Save infrastructure scripts/templates in a folder called 'infra'.

So if you aren't manually creating your Azure resources, what options do you have?

Option A: Farmer

Farmer - Making repeatable Azure deployments easy!

- IaC using F# as a strongly typed DSL

- Generates ARM templates from F#

- Add a very short and readable F# project in your solution

- Tip: The F# solution of scripts should be in a folder called Azure

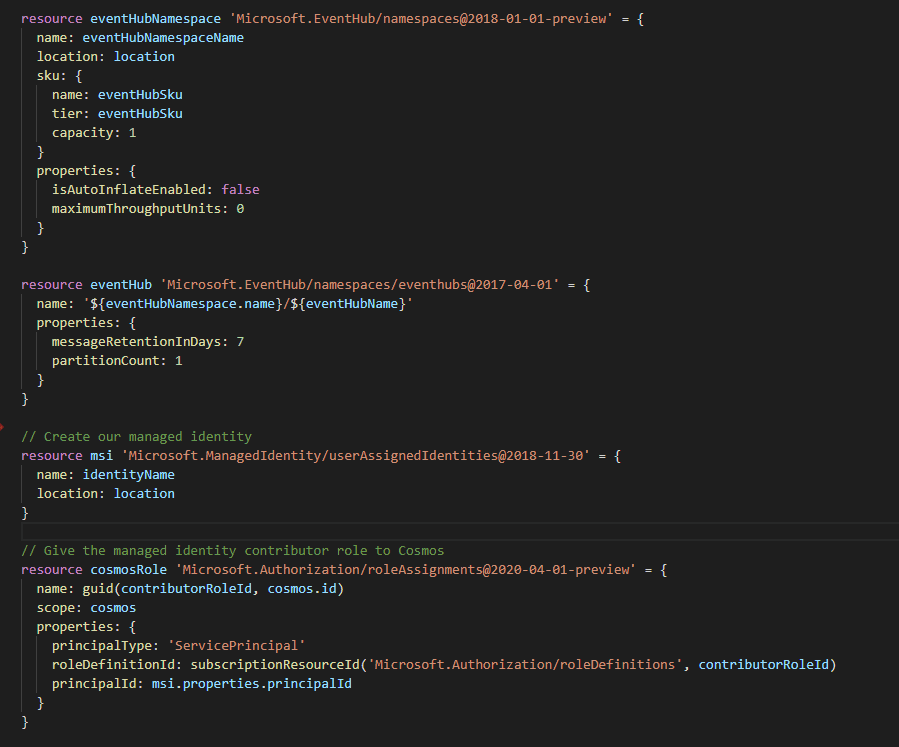

Option B: Bicep by Microsoft (recommended)

Bicep - a declarative language for describing and deploying Azure resources

- Is free and fully supported by Microsoft

- Has 'az' command line integration

- Awesome extension for VS Code to author ARM Bicep files ⭐️

- Under the covers - Compiles into an ARM JSON template for deployment

- Improves the repeatability of your deployment process, which can come in handy when you want to stage your deployment configuration

- Much simpler syntax than ARM JSON

- Handles resource dependencies automatically

- Private Module Registries for publishing versioned and reusable architectures

- No need for deploy scripts! There's a bicep-deploy GitHub Action from Microsoft to make it easy to add deployments to your workflows

Tip: If you are creating a role assignment using Bicep, first check (using the Azure Portal) that it doesn't already exist.

Announcement info: Project Bicep – Next Generation ARM Templates

Example Bicep files: Fullstack Webapp made with Bicep

Figure: Good example - Author your own Bicep templates in Visual Studio Code using the Bicep Extension

Option C: Enterprise configuration management $$$

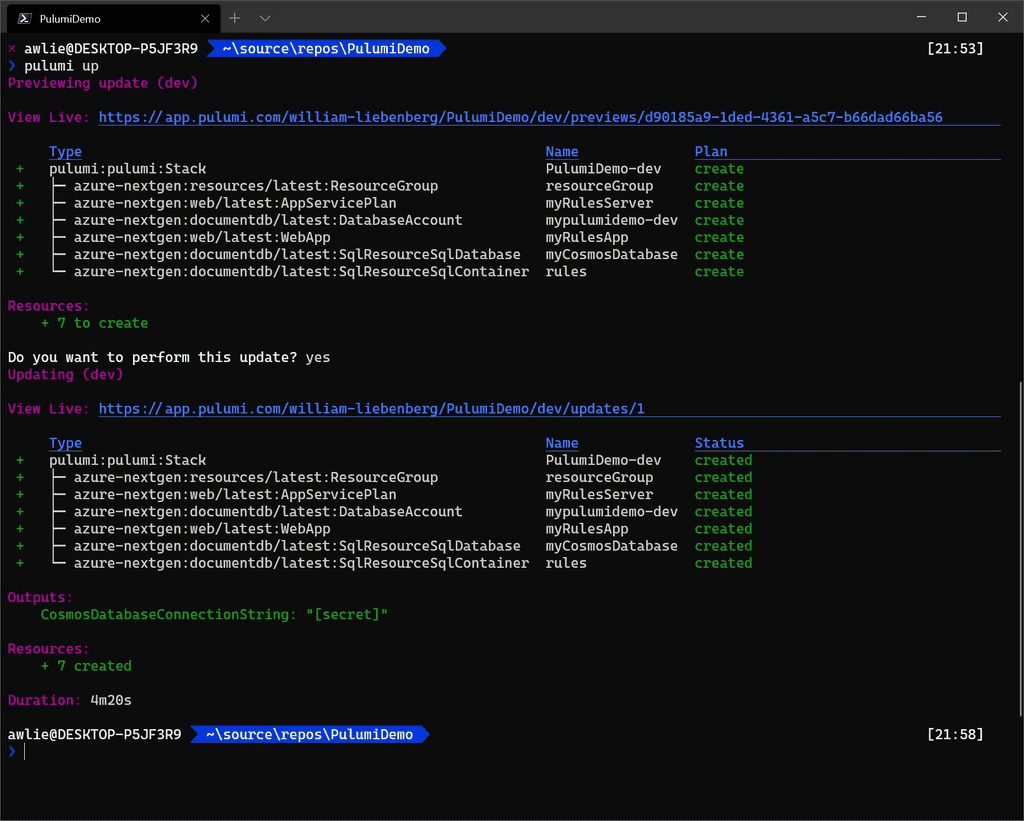

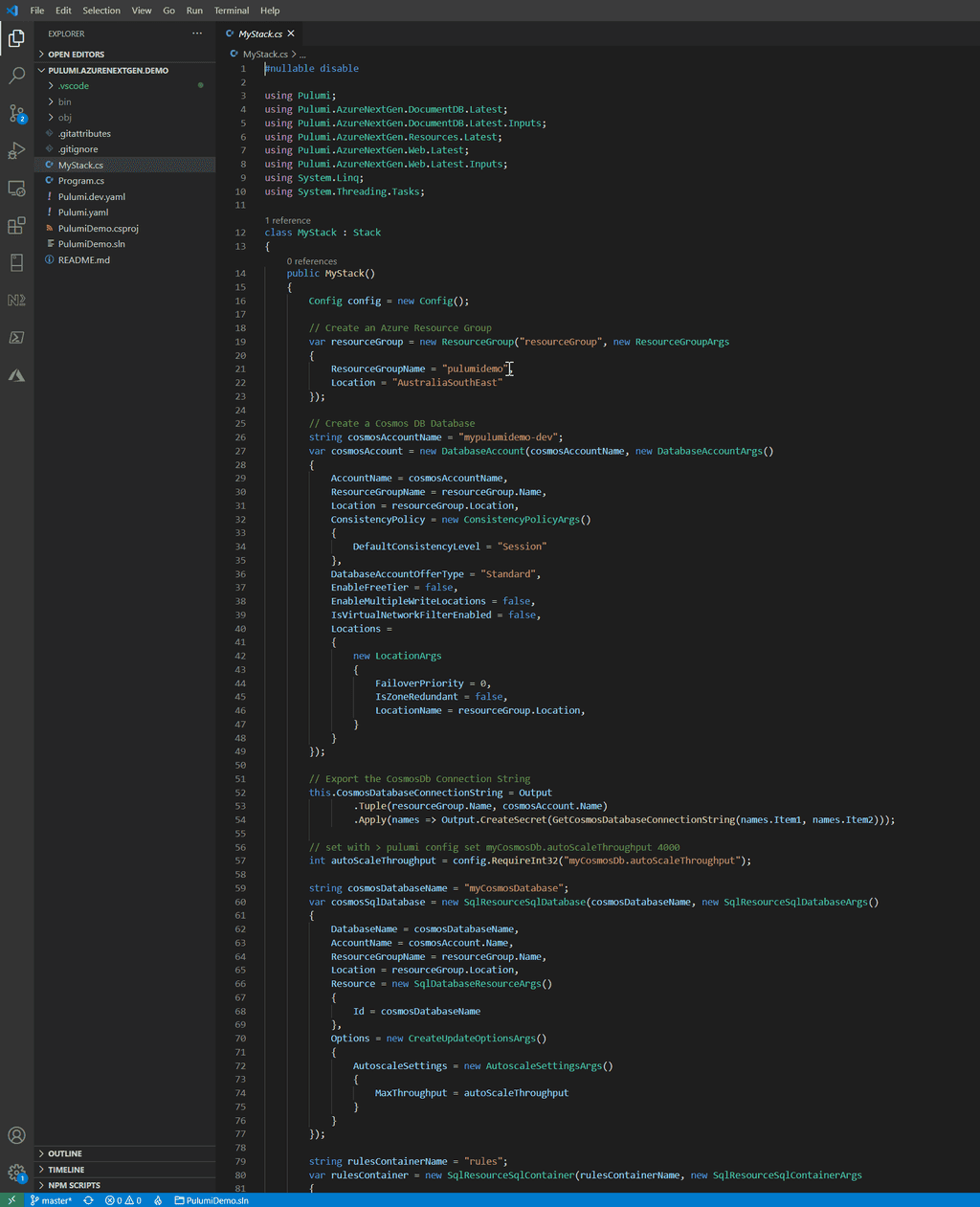

The other option when moving to an automated Infrastructure as Code (IaC) solution is to move to a paid provider like Pulumi or Terraform. These solutions are ideal if you are using multiple cloud providers or if you want to control the software installation as well as the infrastructure.

- Both tools are great and have free tiers available

- Paid tiers provide more benefits for larger teams and help manage larger infrastructure solutions

- Terraform uses HashiCorp Configuration Language (HCL)
  - like YAML but much more powerful
  - https://learn.hashicorp.com/tutorials/terraform/cdktf-install?in=terraform/cdktf

- Pulumi uses real code (C#, TypeScript, Go, and Python) as infrastructure rather than JSON/YAML

Figure: Good example - Code from the Pulumi Azure NextGen provider demo with Azure resources defined in C#

Tip: After you’ve made your changes, don’t forget to visualize your new resources.

User-defined data types in Bicep allow you to create custom data structures for better code organization and type safety. They enhance reusability, abstraction, and maintainability within projects.

When creating a cloud resource, numerous parameters are typically required for configuration and customization. Organizing and naming these parameters effectively is increasingly important.

```bicep
@allowed(['Basic', 'Standard'])
param skuName string = 'Basic'

@allowed([5, 10, 20, 50, 100])
param skuCapacity int = 5

param skuSizeInGB int = 2
```

Bad example - Relying on parameter prefixes and order leads to unclear code, high complexity, and increased maintenance effort

```bicep
param sku object
```

Bad example - When declaring a parameter as an untyped object, Bicep cannot validate the object's properties and values at compile time, risking runtime errors.

```bicep
// User-defined data type
type skuConfig = {
  name: 'Basic' | 'Standard'
  capacity: 5 | 10 | 20 | 50 | 100
  sizeInGB: int
}

param sku skuConfig = {
  name: 'Basic'
  capacity: 5
  sizeInGB: 2
}
```

Good example - User-defined data type provides type safety, enhanced readability and easier maintenance

Video: Hear from Luke Cook about how organizing your cloud assets starts with good names and consistency!

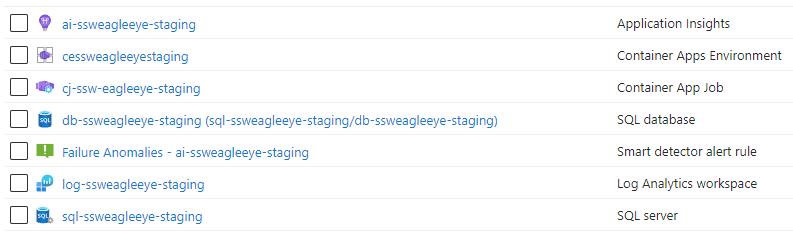

Organizing your cloud assets starts with good names. It is best to be consistent:

- Use all lower case

- Use kebab case ("-" as a separator)

- Include a resource type abbreviation (so it's easy to find the resource in a script)

- Include which environment the resource is intended for i.e. dev, test, prod, etc.

- If applicable, include the intended use of the resource in the name e.g. an app service may have a suffix api

Azure defines some best practices for naming and tagging your resources.
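The naming guidelines above can be sketched as a small helper. This is an illustrative sketch only: the function name, the abbreviation table and the order of the name parts (type, product, environment, use) are assumptions, so substitute your team's own convention.

```python
# Hypothetical abbreviations for illustration; use your team's agreed set.
RESOURCE_ABBREVIATIONS = {
    "app service": "app",
    "sql database": "sqldb",
    "key vault": "kv",
}


def build_resource_name(product: str, resource_type: str, environment: str, use: str = "") -> str:
    """Build a lower-case, kebab-case name: <type>-<product>-<environment>[-<use>]."""
    parts = [RESOURCE_ABBREVIATIONS[resource_type], product, environment]
    if use:
        parts.append(use)
    # Lower-case every part and join with "-" (kebab case).
    return "-".join(part.strip().lower().replace(" ", "-") for part in parts)


api_name = build_resource_name("SugarLearning", "app service", "prod", use="api")
db_name = build_resource_name("SugarLearning", "sql database", "dev")
```

Here `api_name` is `app-sugarlearning-prod-api` and `db_name` is `sqldb-sugarlearning-dev`. Generating names from one function keeps every resource on the same pattern.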

Having inconsistent resource names across projects creates all sorts of pain

- Developers will struggle to find a project's resources and identify what those resources are being used for

- Developers won't know what to call new resources they need to create.

- You run the risk of creating duplicate resources... created because a developer has no idea that another developer created the same thing 6 months ago, under a different name, in a different Resource Group!

Keep your resources consistent

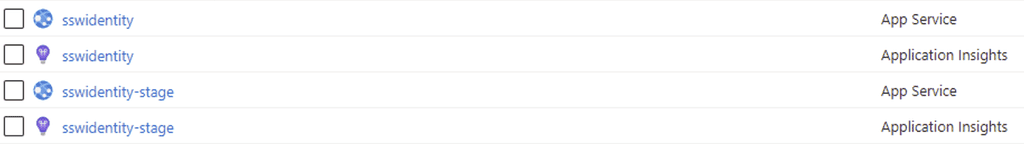

If you're looking for resources, it's much easier to have a pattern to search for. At a bare minimum, you should keep the name of the product in the resource name, so finding them in Azure is easy. One good option is to follow the "productname-environment" naming convention, and most importantly: keep it consistent!

Name your resources according to their environment

Resource names can impact things like resource addresses/URLs. It's always a good idea to name your resources according to their environment, even when they exist in different Subscriptions/Resource Groups.

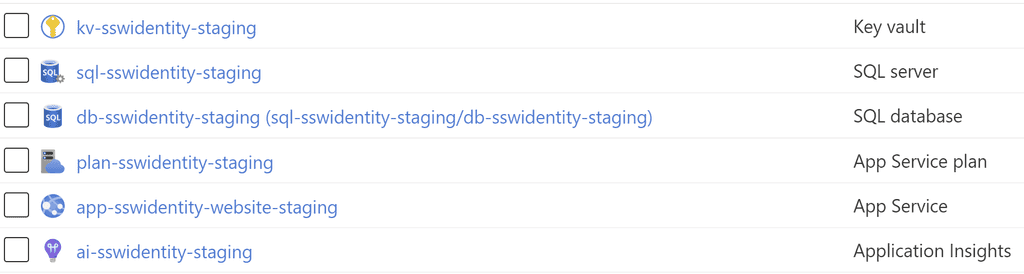

Good Example - Consistent names, using lowercase letters and specifying the environment. Easy to find, and easy to manage!

Plan for the exceptions

Some resources won't play nicely with your chosen naming convention (for instance, storage accounts do not accept kebab-case). Acknowledge these, and have a rule in place for how you will name these specific resources.
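One possible rule for the storage account exception is to derive the name from the kebab-case name by dropping the separators. The sketch below assumes that approach; the function name `storage_account_name` is hypothetical. It relies on the storage account naming rule of 3 to 24 characters, lower-case letters and digits only.

```python
import re


def storage_account_name(kebab_name):
    """Derive a storage account name from a kebab-case resource name.

    Storage account names must be 3-24 characters long and may contain
    only lower-case letters and digits.
    """
    name = re.sub(r"[^a-z0-9]", "", kebab_name.lower())
    if not 3 <= len(name) <= 24:
        raise ValueError(f"{name!r} must be 3-24 characters long")
    return name


print(storage_account_name("st-northwind-dev"))
```

Raising an error for names that are too long forces the team to decide on an abbreviation instead of silently truncating.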

Automate resource deployment

ClickOps can save your bacon when you quickly need to create a resource and need to GSD. But we are all human and humans make mistakes, so there will be times when someone creating resources via ClickOps fails to follow the team's standards for naming resources consistently.

Instead, it is better to provision your Azure Resources programmatically via Infrastructure as Code (IaC) using tools such as ARM, Bicep, Terraform and Pulumi. With IaC you can have naming conventions baked into the code and remove the thinking required when creating multiple resources. As a bonus, you can track any changes in your standards over time since (hopefully) your code is checked into a source control system such as Git (or GitHub, Azure Repos, etc.).

You can also use policies to enforce naming convention adherence. Making this part of your pipeline ensures robust naming conventions that remove developer confusion and lower cognitive load.

For more information, see our rule: Do you know how to create Azure resources?

Want more Azure tips? Check out our rule on Azure Resource Groups.

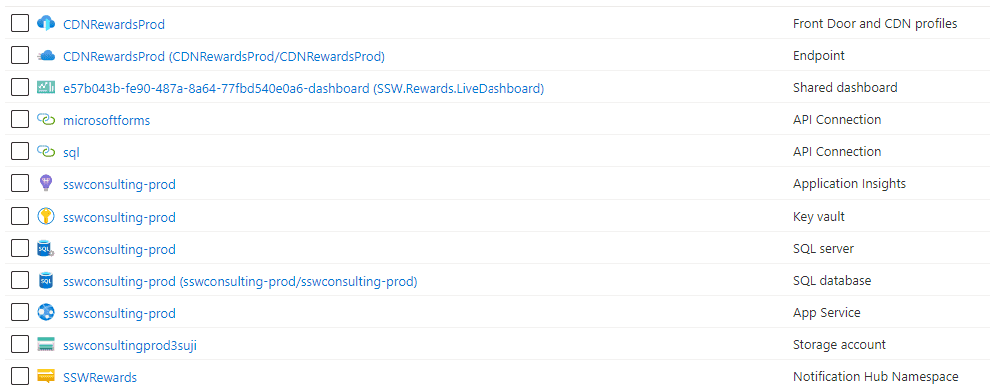

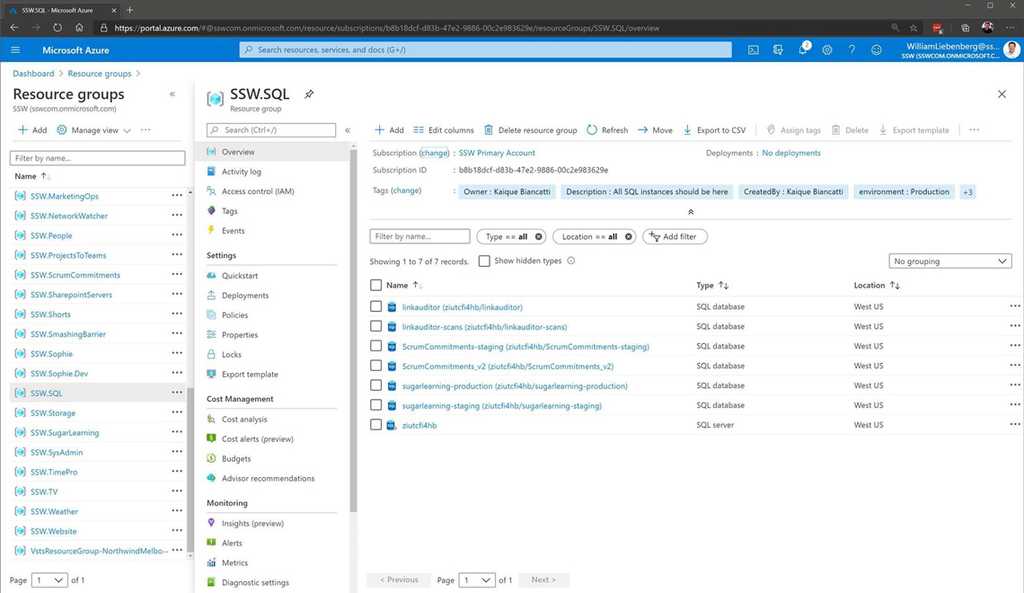

Naming your Resource Groups

Resource Groups should be logical containers for your products. They should be a one-stop shop where a developer or sysadmin can see all resources being used for a given product, within a given environment (dev/test/prod). Keep your Resource Group names consistent across your business, and have them identify exactly what's contained within them.

Name your Resource Groups as Product.Environment. For example:

- Northwind.Dev

- Northwind.Staging

- Northwind.Production

There are no cost benefits in consolidating Resource Groups, so use them! Have a Resource Group per product, per environment. And most importantly, be consistent in your naming convention.

Remember it's difficult to change a resource group name once everything is deployed without downtime.

Keep your resources in logical, consistent locations

You should keep all of a product's resources within the same Resource Group. Your developers can then find all associated resources quickly and easily, which helps minimize the risk of duplicate resources being created. It should be clear what resources are being used in the Dev environment vs. the Production environment, and Resource Groups are the best way to manage this.

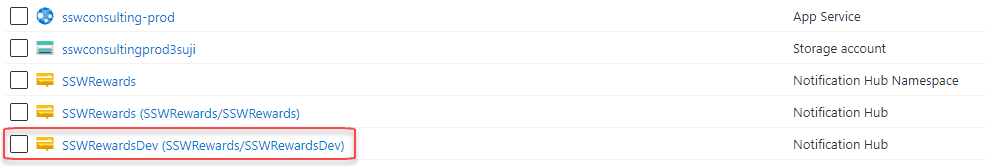

Don't mix environments

There's nothing worse than opening up a Resource Group and finding several instances of the same resources, with no idea what resources are in dev/staging/production. Similarly, if you find a single instance of a Notification Hub, how do you know if it's being built in the test environment, or a legacy resource needed in production?

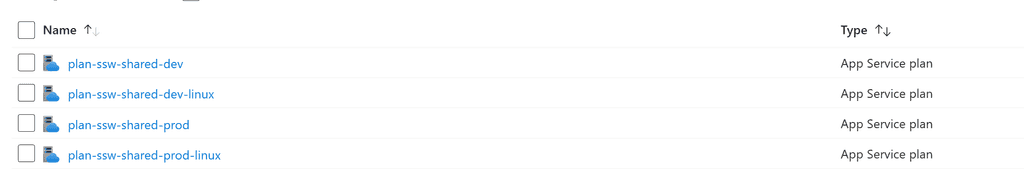

Don't categorize Resource Groups based on resource type

There is no inherent cost-saving benefit to grouping resources of the same type together unless they share underlying infrastructure. For example, consolidating all databases into a single SQL Server can reduce costs, as can hosting multiple apps under a single App Service Plan. However, arbitrarily placing all resources of the same type in one location—without considering dependencies—does not save money. Instead, it is best to provision resources in the same resource group as the applications that use them for better management and alignment with their lifecycle.

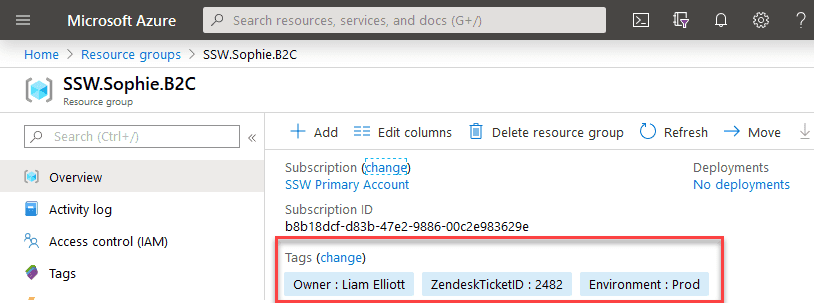

To help maintain order and control in your Azure environment, applying tags to resources and resource groups is the way to go.

Azure has the Tag feature, which allows you to apply different Tag Names and values to Resources and Resource Groups:

Figure: Little example of Tags in Resource Groups

You can leverage this feature to organize your resources in a logical way, rather than relying on names alone. E.g.

- Owner tag: You can specify who owns that resource

- Environment tag: You can specify which environment that resource is in

Tip: Do not forget to have a strong naming convention document stating how those tags and resources should be named. You can use this Microsoft guide as a starting point: Recommended naming and tagging conventions.

Azure is Microsoft's Cloud service. However, you have to pay for every little bit of service that you use.

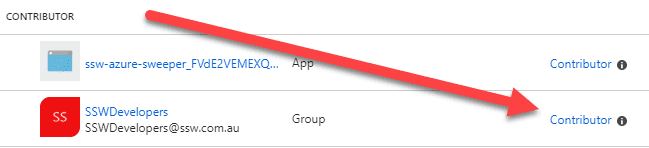

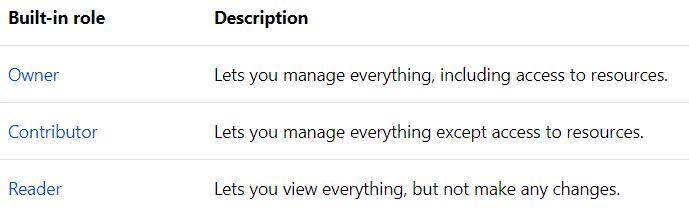

Before diving in, it is good to have an understanding of the basic built-in user roles:

Figure: Roles in Azure

More info: https://docs.microsoft.com/en-us/azure/role-based-access-control/built-in-roles

It's not a good idea to give everyone 'Contributor' access to Azure resources in your company. The reason is cost: Contributors can add/modify the resources used, and therefore increase your Azure bill at the end of the month. Although a single change might represent 'just a couple of dollars', everything summed up may increase the bill significantly.

The best practice is to have an Azure Spend Master. This person controls the level of access granted to users: "Reader" access for users who do not need to (or should not) make changes to Azure resources, and "Contributor" access for users who need to add/modify resources, bearing in mind the cost of every change.

Also, keep in mind that you should be giving access to security groups and not individual users. It is easier, simpler, and keeps things much better structured.

Always inform stakeholders in advance if you anticipate a significant increase in Azure resource costs. This proactive communication is crucial for budget planning and avoiding unexpected expenses.

Why This Matters

- Budget Management: Sudden spikes in costs can disrupt budget allocations and financial planning.

- Transparency: Keeping stakeholders informed fosters trust and transparency in operations.

- Planning: Advance notice allows for better resource allocation and potential cost optimization strategies.

How to Implement

- Communicate Early: As soon as a potential cost increase is identified, communicate this to relevant stakeholders.

- Provide Details: Include information about the cause of the spike, expected duration, and any steps being taken to mitigate costs.

Scenarios

A team needs to perform a bulk update on millions of records in an Azure Cosmos DB instance, a task that requires scaling up the throughput units substantially. They proceed without notifying anyone, assuming the cost would be minimal as usual. However, the intensive usage for a week leads to an unexpectedly high bill, causing budgetary concerns and dissatisfaction among stakeholders.

Figure: Bad example - Nobody likes a surprise bill

Before running a large-scale data migration on Azure SQL Database, which is expected to significantly increase DTU (Database Transaction Unit) consumption for a week, the team calculates the expected cost increase. They inform the finance and management teams, providing a detailed report on the reasons for the spike, the benefits of the migration, and potential cost-saving measures.

Then send an email (as per the template below)

Figure: Informing and emailing stakeholders before a spike makes everyone happy

Email template

To: {{ SpendMaster (aka SysAdmins) }}
Subject: {{ PROJECT NAME }} - Cost spike due to data migration

Remember, effective communication about cost management is key to maintaining a healthy and transparent relationship with all stakeholders involved in your Azure projects.

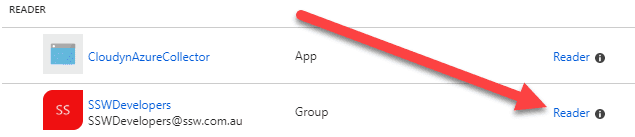

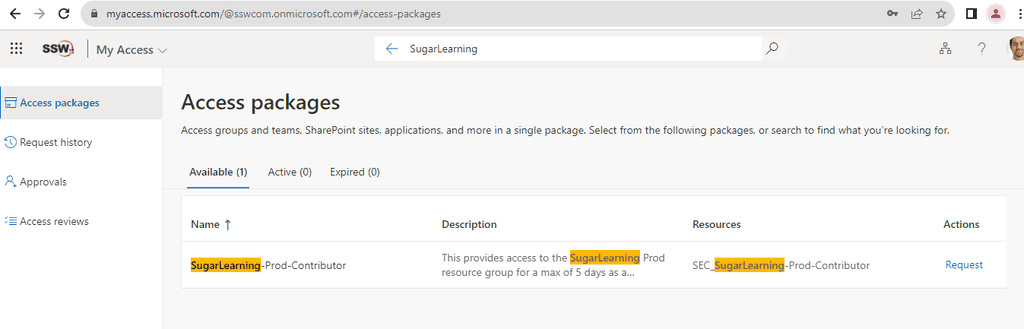

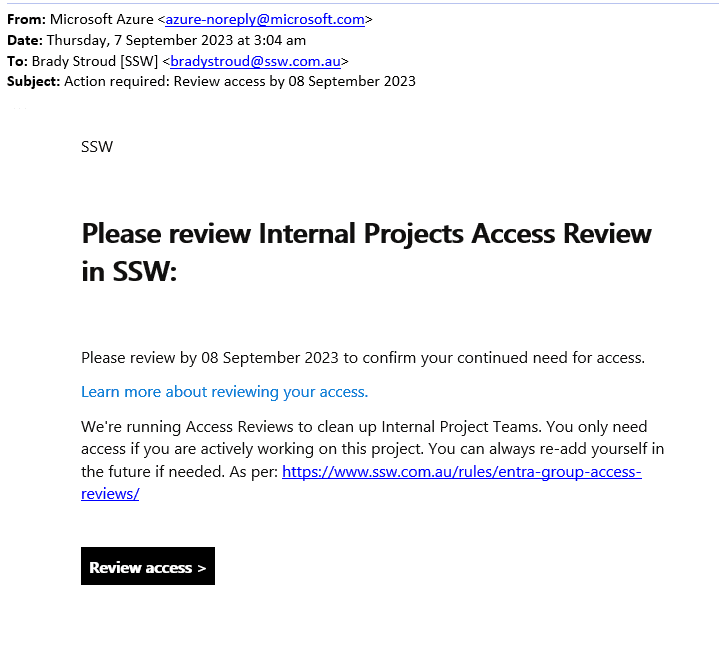

In today's complex digital landscape, managing user access to resources can be a daunting task for organizations. Entra Access Packages emerge as a game-changer in this scenario, offering a streamlined and efficient approach to identity and access management.

By bundling related resources into cohesive packages, they simplify the process of granting, reviewing, and revoking access. This not only reduces administrative overhead but also enhances security by ensuring that users have the right permissions at the right time. Furthermore, with built-in automation features like approval workflows and periodic access reviews, organizations can maintain a robust and compliant access governance structure. Adopting Entra Access Packages is a strategic move for businesses aiming to strike a balance between operational efficiency and stringent security.

❌ Bad Example - Manually Requesting Access via Email

In the old-fashioned way, users would send an email to the SysAdmins requesting access to a specific resource. This method is prone to errors, lacks an audit trail, and can lead to security vulnerabilities.

To: SysAdmins
Cc:
Subject: Request for Access to SugarLearning Prod

Figure: Bad example - This requires manual changes by a SysAdmin

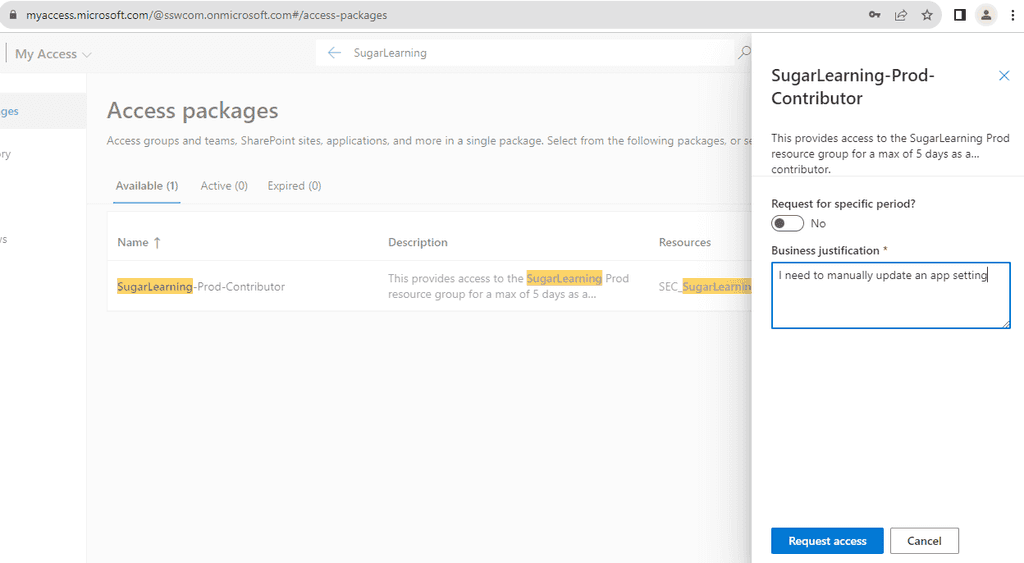

✅ Good Example - Requesting Access via myaccess.microsoft.com

Instead of manually sending emails, users can request access through myaccess.microsoft.com, which provides a streamlined, auditable, and secure method.

- Navigate to myaccess.microsoft.com

- Search for the desired resource or access package.

Figure: Search for the required resource

- Request Access by selecting the appropriate access package and filling out any necessary details.

Figure: Request Access

- Wait for approval from the people responsible for the resource

If you require immediate access, ping them on Teams.

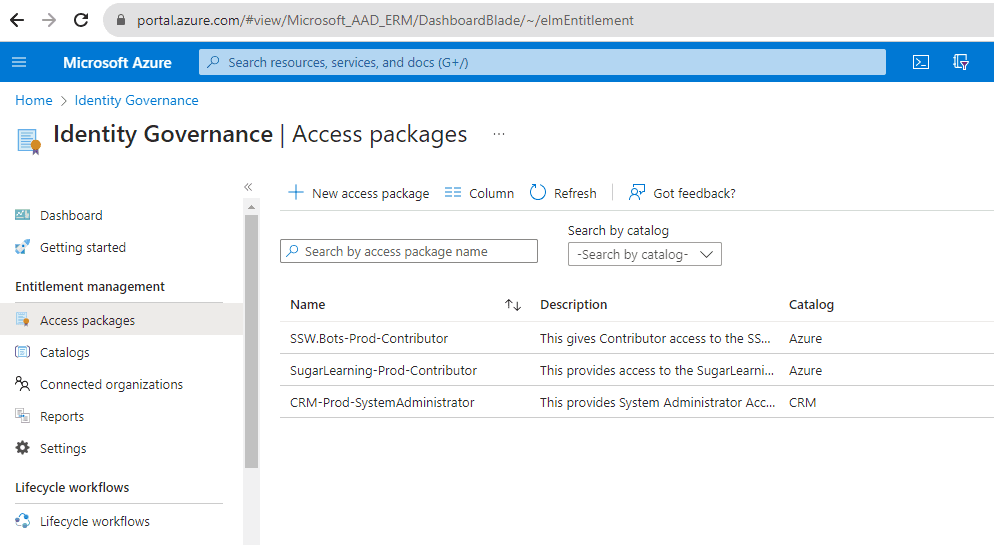

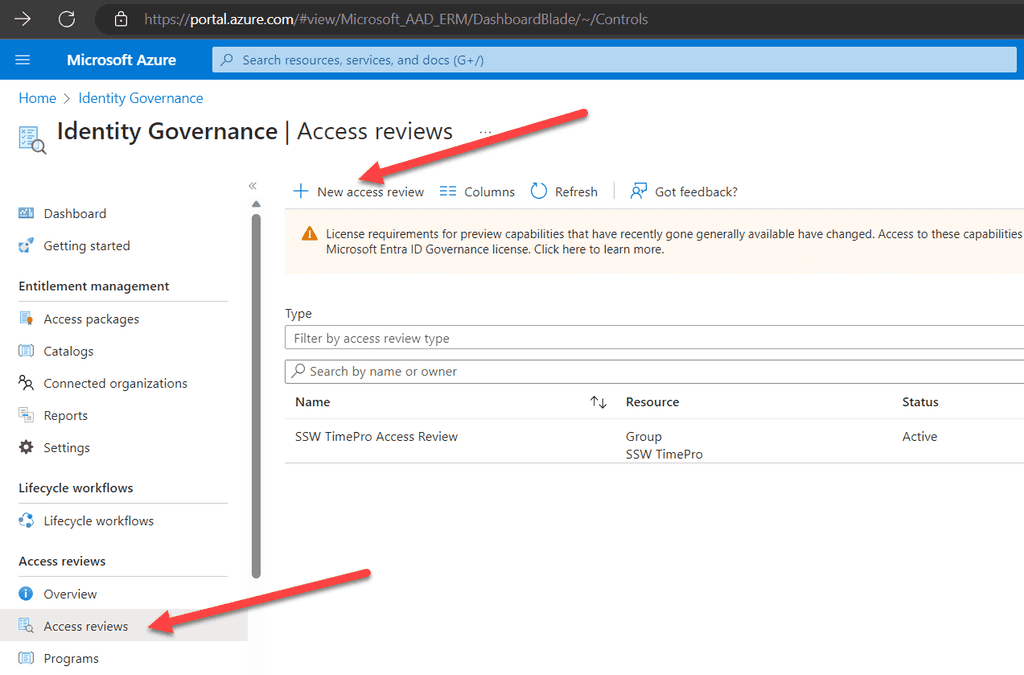

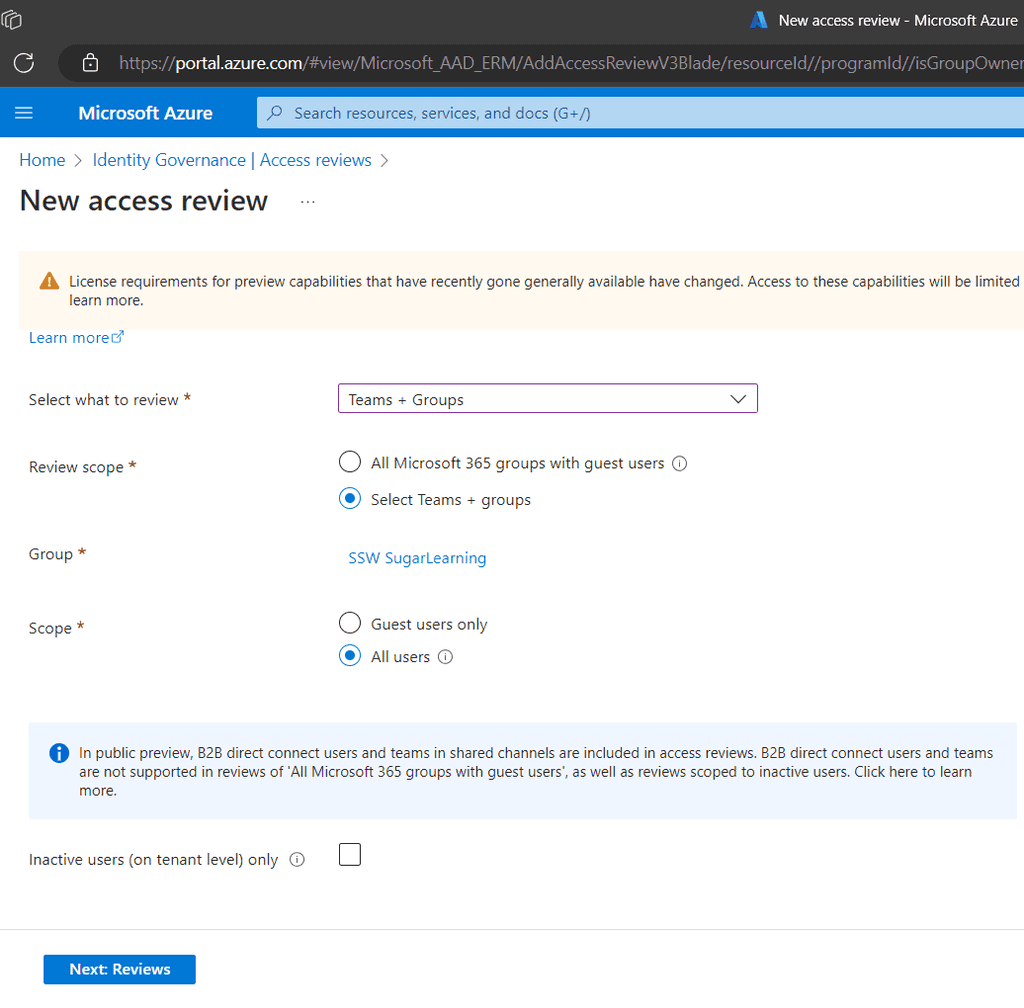

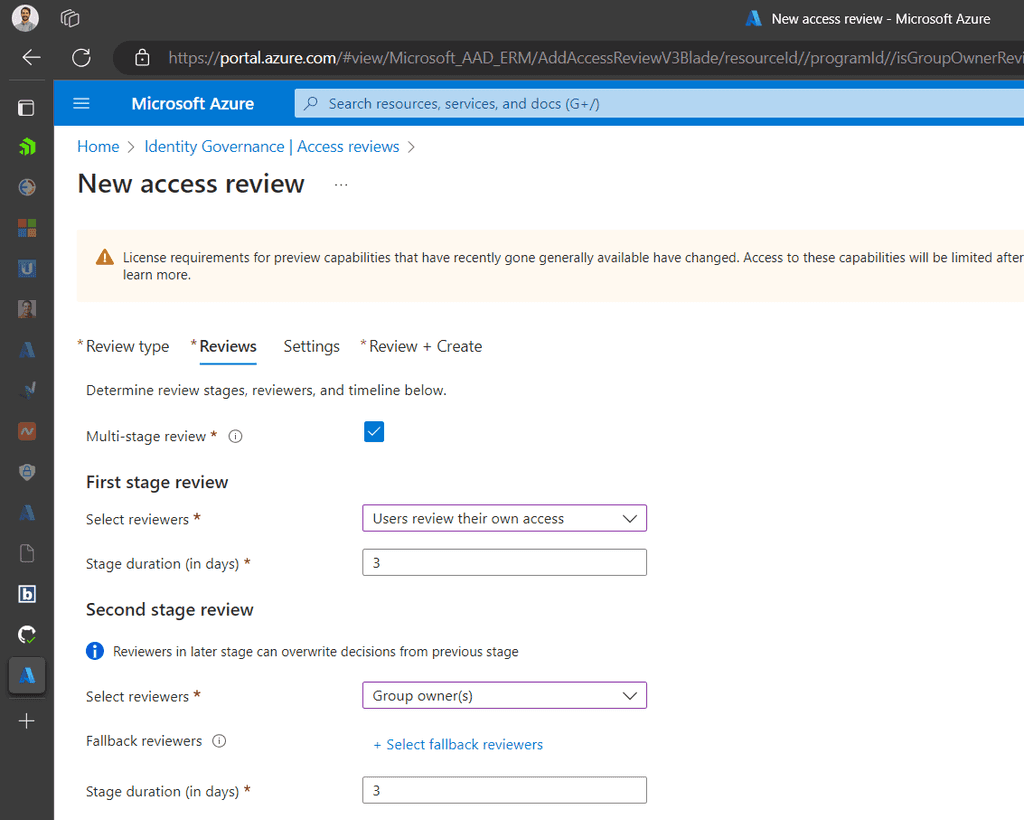

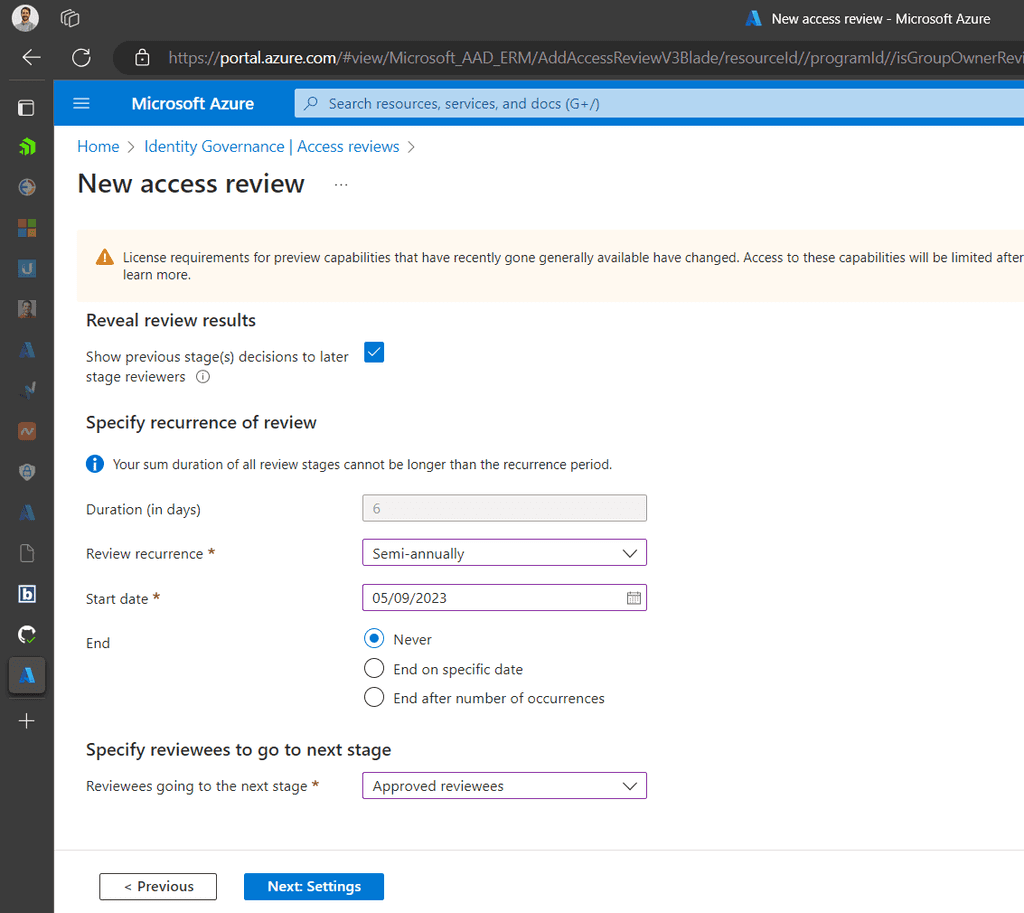

Steps to Create an Access Package

-

Open Azure Portal: Navigate to Entra ID | Identity Governance | Access packages.

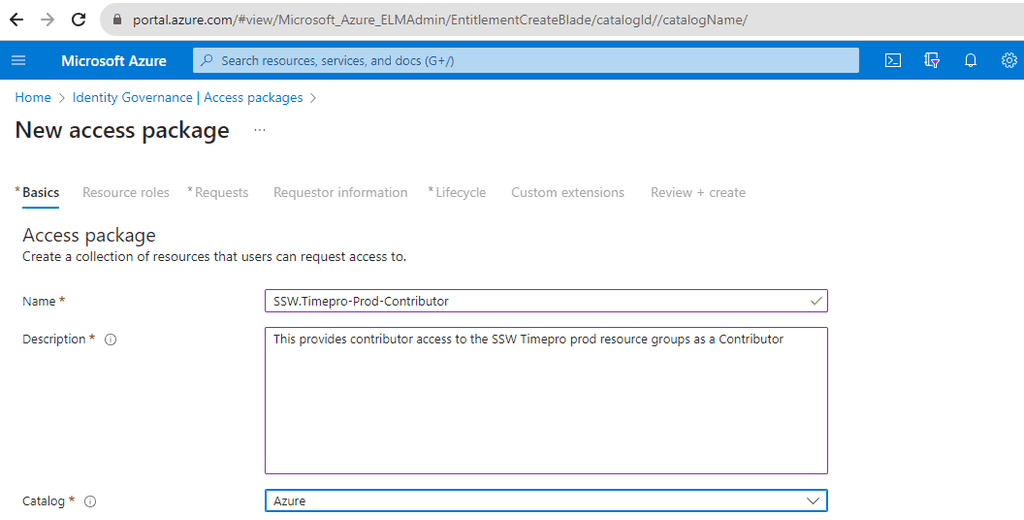

Figure: Navigate to Azure portal | Access packages | New Access package - New Access Package: Click on

+ New access package. -

Fill Details: Provide a name, description, and select the catalog for the access package.

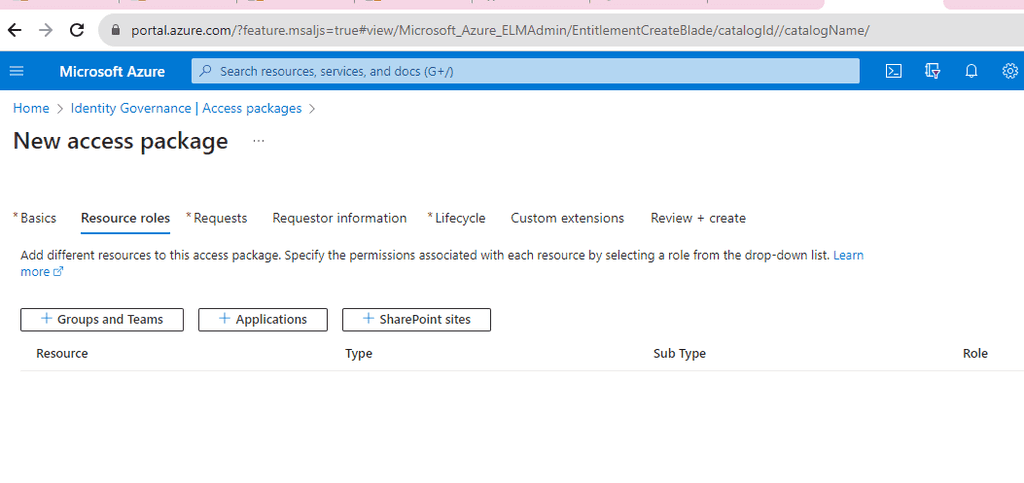

Figure: Fill out the details and choose a catalog -

Define Resources: Add the resources (applications, groups, SharePoint sites) that users will get access to when they request this package.

Figure: Add the required resources -

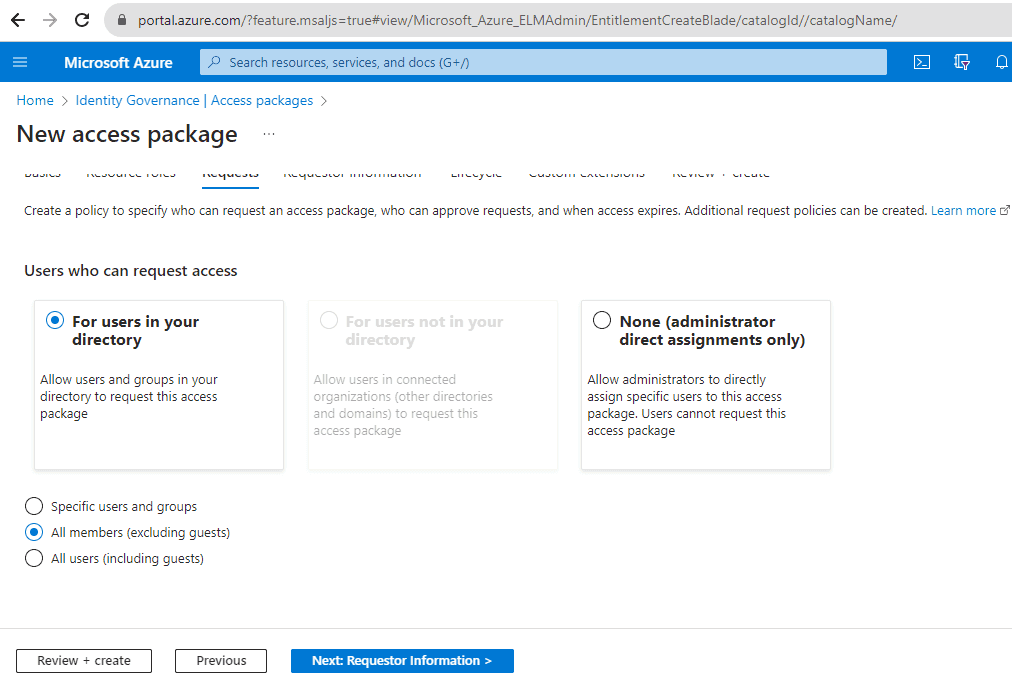

Set Policies: Define who can request the package, approval workflows, duration of access, and other settings.

Figure: Choose the types of users that can request access

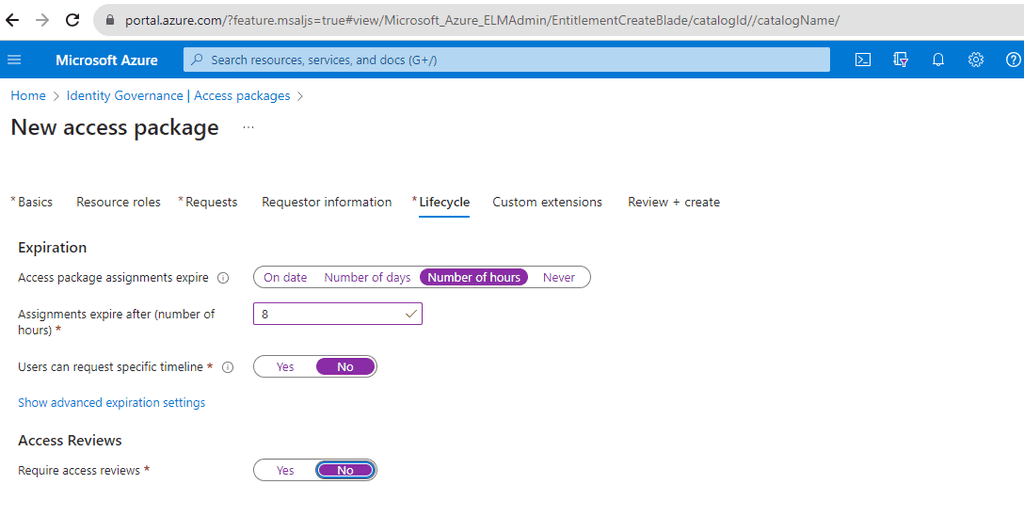

Figure: Choose policies that match the level of access -

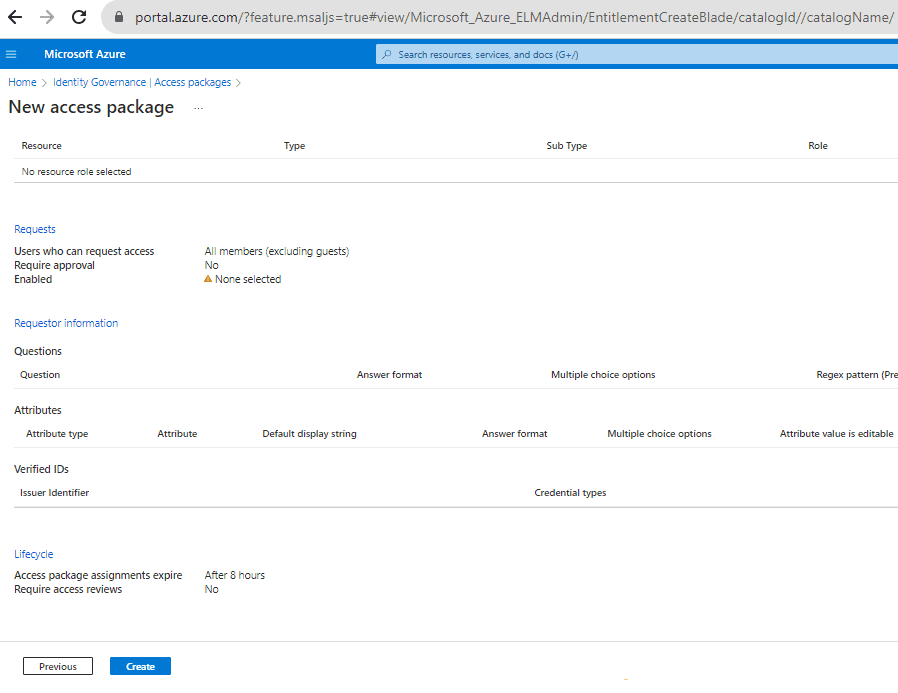

Review and Create: Ensure all details are correct and then create the access package.

Figure: Review the settings and create the policy

-

Azure transactions are CHEAP. You get tens of thousands for just a few cents. What is dangerous though is that it is very easy to have your application generate hundreds of thousands of transactions a day.

Every call to Windows Azure Blobs, Tables and Queues counts as 1 transaction. Windows Azure diagnostic logs, performance counters, trace statements and IIS logs are written to Table Storage or Blob Storage.

If you are unaware of this, it can quickly add up and either burn through your free trial account, or even create a large unexpected bill.

Note: Azure Storage Transactions do not count calls to SQL Azure.

Be aware that Azure Functions Queue and Event Hub Triggers can cause lots of transactions

Both of these triggers can cause a lot of transactions. Typically this is controlled by the batch size you configure. What happens is that the Functions runtime needs to read and write a watermark into blob storage. This is a record of what items have been read from the Queue or Event Hub. So the bigger the batch size, the less often these records get written. If you expect your function to potentially be triggered a lot, make the batch size bigger.

Many people set the batch size to 1, which results in ~2 storage transactions per trigger, which can get expensive quite fast.
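To get a feel for how batch size affects the bill, here is a rough estimate. It assumes about 2 storage transactions per batch, extrapolated from the "~2 per trigger at batch size 1" figure above. Real numbers depend on the trigger type and runtime version, and the function name is hypothetical.

```python
import math

# Assumption based on the text: roughly 2 storage transactions per batch
# (reading and writing the watermark). Treat this as an estimate only.
TRANSACTIONS_PER_BATCH = 2


def estimated_storage_transactions(messages, batch_size):
    """Estimate watermark-related storage transactions for a number of messages."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    batches = math.ceil(messages / batch_size)
    return batches * TRANSACTIONS_PER_BATCH


for size in (1, 16, 64):
    print(size, estimated_storage_transactions(1_000_000, size))
```

Under these assumptions, one million messages cost about 2,000,000 transactions at batch size 1, but only about 31,250 at batch size 64.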

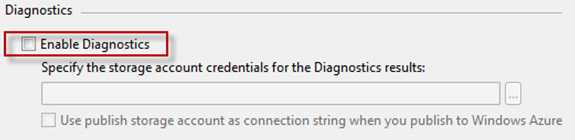

Ensure that Diagnostics are Disabled for your web and worker roles

Having Diagnostics enabled can contribute 25 transactions per minute, which is 36,000 transactions per day (25 × 60 × 24).

Question for Microsoft: Is this per Web Role?

Figure: Check the properties of your web and worker role configuration files

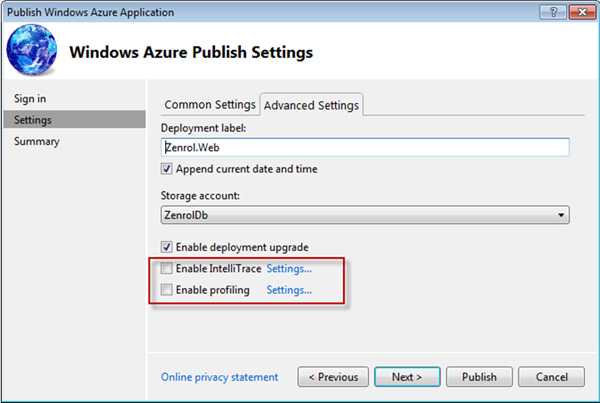

Figure: Disable diagnostics

Disable IntelliTrace and Profiling

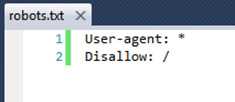

Figure: When publishing, ensure that IntelliTrace and Profiling are both disabled

Robots.txt

Search bots crawling your site to index it will lead to a lot of transactions. Especially for web "applications" that do not need to be searchable, use robots.txt to save transactions.

Figure: Place robots.txt in the root of your site to control search engine indexing

Continuous Deployment

When deploying to Azure, the deployment package is loaded into the Storage Account. This will also contribute to the transaction count.

If you have enabled continuous deployment to Azure, you will need to monitor your transaction usage carefully.


Microsoft Azure SQL Database has built-in backups to support self-service Point in Time Restore and Geo-Restore for Basic, Standard, and Premium service tiers.

You should use the built-in automatic backup in Azure SQL Database versus using T-SQL.

T-SQL: CREATE DATABASE destinationdatabasename AS COPY OF [source_server_name].sourcedatabasename

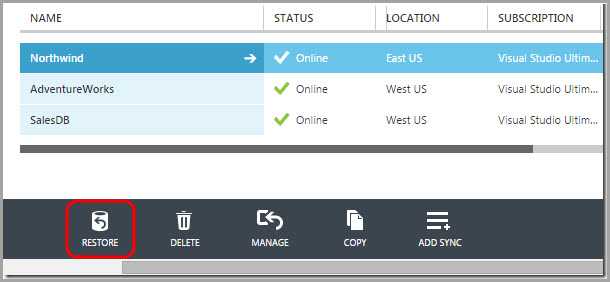

Figure: Bad example - Using T-SQL to restore your database

Figure: Good example - Using the built-in SQL Azure Database automatic backup system to restore your database

Azure SQL Database automatically creates backups of every active database using the following schedule: full database backup once a week, differential database backups once a day, and transaction log backups every 5 minutes. The full and differential backups are replicated across regions to ensure the availability of the backups in the event of a disaster.

Backup Storage

Backup storage is the storage associated with your automated database backups that are used for Point in Time Restore and Geo-Restore. Azure SQL Database provides up to 200% of your maximum provisioned database storage of backup storage at no additional cost.

| Service Tier | Geo-Restore | Self-Service Point in Time Restore | Backup Retention Period | Restore a Deleted Database |
| --- | --- | --- | --- | --- |
| Web | Not supported | Not supported | n/a | n/a |
| Business | Not supported | Not supported | n/a | n/a |
| Basic | Supported | Supported | 7 days | √ |
| Standard | Supported | Supported | 14 days | √ |
| Premium | Supported | Supported | 35 days | √ |

Figure: All the modern SQL Azure Service Tiers support backup. Web and Business tiers are being retired and do not support backup. Check Web and Business Edition Sunset FAQ for up-to-date retention periods
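As a quick illustration of what the retention periods mean in practice, the sketch below checks whether a given restore point still falls inside the window for a tier. The retention values are copied from the table above. The function name is hypothetical, and the check ignores everything else that affects a real restore.

```python
from datetime import datetime, timedelta

# Retention periods (in days) copied from the table above.
RETENTION_DAYS = {"Basic": 7, "Standard": 14, "Premium": 35}


def can_point_in_time_restore(tier, restore_point, now):
    """Return True if restore_point is within the tier's retention window."""
    days = RETENTION_DAYS.get(tier)
    if days is None:
        # Web and Business tiers do not support Point in Time Restore.
        return False
    return now - timedelta(days=days) <= restore_point <= now


now = datetime(2024, 1, 31)
eleven_days_ago = datetime(2024, 1, 20)
print(can_point_in_time_restore("Basic", eleven_days_ago, now))
print(can_point_in_time_restore("Standard", eleven_days_ago, now))
```

A restore point 11 days old is outside the 7-day Basic window but inside the 14-day Standard window.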

Learn more on Microsoft documentation:

Other ways to back up Azure SQL Database:

- Microsoft Blog - Different ways to Backup your Windows Azure SQL Database

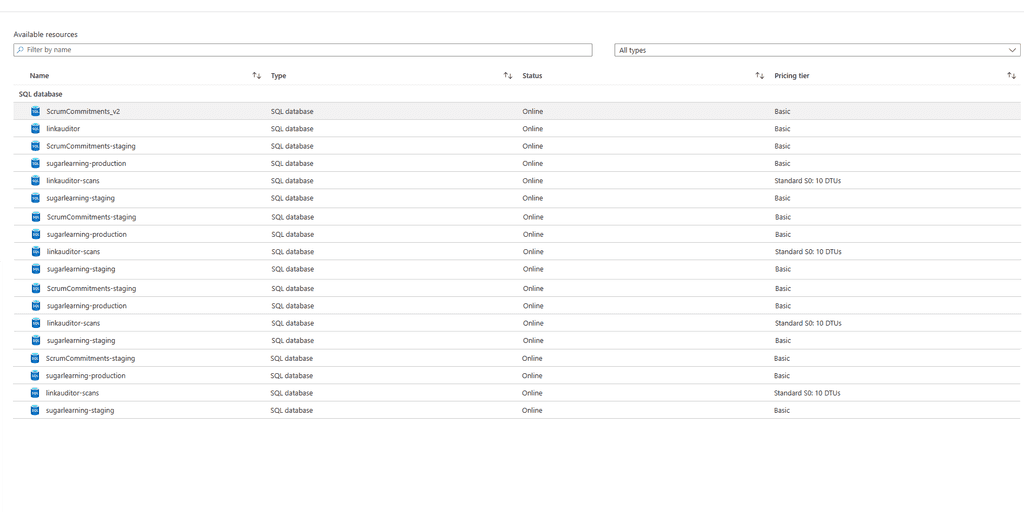

Choosing the right Azure database model is essential for building scalable, cost-effective, and efficient multi-tenant applications. With Azure SQL offering multiple deployment options, selecting the optimal model requires a clear understanding of your application's tenant structure, usage patterns, and data isolation requirements.

What's the pain?

Many developers struggle to choose the right database model for multi-tenant applications. Picking the wrong option can lead to unnecessary costs, scalability issues, or poor performance. For example:

- A SaaS application serving 200 tenants may use a single database per tenant, leading to high costs and underutilized resources

- Conversely, pooling all tenants in one shared database might create security risks or performance bottlenecks

Tip: Even before you choose a database model, consider if your application truly needs a multi-tenant architecture. Single-tenant architecture might be simpler for some use cases.

Common Mistakes When Choosing Database Models

One-Size-Fits-All Approach

Some developers apply the same database model regardless of tenant requirements, workload patterns, or scale. This often leads to inefficiencies and increased costs.

Figure: Bad example - Using a dedicated database for each of your 500 small tenants results in excessive costs and management overhead

Ignoring Security Considerations

Pooling tenants in a shared database without proper security measures can expose sensitive data across tenants.

```sql
-- No row-level security, just application-level filtering
SELECT * FROM SalesData WHERE TenantID = @TenantID;
```

Figure: Bad Example – No row-level security exposes all tenant data if a query bypasses application logic

Azure SQL Database Models - Your Options

Azure SQL offers several deployment models to suit different scenarios. Here's how to choose the right one for your needs:

Option A: Single Database per Tenant

Each tenant gets its own dedicated Azure SQL Database.

✅ Advantages:

- Full tenant isolation

- Independent scaling for each tenant

- Simplified backup/restore for individual tenants

❌ Disadvantages:

- High costs as tenant count grows

- Management complexity increases with more databases

- Inefficient resource utilization for inactive tenants

Best for:

- Applications with strict data isolation requirements (e.g., regulatory compliance)

- Scenarios with few tenants or high-value clients

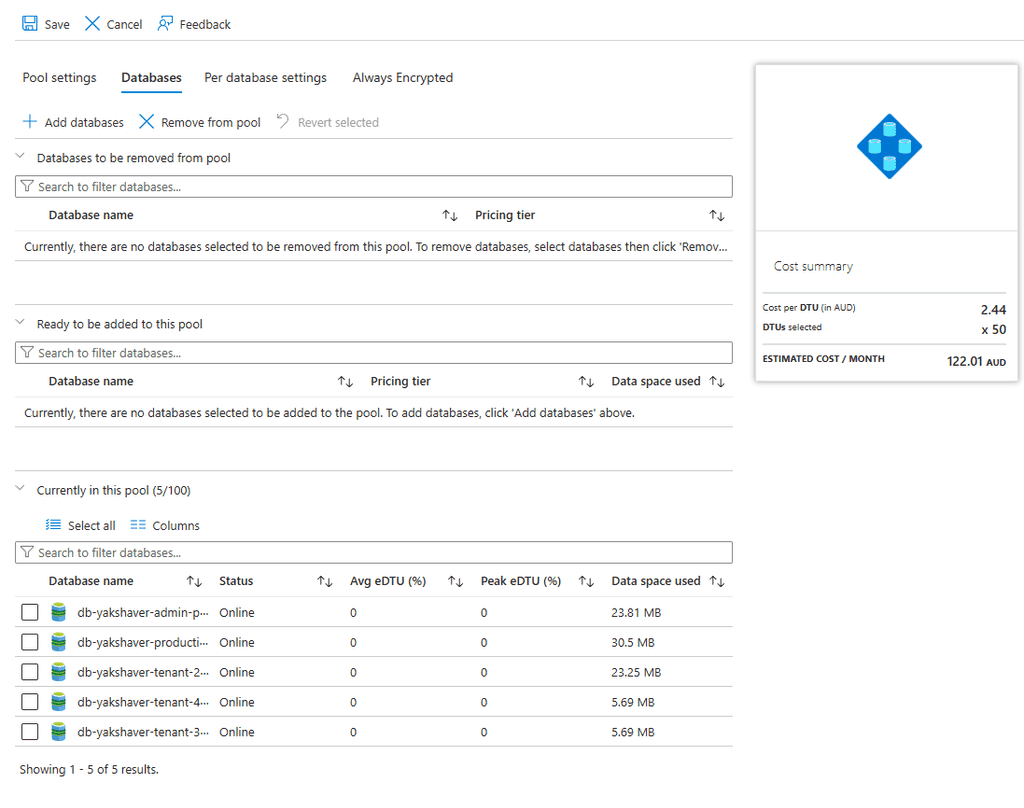

Option B: Elastic Pool (Recommended for most scenarios)

Multiple single-tenant databases share resources within an elastic pool.

✅ Advantages:

- Cost-effective for variable workloads

- Maintains data isolation between tenants

- Dynamically allocates resources based on demand

❌ Disadvantages:

- Requires careful pool sizing to avoid over/under-provisioning

- Limited cost savings for very inactive tenants

Best for:

- Applications with variable usage patterns across tenants

- Scenarios where some tenants are inactive at any given time

- Both small and large numbers of tenants, depending on workload characteristics

Figure: Good example - Elastic Pool efficiently distributes resources among databases with variable workloads

Option C: Shared Multi-Tenant Database

All tenants share a single database with a schema that includes tenant identifiers.

✅ Advantages:

- Lowest per-tenant cost

- Simplified management and deployment

- Efficient resource utilization

❌ Disadvantages:

- Reduced tenant isolation

- Security implementation requires proper Row-Level Security (RLS)

- Harder to restore individual tenant data

Best for:

- Applications with hundreds or thousands of tenants

- Scenarios where cost efficiency is paramount and strict isolation isn't required

```sql
-- Implementing Row-Level Security in a shared database
CREATE SECURITY POLICY TenantFilter
ADD FILTER PREDICATE rls.fn_TenantAccessPredicate(TenantID)
ON dbo.SalesData;
```

Figure: Good Example – Row-level security ensures tenant isolation at the database level

Option D: Serverless

Azure SQL's serverless tier automatically scales compute resources based on workload demand.

✅ Advantages:

- Ideal for unpredictable workloads or inactive periods

- Pay only for active usage (per-second billing)

- Automatic pause/resume reduces costs during inactivity

❌ Disadvantages:

- Cold start delays when resuming from pause

- Limited scaling range (0.5-16 vCores in General Purpose tier)

Best for:

- Development/test environments

- Early-stage SaaS applications with uncertain growth patterns

Tip: Serverless is perfect for development environments where the database is only used during working hours, automatically pausing outside of these times to save costs.

Option E: Hyperscale

The Hyperscale service tier supports very large databases and extreme scalability requirements.

✅ Advantages:

- Supports databases up to 100 TB

- Fast backups and restores

- Near-instant read scale-out capabilities

❌ Disadvantages:

- Higher costs compared to other tiers

- Overhead in managing very large datasets

Best for:

- Applications requiring massive storage or extreme scalability (e.g., analytics platforms)

Option F: Hybrid Approaches

Combine multiple models or technologies to address diverse requirements.

✅ Advantages:

- Optimized cost-to-performance ratio for different tenant tiers

- Flexibility to meet various tenant requirements

- Ability to scale different components independently

❌ Disadvantages:

- Increased architectural complexity

- Higher operational overhead managing multiple systems

- Potential for increased development costs

Examples:

- Use Azure SQL Database for relational data and Cosmos DB for globally distributed NoSQL data

- Tiered service levels:

  - High-value tenants on dedicated databases

  - Low-value tenants in a shared database

Best for:

- SaaS platforms with diverse tenant profiles or tiered pricing models

- Applications with mixed data access patterns

- Systems requiring both OLTP and OLAP capabilities

Cost Optimization Example: A financial services platform uses a hybrid approach where premium clients ($10,000+/month) get dedicated databases with guaranteed performance, while standard clients share elastic pools, and entry-level clients use a multi-tenant database with row-level security. This approach optimizes both performance and cost across different customer segments.
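The tiering in this scenario boils down to a lookup from customer tier to database model. The sketch below shows that mapping; the tier labels and the function name `database_model_for` are illustrative and do not come from any Azure API.

```python
# Mapping taken from the scenario above; the tier labels are illustrative.
MODEL_BY_TIER = {
    "premium": "dedicated database",
    "standard": "elastic pool",
    "entry": "shared multi-tenant database with row-level security",
}


def database_model_for(tier):
    """Return the database model used for a customer tier."""
    try:
        return MODEL_BY_TIER[tier.lower()]
    except KeyError:
        raise ValueError(f"unknown tenant tier: {tier!r}") from None


print(database_model_for("Premium"))
```

Keeping the mapping in one place makes it easier to move a tenant between tiers without touching provisioning logic.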

Decision Framework

When choosing the right Azure database model for your multi-tenant application, use this decision framework:

Step 1: Determine your isolation requirements

- Do you need physical isolation (dedicated DB) or is logical isolation (RLS) sufficient?

- Are there regulatory/compliance requirements?

Step 2: Analyze your tenant workload patterns

- Are workloads predictable or variable?

- Do tenants have similar usage patterns or different ones?

Step 3: Consider your scaling requirements

- How many tenants do you expect now and in the future?

- What is the expected data volume per tenant?

Step 4: Evaluate your budget constraints

- What is your per-tenant cost target?

- Are you optimizing for predictable costs or minimal costs?
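The four steps can be turned into a rough first-pass suggestion. The sketch below is only a heuristic built from the "Best for" notes in this rule. The thresholds (`FEW_TENANTS`, `MANY_TENANTS`) and all names are assumptions for illustration, not Azure guidance.

```python
from dataclasses import dataclass

# Illustrative thresholds; tune them to your own situation.
FEW_TENANTS = 10
MANY_TENANTS = 100


@dataclass
class TenantProfile:
    needs_physical_isolation: bool  # Step 1: isolation requirements
    variable_workloads: bool        # Step 2: workload patterns
    tenant_count: int               # Step 3: scaling requirements
    cost_is_paramount: bool         # Step 4: budget constraints


def suggest_model(p):
    """Return a first-pass database model suggestion for a tenant profile."""
    few = p.tenant_count <= FEW_TENANTS
    if few and (p.needs_physical_isolation or not p.variable_workloads):
        return "Single database per tenant"
    if p.needs_physical_isolation:
        # Still one database per tenant, but with pooled resources.
        return "Elastic pool"
    if p.cost_is_paramount and p.tenant_count >= MANY_TENANTS:
        return "Shared multi-tenant database (with RLS)"
    return "Elastic pool"


print(suggest_model(TenantProfile(False, True, 200, True)))
```

Treat the result as a starting point for discussion. Serverless, Hyperscale and hybrid options still need a case-by-case look.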

Cost Considerations

When planning costs, consider more than just compute pricing:

- Compute Resources: vCores/DTUs

- Storage Costs: Allocated storage and backup retention

- IO Operations: Read/write operations can add up in high-throughput applications

- Network Egress: Data transfer out of Azure regions incurs charges

Example Scenario: A SaaS provider with 50 small business clients switched from dedicated databases (S2 tier, $75/month each) to an Elastic Pool (S2, 50 DTUs/database, 200 total DTUs at $560/month), reducing costs by over 85% while maintaining performance and isolation.
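The saving in this scenario can be checked with a few lines of arithmetic. The prices are the ones quoted above, not current Azure list prices, and the function name is hypothetical.

```python
def saving_fraction(database_count, price_per_database, pool_price):
    """Return the fraction saved by moving dedicated databases into one pool."""
    dedicated_total = database_count * price_per_database
    return 1 - pool_price / dedicated_total


# 50 dedicated databases at $75/month versus one pool at $560/month.
dedicated = 50 * 75
saving = saving_fraction(50, 75, 560)
print(f"${dedicated} -> $560 per month: {saving:.1%} saving")
```

With these figures the dedicated setup costs $3,750 per month, so the pool saves roughly 85%.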

Security Best Practices

Even with separate databases, proper security measures are essential:

- Use Azure AD Authentication for centralized identity management

- Implement Row-Level Security (RLS) in shared databases

- Enable Transparent Data Encryption (TDE) for all databases

- Use Always Encrypted for sensitive data fields

- Implement proper audit logging with Azure SQL Auditing

Conclusion

Choosing the right Azure database model requires balancing cost, scalability, and isolation needs. While single databases offer maximum isolation, elastic pools and shared databases provide cost-efficient alternatives for multi-tenancy at scale. Serverless tiers excel in handling unpredictable workloads, while Hyperscale supports extreme scalability demands.

By carefully evaluating your application's requirements and leveraging Azure's diverse offerings, you can design an optimal architecture that scales efficiently while controlling costs.

Note: Always consider future growth patterns when selecting your database model. It's easier to start with a more isolated model and consolidate later than to separate tenants after they're combined.

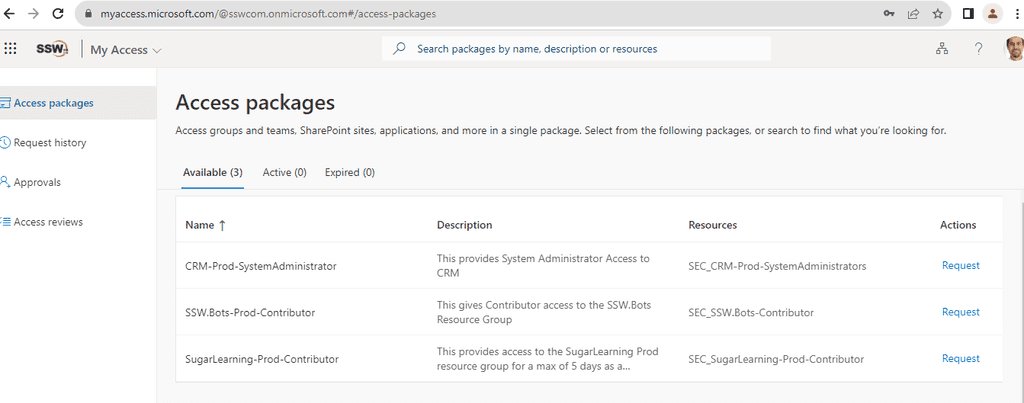

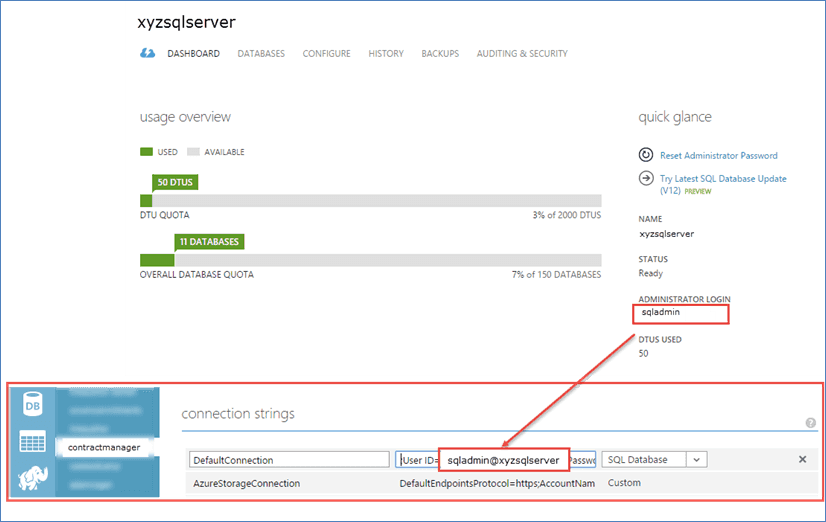

Do you configure your web applications to use application specific accounts for database access?

An application's database access profile should be as restricted as possible, so that in the case that it is compromised, the damage will be limited.

Application database access should also be restricted to only the application's database, and none of the other databases on the server.

Figure: Bad example – Contract Manager Web Application using the administrator login in its connection string

Most web applications need full read and write access to one database. In the case of EF Code First migrations, they might also need DDL admin rights. These roles are built-in database roles:

| Role | Description |
| --- | --- |
| db_ddladmin | Members of the db_ddladmin fixed database role can run any Data Definition Language (DDL) command in a database. |
| db_datawriter | Members of the db_datawriter fixed database role can add, delete, or change data in all user tables. |
| db_datareader | Members of the db_datareader fixed database role can read all data from all user tables. |

Table: Database roles taken from Database-Level Roles

If you are running a web application on Azure, you should configure your application to use its own specific account that has some restrictions. The following script demonstrates setting up a SQL user for myappstaging, an application that also uses EF Code First migrations (repeat the same steps for myappproduction):

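```sql
-- Create the login (and a matching user) in the master database
USE master
GO
CREATE LOGIN myappstaging WITH PASSWORD = '************';
GO
CREATE USER myappstaging FROM LOGIN myappstaging;
GO
-- Create the user in the application database
-- (the hyphenated database name must be wrapped in brackets)
USE [myapp-staging-db];
GO
CREATE USER myappstaging FROM LOGIN myappstaging;
GO
-- Grant only the roles the application needs
EXEC sp_addrolemember 'db_datareader', 'myappstaging';
EXEC sp_addrolemember 'db_datawriter', 'myappstaging';
EXEC sp_addrolemember 'db_ddladmin', 'myappstaging';
```

Figure: Example script to create a service user for myappstaging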

Note: If you are using stored procedures, you will also need to grant execute permissions to the user. E.g.

```sql
GRANT EXECUTE TO myappstaging
```

```text
Data Source=tcp:xyzsqlserver.database.windows.net,1433; Initial Catalog=myapp-staging-db; User ID=myappstaging@xyzsqlserver; Password='*************'
```

Figure: Example connection string

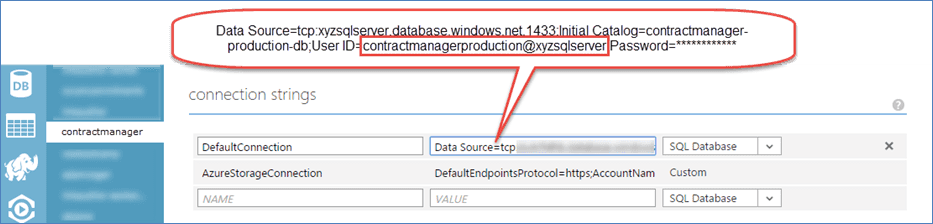

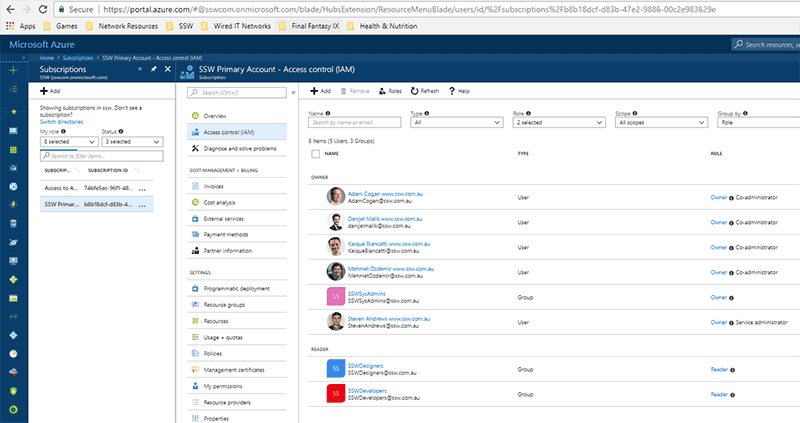

Like other services, it is important that your company has a structured and secure approach to managing Azure Permissions.

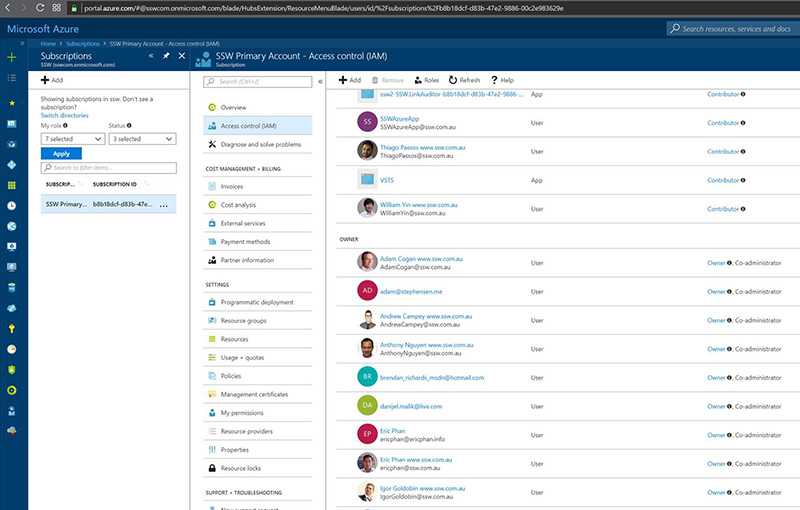

First a little understanding of how Azure permissions work. For each subscription, there is an Access Control (IAM) section that will allow you to grant overall permissions to this Azure subscription. It is important to remember that any access that is given under Subscriptions | "Subscription Name" | Access Control (IAM), will apply to all Resource Groups within the Subscription.

Figure: Good example - Only Administrators that will be managing overall permissions and content have been given Owner/Co-administrator

From the above image, only the main Administrators have been given Owner/Co-administrator access; all other users within the SSWDesigners and SSWDevelopers Security Groups have been given Reader access. The SSWSysAdmins Security group has also been included as an owner, which will assist in case permissions are accidentally stripped from the current Owners.

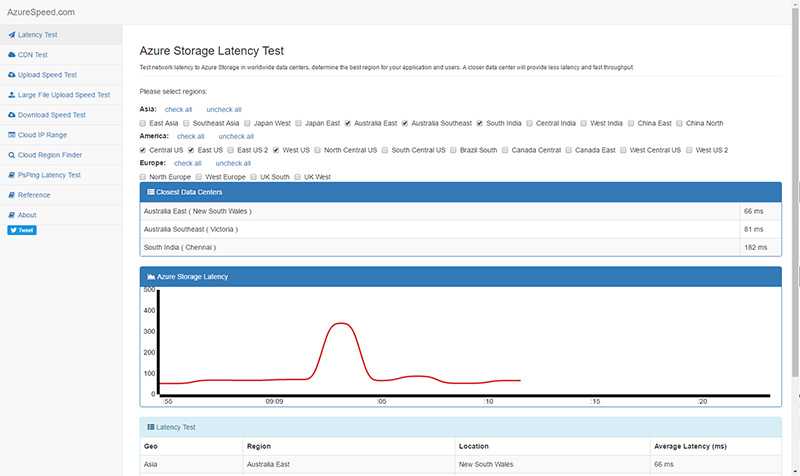

Here's a cool site that tests the latency of Azure Data Centres from your machine. It can be used to work out which Azure Data Centre is best for your project based on the target user audience: http://www.azurespeed.com

As well as testing latency it has additional tests that come in handy like:

- CDN Test

- Upload Test

- Large File Upload Test

- Download Test

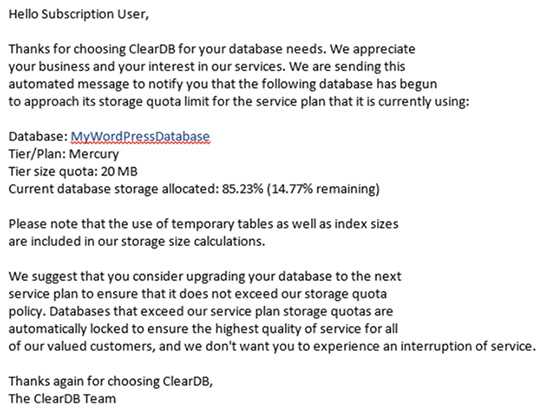

Figure: AzureSpeed.com example

Setting up a WordPress site hosted on Windows Azure is easy and free, but you only get 20 MB of MySQL data on the free plan.

Figure: Once you approach your 20 MB limit you will receive a warning that your database may be suspended

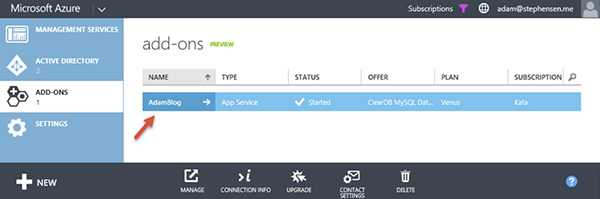

Figure: If you are serious about your blog and including content on it, you should configure a paid Azure Add-on to host your MySQL Database when you set it up

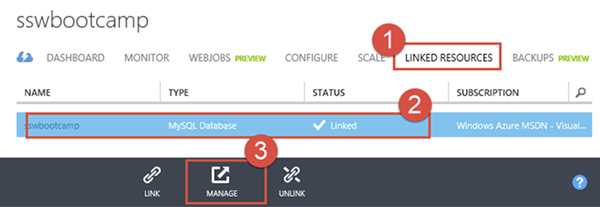

Figure: If you have already created your blog, navigate to your website within the Azure portal, select 'Linked Resources', select the line for the MySQL Database and click the 'Manage' link. This will open the ClearDb portal. Go to the Dashboard and click 'Upgrade'

References: John Papa: Tips for WordPress on Azure.

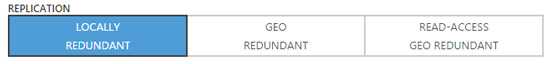

Data in Azure Storage accounts is protected by replication. Deciding how far to replicate it is a balance between safety and cost.

Figure: It is important to balance safety and pricing when choosing the right replication strategy for Azure Storage Accounts

Locally redundant storage (LRS)

- Maintains three copies of your data.

- Is replicated three times within a single facility in a single region.

- Protects your data from normal hardware failures, but not from the failure of a single facility.

- Less expensive than GRS

- Use when:

  - Data is of low importance – e.g. for test websites, or testing virtual machines

  - Data can be easily reconstructed

  - Data is non-critical

  - Data governance requirements restrict data to a single region

Geo-redundant storage (GRS)

- The default when you create storage accounts.

- Maintains six copies of your data.

- Data is replicated three times within the primary region, and is also replicated three times in a secondary region hundreds of miles away from the primary region

- In the event of a failure at the primary region, Azure Storage will failover to the secondary region.

- Ensures that your data is durable in two separate regions.

- Use when:

  - Data cannot be recovered if lost

Read access geo-redundant storage (RA-GRS)

- Replicates your data to a secondary geographic location (same as GRS)

- Provides read access to your data in the secondary location

- Allows you to access your data from either the primary or the secondary location, in the event that one location becomes unavailable.

- Use when:

  - Data is critical, and access is required to both the primary and the secondary regions
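The "Use when" guidance for the three options can be condensed into a small chooser. This sketch covers only the options described here, and the parameter and function names are hypothetical.

```python
def choose_replication(data_is_recoverable, single_region_required, needs_secondary_read):
    """Suggest LRS, GRS or RA-GRS based on the 'Use when' guidance above."""
    if single_region_required or data_is_recoverable:
        # Low-importance or easily rebuilt data, or data that must stay in one region.
        return "LRS"
    if needs_secondary_read:
        # Critical data that must stay readable if the primary region is unavailable.
        return "RA-GRS"
    # Data that cannot be recovered if lost.
    return "GRS"


print(choose_replication(data_is_recoverable=False,
                         single_region_required=False,
                         needs_secondary_read=True))
```

Data governance takes priority in this sketch: if data must stay in a single region, the geo-redundant options are ruled out regardless of how critical the data is.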

More information:

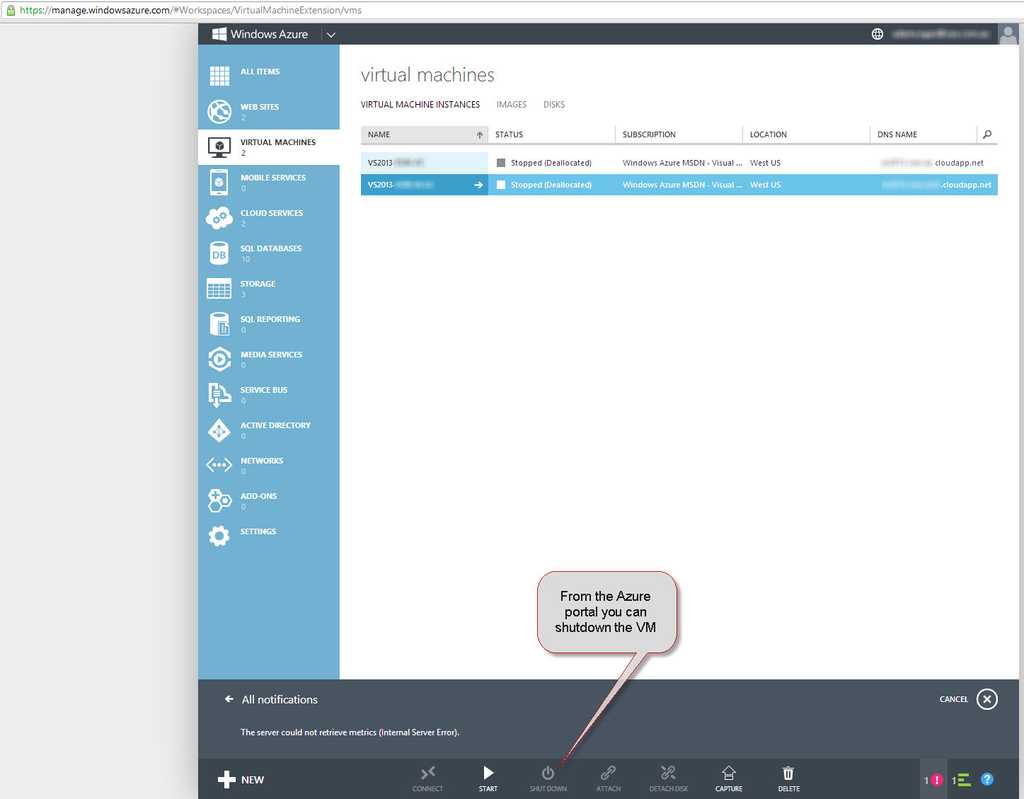

Often we use Azure VMs for presentations, training and development. As there is a cost involved in storing and using a VM, it is important to ensure that the VM is shut down when it is no longer required.

Shutting down the VM will prevent compute charges from accruing. There is still a cost involved for the storage of the VHD files, but these charges are a lot less than the compute charges.

Please note that this applies to Visual Studio subscriptions.

You can shut down the VM either by making a remote desktop connection to the VM and shutting down the server, or by using the Azure portal.

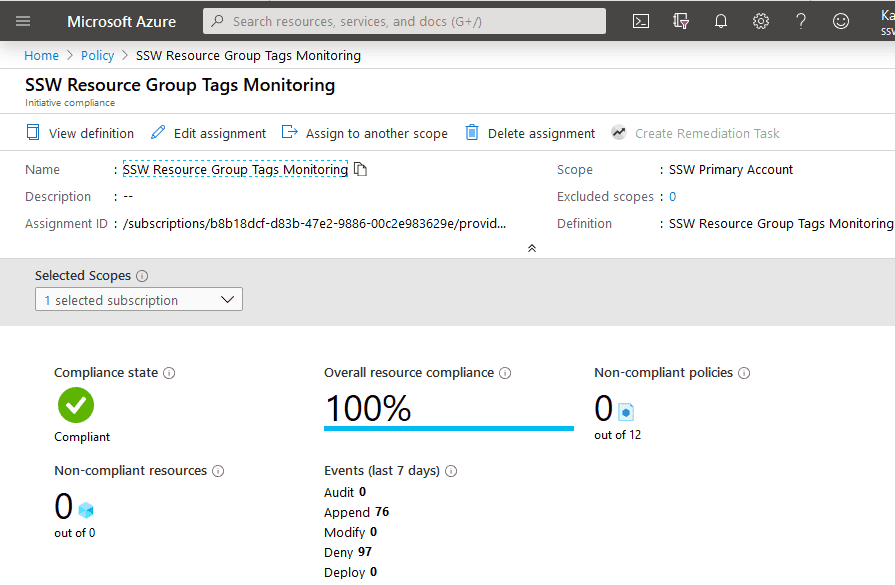

Figure: Azure Portal

If you use a strong naming convention and are using Tags to their full extent in Azure, then it is time for the next step.

Azure Policy is a strong tool to help govern your Azure subscription. It makes it easier to fall into the Pit of Success when creating or updating resources. Some of its features:

- You can deny creation of a Resource Group that does not comply with the naming standards

- You can deny creation of a Resource if it doesn't possess the mandatory tags

- You can append tags to newly created Resource Groups

- You can audit the usage of specific VMs or SKUs in your Azure environment

- You can allow only a set of SKUs within Azure

Azure Policy allows you to create initiatives (groups of policies) that work toward a single objective, e.g. an initiative to audit all tags within a subscription, or to allow creation of only some types of VMs.

You can delve deep on it here: https://docs.microsoft.com/en-us/azure/governance/policy/overview
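As an illustration of the "mandatory tags" feature listed above, here is a minimal sketch that builds the rule portion of a policy definition denying any resource that lacks a given tag. The helper name `require_tag_policy` and the tag name `cost-category` are assumptions chosen for this example, and the output is only the rule fragment, not a complete definition ready to deploy.

```python
import json


def require_tag_policy(tag_name: str) -> dict:
    """Build a policy fragment that denies resources missing `tag_name`.

    Hypothetical helper for illustration; a full policy definition also
    needs a display name, description and (usually) parameters.
    """
    return {
        # "Indexed" limits evaluation to resource types that support tags.
        "mode": "Indexed",
        "policyRule": {
            "if": {
                "field": f"tags['{tag_name}']",
                "exists": "false",
            },
            "then": {"effect": "deny"},
        },
    }


# 'cost-category' is an assumed tag name used only for this sketch.
policy = require_tag_policy("cost-category")
print(json.dumps(policy, indent=2))
```

Swapping the effect to `audit` would report non-compliant resources instead of blocking them, which can be a gentler way to introduce a new rule.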

Azure Machine Learning provides an easy-to-use yet feature-rich platform for conducting machine learning experiments. This introduction provides an overview of ML Studio functionality, and how it can be used to model and predict interesting real world problems.

Azure Notebooks offer a simple, transparent and complete technology for analysing data and presenting the results. They are quickly becoming the default way to conduct data analysis in the scientific and academic community.

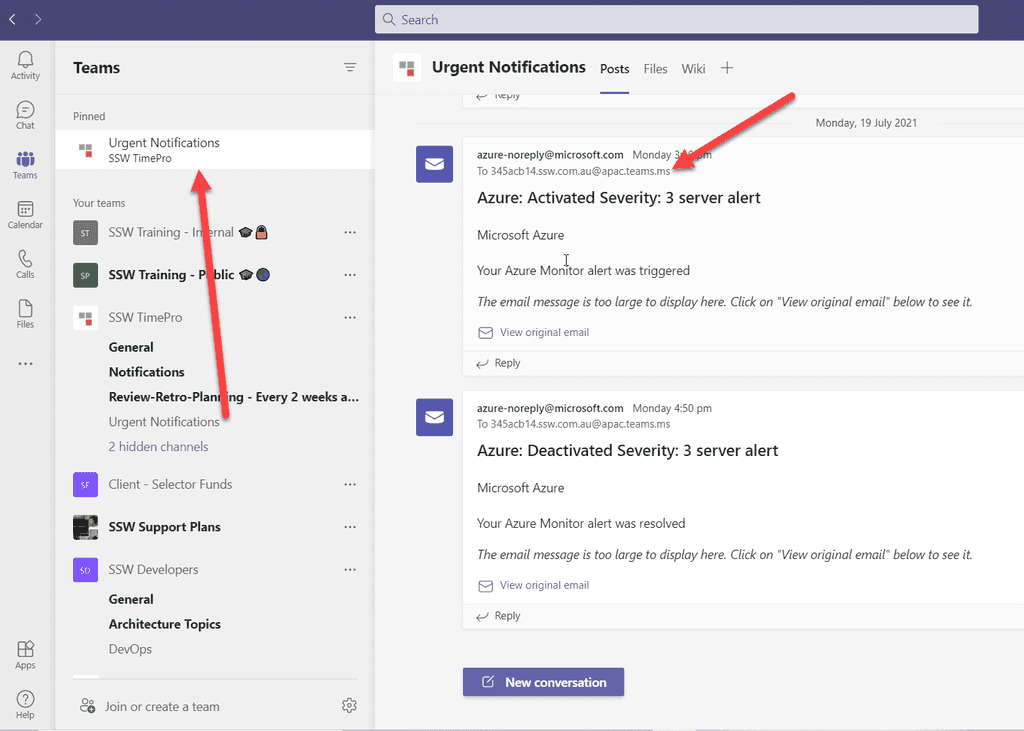

Most sysadmins set up Azure alerts to go to a few people, and in doing so give themselves the job of forwarding the email to the right people every time there is a problem. What happens when they are away? And why should they need to keep adding and removing email addresses when people join and leave the team?

There is a better way. Have those emails go to the Team. Every team channel has a specific email address and then Team members can pin that. This way these important emails are sitting right at the top.

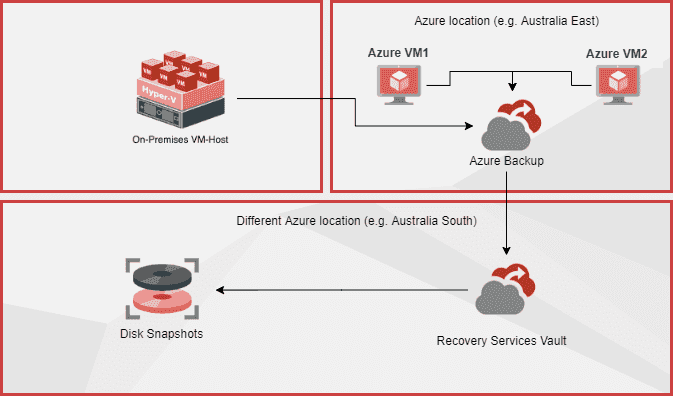

Azure Site Recovery is the best way to ensure business continuity by keeping business apps and workloads running during outages. It is one of the fastest ways to get redundancy for your VMs in a secondary location. For on-premises local backup see Do you know why to use Data Protection Manager?

Ensuring business continuity is a priority for the System Administrator team, and is part of any good disaster recovery plan. Azure Site Recovery allows an organization to replicate and sync Virtual Machines from on-premises (or even different Azure regions) to Azure. This replication can be set to whatever frequency the organization deems necessary, from Daily/Weekly through to constant replication.

When there is an issue, restoration can take just minutes - you simply switch over to the VMs in Azure! They will keep the business running while the crisis is dealt with. The server will be in the same state as the last backup. Or, if the issue is software, you can restore an earlier version of the virtual machine within a few minutes as well.

Figure: Azure Backup and Site Recovery backs up on-premises and Azure Virtual Machines

Azure App Services are powerful and easy to use. Lots of developers choose them as the default hosting option for their Web Apps and Web APIs. However, setting up a staging environment and managing deployments to it can be tricky.

We can choose to create a second resource group or subscription to host our staging resources. As a great alternative, we can use a fully-fledged App Service feature called deployment slots.

How to use deployment slots

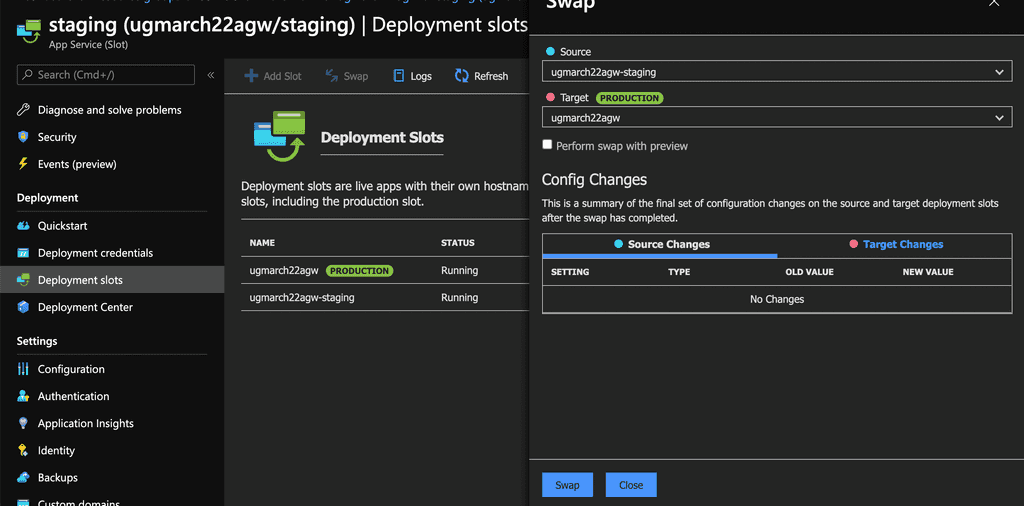

To start using deployment slots, we can spin up another web app – it sits next to your original web app with a different URL. Your production URL could be production.website.com and the corresponding staging slot staging.website.com. Your users access the production web app while you deploy a new version of the web app to the staging slot. That way, the updated web app can be tested before it goes live. You can then swap the staging and production slots with a single click. See figures 1 to 5 below.

Other benefits of deployment slots

The benefit of using deployment slots is that if anything goes wrong on your production web app, you can easily roll it back by swapping with the staging slot – the previous version of your web app sits on the staging slot, ready to be swapped back at any time before a newer version is pushed to the staging slot.
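The swap-and-rollback behaviour described above can be modelled in a few lines. This is a conceptual sketch only: the `WebApp` class and version labels are made up for illustration and do not call any Azure API.

```python
from dataclasses import dataclass


@dataclass
class WebApp:
    """Toy model of an App Service with a production and a staging slot."""

    production: str
    staging: str

    def deploy_to_staging(self, version: str) -> None:
        # New builds always land on staging first, never on production.
        self.staging = version

    def swap(self) -> None:
        # A swap exchanges what each slot is serving.
        self.production, self.staging = self.staging, self.production


app = WebApp(production="v1", staging="v1")

app.deploy_to_staging("v2")  # test v2 on the staging URL
app.swap()                   # release: production now serves v2
after_release = (app.production, app.staging)

app.swap()                   # something went wrong: swap again to roll back
after_rollback = (app.production, app.staging)

print(after_release, after_rollback)
```

The rollback is just a second swap, which is why it only works until a newer version overwrites the old one sitting in the staging slot.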

Deployment slots can also work hand in hand with your blue-green deployment strategy – you can gradually opt users in to the beta features on the staging slot.

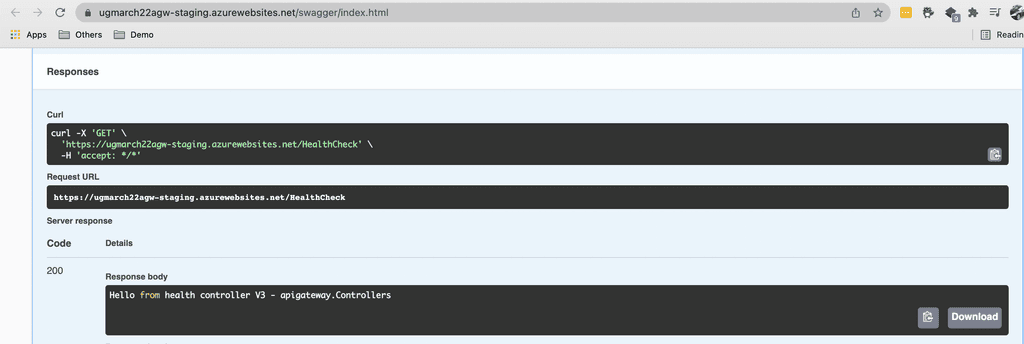

Figure 1: Before Swap - Production slot

Figure 2: Before swap - Staging slot

Figure 3: Swap the slot with one click

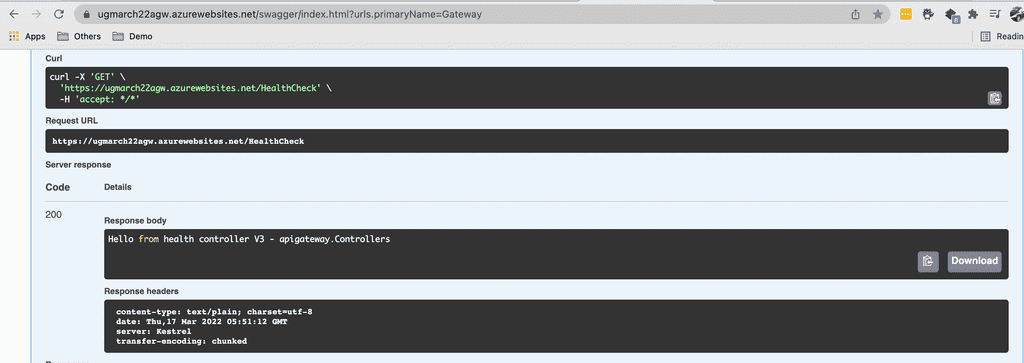

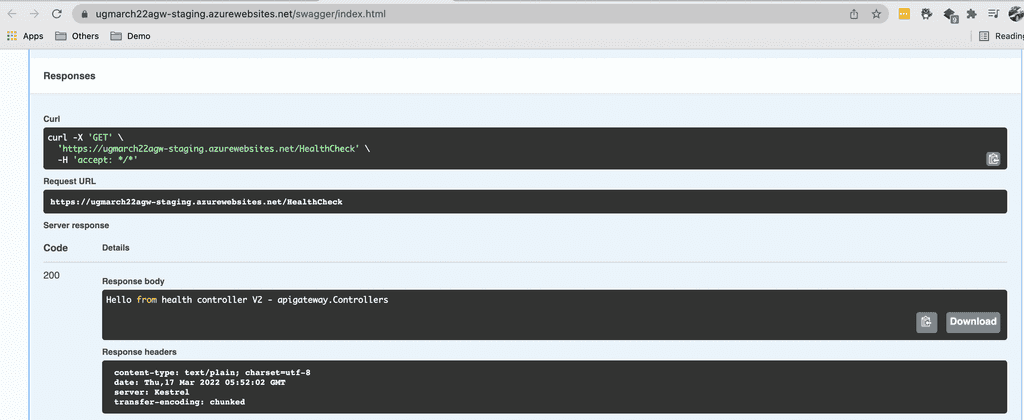

Figure 4: After swap – Production slot

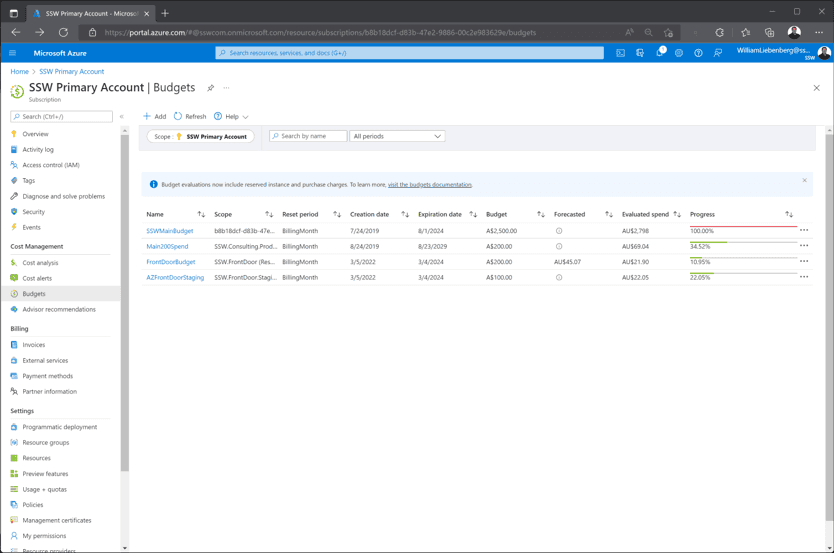

Figure 5: After swap – Staging slot

Azure costs can be difficult to figure out and it is important to make sure there are no hidden surprises. To avoid bill shock, it is crucial to be informed. Once you are informed, you can take the appropriate actions to reduce costs.

Let's have a look at the tools and processes that can be put in place to help manage Azure costs:

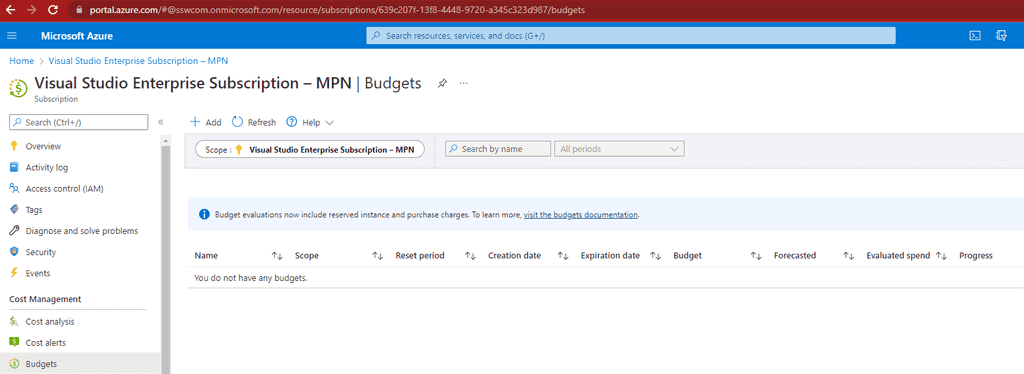

Video: Monitoring your Azure $ costs with Warwick Leahy (4 min)

Budgets - Specify how much you aim to spend

Budgets are a tool that allows users to define how much money should be spent on either an Azure Subscription or a specific resource group.

It is critical that an overarching budget is set up for every subscription in your organization. The budget figure should define the maximum amount expected to be spent every month.

In addition to the overarching budget, specific apps can be targeted to monitor how much is being spent on them. Each time a new service is proposed, it is a good idea to have a cost conversation. Remember to jump into Azure and create a new budget to monitor that app.

Subscriptions - Split costing by environment

In addition to budgets, it's also a good idea to split costing between production and non-production scenarios. This can help diagnose why there are unexpected spend fluctuations, e.g. load testing performed on the test site. Also, there are sometimes discounts that can be applied to a subscription only used for dev/test scenarios.

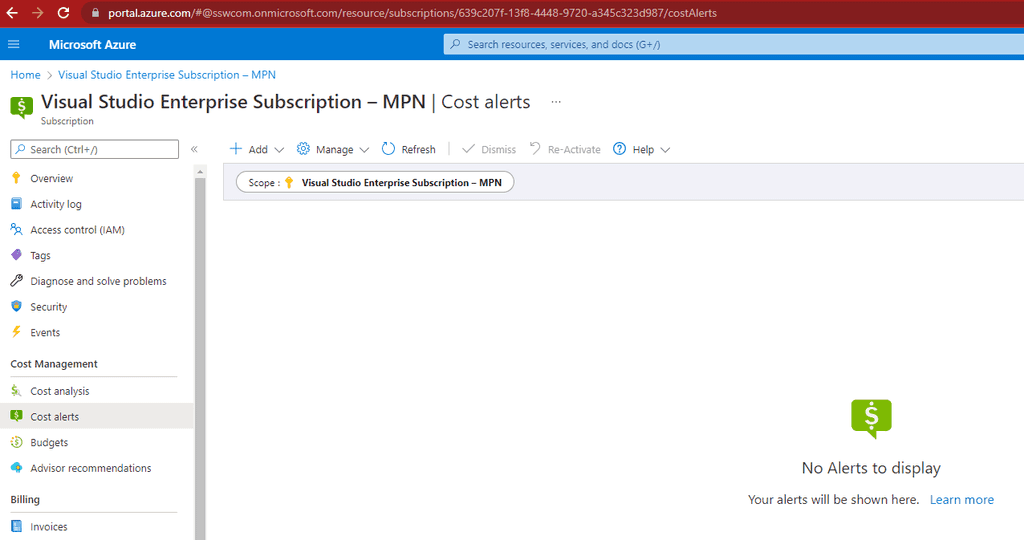

Figure: Bad example - No budget has been set up, disaster could be imminent and no one would know 🥶!

Cost alerts - Make sure you know something has gone wrong

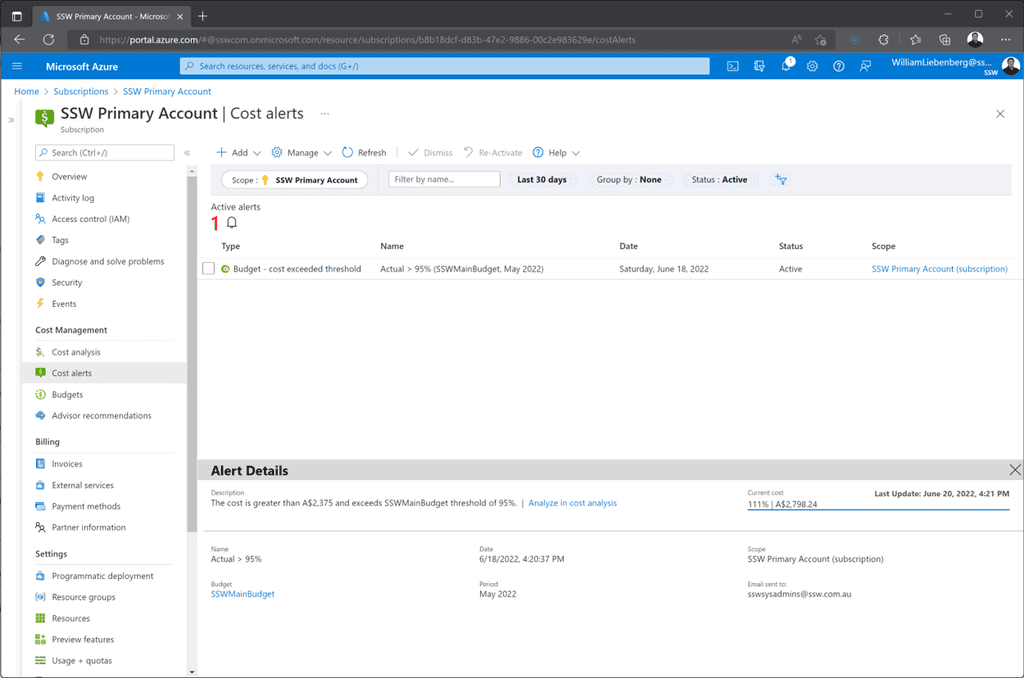

Once a budget is set up, cost alerts are the next important part for monitoring costs. Cost alerts define the notifications that are sent out when budget thresholds are being exceeded. For example, it might be set to send out an alert at 50%, 75%, 100% and 200%.

Make sure to set up alerts on all the thresholds that are important to the company.
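To make the threshold idea concrete, here is a small sketch that works out which alert thresholds a given spend has crossed. The function name and the dollar figures are hypothetical; the default percentages are the example ones mentioned above.

```python
def crossed_thresholds(
    budget: float,
    spend: float,
    thresholds: tuple[int, ...] = (50, 75, 100, 200),
) -> list[int]:
    """Return every threshold (in % of budget) the current spend has reached."""
    if budget <= 0:
        raise ValueError("budget must be positive")
    percent_used = spend / budget * 100
    return [t for t in thresholds if percent_used >= t]


# Assumed figures: a $1000 monthly budget with $800 spent so far (80%).
alerts = crossed_thresholds(budget=1000, spend=800)
print(alerts)
```

A threshold above 100% (such as 200%) is useful because spending does not stop at the budget; it keeps an alert in reserve for a runaway cost.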

If the company is really worried about costs, an Azure runbook could even be set up to disable resources after exceeding the budget limit. However, that isn't a very common practice since nobody wants the company website to go down randomly!

Cost analysis - What if you get an alert?

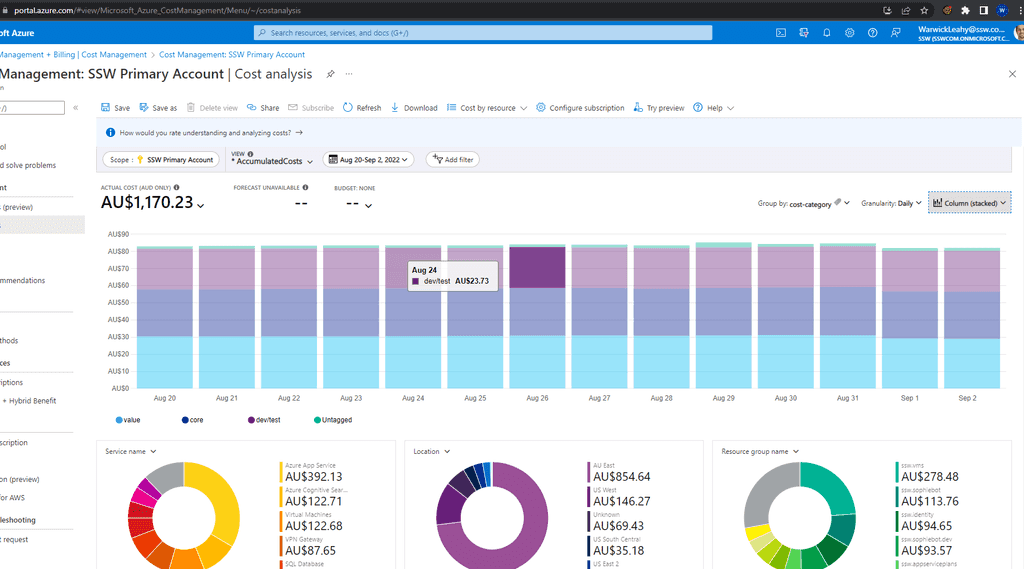

It can be scary when you get an alert. Luckily, Azure has a nice tool for managing costs, called Cost Analysis. You can break down costs by various attributes (e.g. resource group or resource type).

Using this tool helps identify where the problem lies, and then you can build a plan of attack for handling it.

Note: If your subscription is a Microsoft Sponsored account, you can't use the Cost Analysis tool to break down your costs, unfortunately. Microsoft has this planned for the future, but it's not here yet.

Tag your resources - Make it easier to track costs

Adding a tag of cost-category to each of your resources makes it easier to track costs over time. This will allow you to see the daily costs of your Azure resources based on whether they are Core, Value adding or Dev/Test. Then you can quickly turn off resources to save money if you require. It also helps you to see where money is disappearing.

Running a report every fortnight (grouped by the cost-category tag) will highlight any spikes in resource costs - daily reports are probably too noisy, while monthly reports have the potential for overspend to last too long.
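As a rough sketch of what such a report does, the snippet below groups daily cost rows by their cost-category tag. The row layout, resource names and amounts are invented for illustration; a real report would start from a cost export rather than a hard-coded list.

```python
from collections import defaultdict

# Hypothetical cost rows; real data would come from a cost export.
daily_costs = [
    {"date": "2024-05-01", "resource": "web-plan", "tags": {"cost-category": "Core"}, "cost": 12.5},
    {"date": "2024-05-01", "resource": "sql-db", "tags": {"cost-category": "Core"}, "cost": 2.5},
    {"date": "2024-05-01", "resource": "test-vm", "tags": {"cost-category": "Dev/Test"}, "cost": 4.0},
    {"date": "2024-05-02", "resource": "web-plan", "tags": {"cost-category": "Core"}, "cost": 12.5},
    {"date": "2024-05-02", "resource": "mystery-vm", "tags": {}, "cost": 9.0},
]


def cost_by_category(rows, tag="cost-category"):
    """Sum costs per (date, category); untagged resources get their own bucket."""
    totals = defaultdict(float)
    for row in rows:
        category = row["tags"].get(tag, "Untagged")
        totals[(row["date"], category)] += row["cost"]
    return dict(totals)


totals = cost_by_category(daily_costs)
for (date, category), cost in sorted(totals.items()):
    print(f"{date}  {category:<10} ${cost:.2f}")
```

Keeping an explicit "Untagged" bucket makes missing tags visible instead of silently dropping those costs from the report.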

Figure: Daily costs by category

Approval process - Don't let just anyone create resources

Managing the monthly spend on cloud resources (e.g. Azure) is hard. It gets harder for the Spend Master (e.g. SysAdmins) when developers add services without sending an email to aid in reconciliation.

Developers often have high permissions (e.g. Contributor permissions to a Resource Group) and are able to create resources without the Spend Master knowing, which leads to budget and spending problems at the end of the billing cycle.

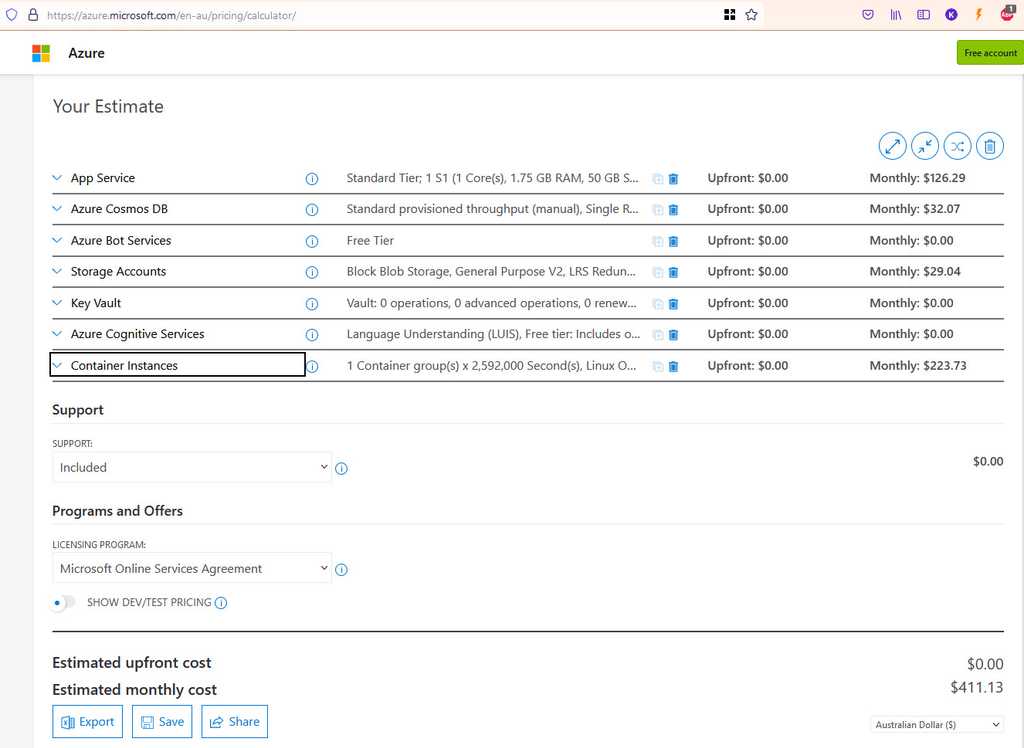

For everyone to be on the same page, the process a developer should follow is:

- Use the Azure calculator - Work out the monthly resource $ price

- Email the Spend Master with $ and a request to create resources in Azure, like the below:

To: Spend Master

Subject: Purchase Please - Azure Resource Request for {{ PRODUCT/SERVICE }}

Hi Spend Master aka SysAdmins

I would like you to provision a new Azure Resource for {{ PRODUCT/SERVICE }}. This is for {{ BUSINESS REASONS FOR RESOURCE }}

- Azure Resource needed: I would like to create a new App Service Plan

- Azure Calculator link: {{ LINK }}

- Environment: {{ DEV/STAGING/PROD }}

Project details:

- Project Name: A new project called {{ PROJECT NAME }}

- Project Description (The SysAdmin will copy this info to the Azure Tag): {{ DESCRIPTION }}

- Project URL (e.g. Azure DevOps / Github): {{ URL }}

Total:

${{ AMOUNT }} AUD + GST/month (${{ AMOUNT }} AUD + GST/year)

Tip: Make sure you include the annual cost, as per: Do you include the annual cost in quotes?

Figure: I generated the price from https://azure.microsoft.com/en-au/pricing/calculator - Please approve

< As per https://ssw.com.au/rules/azure-budgets >

- If the request is approved, remember to add a cost-category tag to the new resource once it is created

Make sure you include all resources you intend to create, even if they should be free. For example, you might create an App Service on an existing, shared App Service Plan. The Spend Master will still need to be aware of this, in case the App Service Plan needs to be scaled up.

Dealing with questions from Product Owners about expenses related to applications hosted on Azure can be a real headache 🥲

Get ready to empower your Product Owners! When it comes to Azure expenses, you want to be informed and monitor your costs. You can also have a solution that not only helps you understand where the spending is coming from, but also helps you find ways to optimize it. With Azure Cost Analysis, you can confidently provide your Product Owners with insights and recommendations that will save time and money, and make everyone's day a little brighter ✨

Video: Managing your Azure Costs | Bryden Oliver | SSW Rules (5 min)

Always tackle the biggest 3 costs first. In most instances they will be upwards of 98% of your spend, particularly if you are in a wasteful environment. I have seen MANY projects where the largest cost by a significant margin was Application Insights.

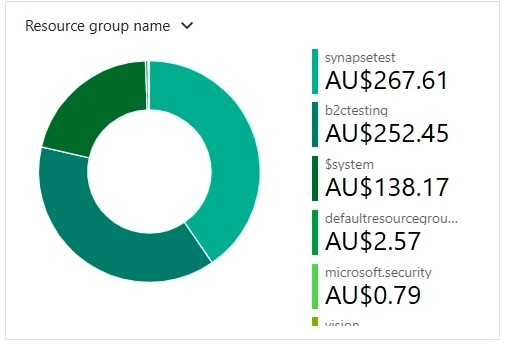

- Bryden Oliver, Azure expert

Azure Cost Analysis gives you a detailed breakdown of where any Azure spending is coming from. It breaks down your cost by:

- Scoped Area e.g. a subscription

- Resource Group e.g. Northwind.Website

- Location e.g. Australia East

- Service type e.g. Azure App Service

Note: You can also 'filter by' any of these things to give you a narrowed down view.

Analysing the expenditure - Finding the big dogs 🐶

To optimize spending, analyze major costs in each category. Generally, it's a good idea to focus on the top 3 contributors - optimizing beyond that is usually not worth the effort.

Key questions to ask:

- Do you need that resource?

- Can you scale down?

- Can you refactor your application to consume less?

- Can you change the type of service or consumption model?
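The "top 3 contributors" heuristic above can be sketched as a simple ranking. The service names and amounts below are made up for illustration; in practice the figures would come from a Cost Analysis export.

```python
def top_contributors(costs: dict[str, float], n: int = 3) -> list[tuple[str, float, float]]:
    """Return the n largest costs as (name, cost, percent of total spend)."""
    total = sum(costs.values())
    if total <= 0:
        return []
    ranked = sorted(costs.items(), key=lambda item: item[1], reverse=True)[:n]
    return [(name, cost, round(cost / total * 100, 1)) for name, cost in ranked]


# Hypothetical monthly costs per service type.
monthly_costs = {
    "Application Insights": 600.0,
    "Azure App Service": 250.0,
    "SQL Database": 100.0,
    "Storage": 30.0,
    "Key Vault": 20.0,
}

top3 = top_contributors(monthly_costs)
for name, cost, share in top3:
    print(f"{name:<22} ${cost:>7.2f}  {share}%")
```

With these assumed numbers the top three account for 95% of the spend, which is why chasing the remaining services is usually not worth the effort.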

Scoped Area

The cumulative costs of a selected area over a given time period, e.g. the cost of a subscription charted over the last year, showing the periods of higher or sudden growth during that time.

Figure: Azure Portal | Cost Analysis | Scoped Area Chart e.g. in February it was deployed and in August a marketing campaign caused more traffic

Resource Group

The cost of each resource group in the scoped area e.g. the cost of the Northwind website infrastructure.

Look at the most expensive resource group and try to reduce it. Ignore the tiny ones.

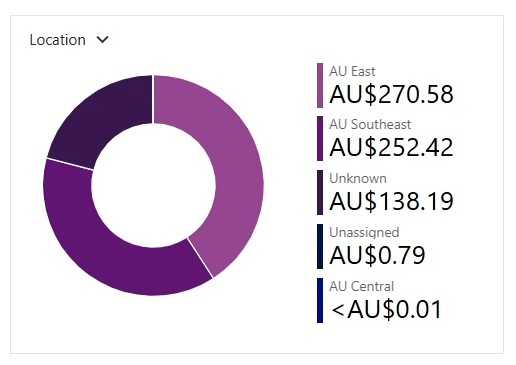

Figure: Azure Portal | Cost Analysis | Resource Group Breakdown

Location

The cost of each location e.g. Australia East.

If you have your applications spread across multiple locations, this chart can help figure out if one of those locations is costing more than others. Consider scaling each location to the scale of usage in that location ⚖️.

Figure: Azure Portal | Cost Analysis | Location breakdown

Service type

The cost of each service used e.g. Azure App Service.

If a specific service is costing a lot of money, consider if there is a service that might be better suited, or if that service can have its consumption model adapted to better fit the usage levels.

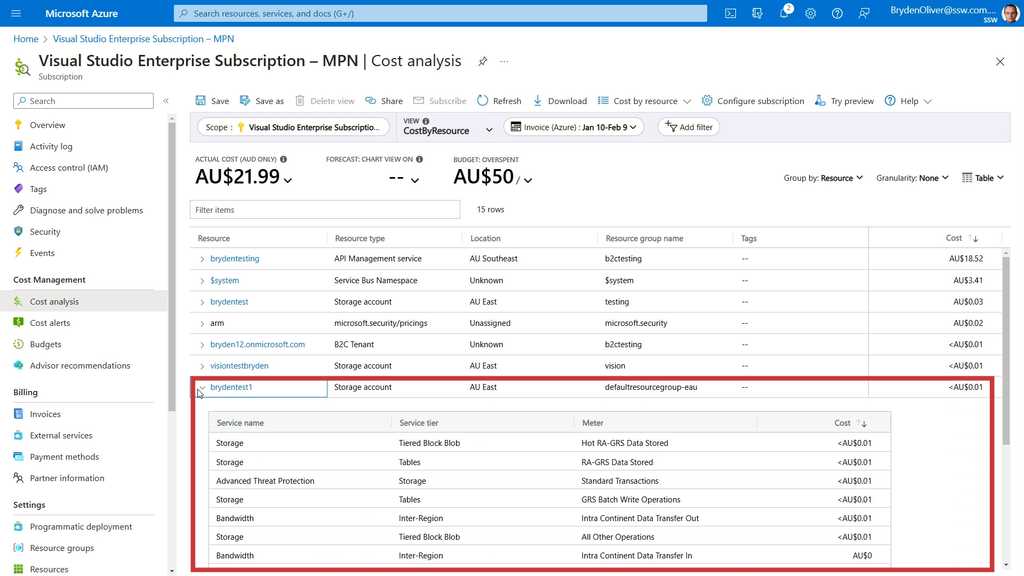

Figure: Azure Portal | Cost Analysis | Service type breakdown

What if you suspect a specific resource is a problem?

The Azure Cost Analysis tool also allows for different views to be selected. If you think a specific resource is causing a problem, then select the "CostByResource" view and then you can view each aspect of a resource which is costing money. That way you can identify an area which can be improved 🎯.

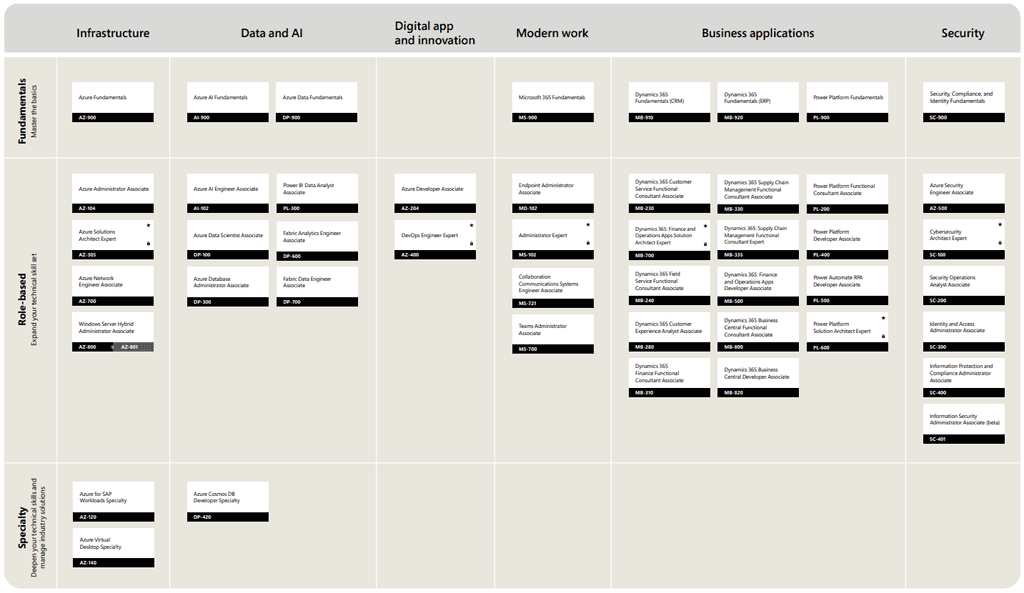

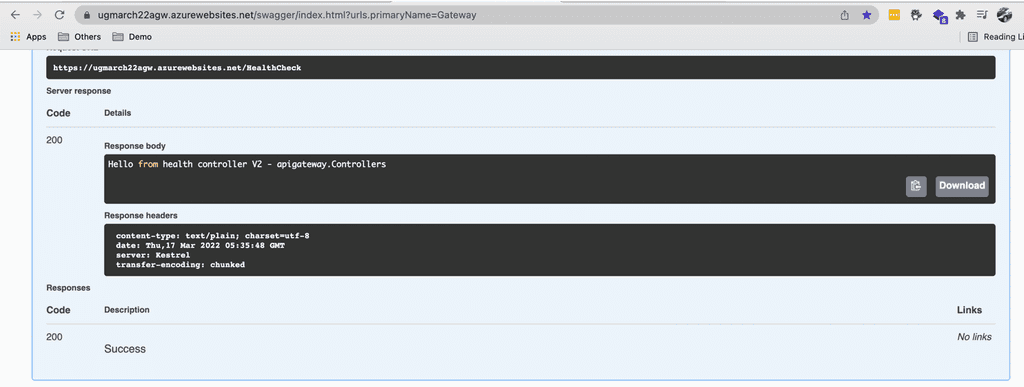

Figure: Azure Portal | Cost Analysis | View | CostByResource | Resource breakdown

In Entra ID (formerly Azure AD), App Registrations are used to establish a trust relationship between your app and the Microsoft identity platform. This allows you to give your app access to various resources, such as Graph API.

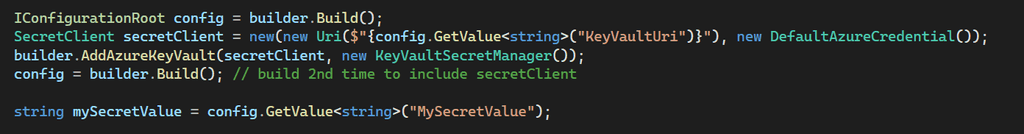

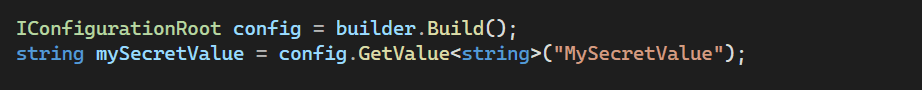

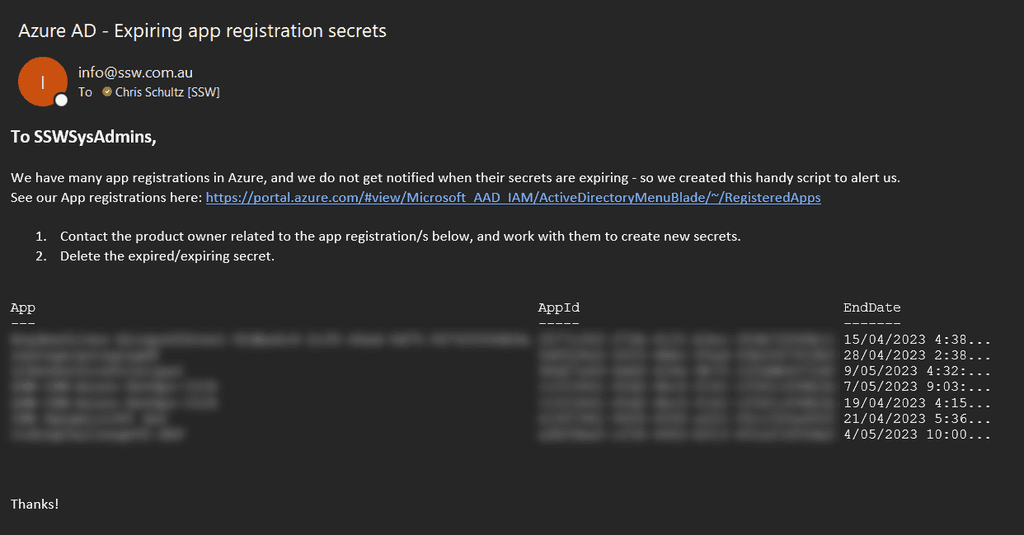

App Registrations use secrets or certificates for authentication. It is important to keep track of the expiry date of these authentication methods, so you can update them before things break.
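The core of any expiry check is a date comparison, sketched below in Python. The credential list is invented sample data, and its `displayName` and `endDateTime` keys are an assumption modelled loosely on how Microsoft Graph describes credentials; nothing here queries Entra ID.

```python
from datetime import datetime, timedelta, timezone


def expiring_soon(credentials: list[dict], now: datetime, within_days: int = 30) -> list[str]:
    """Return names of credentials that have expired or will within `within_days`."""
    cutoff = now + timedelta(days=within_days)
    return [
        cred["displayName"]
        for cred in credentials
        if datetime.fromisoformat(cred["endDateTime"]) <= cutoff
    ]


# Hypothetical credentials; real values would be read from the app registration.
sample_credentials = [
    {"displayName": "web-app-secret", "endDateTime": "2024-06-15T00:00:00+00:00"},
    {"displayName": "long-lived-cert", "endDateTime": "2025-01-01T00:00:00+00:00"},
    {"displayName": "old-secret", "endDateTime": "2024-05-01T00:00:00+00:00"},
]

# A fixed "now" keeps the example reproducible.
today = datetime(2024, 6, 1, tzinfo=timezone.utc)
needs_attention = expiring_soon(sample_credentials, now=today)
print(needs_attention)
```

Already-expired credentials are deliberately included in the result, since they are the ones most likely to be breaking something right now.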

Use a PowerShell script to check expiry dates